We are back with another episode of True ML Talks. In this episode, we dive deep into the Loblaw Digital ML platform, and we are speaking with Adhithya Ravichandran.

Adhithya is a Senior Software Engineer at Loblaw Digital. He is part of the machine learning platform team, where he builds and maintains the machine learning platform.


Our conversation with Adhithya covers the following aspects:

- ML Platform Team at Loblaw

- Customized ML Tools and Components

- Helios Recommendation Engine

- Recommendation Systems: Personalization and Handling Real-Time Context

- Empowering Machine Learning Observability

- Advancements in Generative AI

Watch the full episode below:

ML Platform Team at Loblaw

The ML platform team, responsible for platformizing machine learning, has existed for over five years. It was formed to make machine learning operations more efficient across a large organization. The team is small, with fewer than ten members, but it plays a vital role in implementing niche products and toolsets.

Customized ML Tools and Components

Airflow and Other Niche Toolsets

Loblaw Digital's ML platform team employs niche products and toolsets, including Airflow, a workflow orchestrator. Airflow is used not only by machine learning teams but also extensively by data engineering, business intelligence, and analytics teams. The team emphasizes open-source tools and has developed robust practices for using them efficiently.
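To make the orchestrator's role concrete, here is a minimal sketch of the core idea behind a tool like Airflow: tasks declare their upstream dependencies and run in an order that respects them. It uses only Python's standard `graphlib` module and is not Airflow's API; the task names are hypothetical. In Airflow itself, the same graph would be declared as a DAG of operators wired together with `>>`.

```python
from graphlib import TopologicalSorter

# Hypothetical ML pipeline: each task maps to the set of upstream tasks it depends on.
pipeline: dict[str, set[str]] = {
    "extract_events": set(),
    "build_features": {"extract_events"},
    "train_model": {"build_features"},
    "evaluate_model": {"train_model"},
    "publish_report": {"build_features"},
}


def run_order(dag: dict[str, set[str]]) -> list[str]:
    """Return one execution order in which every task runs after all of its upstream tasks."""
    return list(TopologicalSorter(dag).static_order())


order = run_order(pipeline)
print(order)
```

A real orchestrator adds scheduling, retries, and parallel execution of independent tasks (here `train_model` and `publish_report` could run concurrently), which is why teams adopt a tool like Airflow rather than hand-rolling this logic.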

Streamlining Workflows with Code Templates

The ML platform team collaborates closely with specific teams responsible for use cases. They identify efficient methods to accomplish tasks and convert these best practices into reusable code templates and Python packages. Over time, these practices have evolved into a core set of codified practices, accessible to all teams, making workflows more streamlined and efficient.
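One common way to turn such practices into a reusable package is the template-method pattern: the shared package fixes the sequence of steps and the built-in checks, and each use-case team fills in only its own steps. The sketch below assumes that shape; `PipelineTemplate` and `PopularityPipeline` are hypothetical names, not Loblaw Digital's actual packages.

```python
from abc import ABC, abstractmethod
from typing import Any


class PipelineTemplate(ABC):
    """Hypothetical shared base class: the platform team owns the fixed run() sequence,
    and use-case teams implement only the steps specific to their model."""

    def run(self) -> dict[str, Any]:
        rows = self.load_data()
        self.validate(rows)  # shared check every team gets for free
        model = self.train(rows)
        return {"model": model, "rows": len(rows)}

    def validate(self, rows: list[dict[str, Any]]) -> None:
        # Default validation shipped with the template; teams may override it.
        if not rows:
            raise ValueError("no training rows loaded")

    @abstractmethod
    def load_data(self) -> list[dict[str, Any]]: ...

    @abstractmethod
    def train(self, rows: list[dict[str, Any]]) -> Any: ...


class PopularityPipeline(PipelineTemplate):
    """Example use-case implementation: rank items by how often they were purchased."""

    def load_data(self) -> list[dict[str, Any]]:
        return [{"item": "milk"}, {"item": "bread"}, {"item": "milk"}]

    def train(self, rows: list[dict[str, Any]]) -> list[str]:
        counts: dict[str, int] = {}
        for row in rows:
            counts[row["item"]] = counts.get(row["item"], 0) + 1
        return sorted(counts, key=counts.get, reverse=True)


result = PopularityPipeline().run()
print(result)  # {'model': ['milk', 'bread'], 'rows': 3}
```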

Helios Recommendation Engine

The Helios recommendation engine, a cornerstone of Loblaw Digital's e-commerce platform, was born out of the need to enhance user experience and capitalize on the vast amount of data at the company's disposal. Being Canada's largest retailer with a substantial customer loyalty program, Loblaw Digital had access to an unparalleled dataset. This wealth of information presented an opportunity to build custom recommendation models tailored to Canadian customers' preferences.

Initially, Loblaw Digital relied on third-party tools for recommendations. However, as the project gained momentum, it became evident that building an in-house recommendation engine was not only feasible but also cost-effective. With growing expertise in deploying their own models, the team leveraged tools like Google Kubernetes Engine (GKE) on Google Cloud Platform (GCP) and Seldon, an open-source model serving tool. These components formed the foundation for creating an API wrapper around their recommendation models.
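As an illustration of the kind of API wrapper described above: Seldon Core's Python language wrapper, as we understand its convention, expects a plain class that loads the model in `__init__` and exposes a `predict(self, X, features_names=None)` method, which Seldon then serves over REST/gRPC. The sketch below follows that shape with a hypothetical `HeliosRecommender` and toy in-memory scores; check the Seldon documentation for the exact signature in the version you run.

```python
from typing import Optional


class HeliosRecommender:
    """Hypothetical model class in the shape Seldon Core's Python wrapper expects:
    the serving container builds it once and calls predict() per request."""

    def __init__(self, top_k: int = 3):
        self.top_k = top_k
        # Stand-in for loading a trained model artifact at start-up.
        self.scores = {
            "u1": {"apples": 0.9, "bread": 0.4, "cheese": 0.7, "eggs": 0.1},
            "u2": {"apples": 0.2, "bread": 0.8, "cheese": 0.3, "eggs": 0.6},
        }
        self.fallback = ["bread", "apples", "eggs"]  # popular items for unknown users

    def predict(self, X, features_names: Optional[list] = None) -> list:
        """Each row of X is assumed to hold one user id; returns top-k item ids per row."""
        results = []
        for row in X:
            user_scores = self.scores.get(row[0])
            if user_scores is None:
                results.append(self.fallback[: self.top_k])
            else:
                ranked = sorted(user_scores, key=user_scores.get, reverse=True)
                results.append(ranked[: self.top_k])
        return results


model = HeliosRecommender(top_k=2)
print(model.predict([["u1"], ["u3"]]))  # [['apples', 'cheese'], ['bread', 'apples']]
```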

Model serving was a pivotal challenge that the Helios recommendation engine aimed to address. Loblaw Digital could train sophisticated recommendation models and had an array of internal teams working on various aspects of its e-commerce platform. However, integrating those models seamlessly into the platform proved complex when using third-party providers. This led to the decision to develop a custom solution that could serve their own models efficiently.

Although Seldon is technically a third-party tool, Loblaw Digital maintained the Seldon operators and customized them to meet its specific needs. This approach gave the team greater control over its model serving infrastructure and ensured seamless integration with the e-commerce platform.

You can read more about the Helios Recommendation Engine in this blog written by the team at Loblaw Digital:


Optimizing Inferencing: Balancing Real-Time and Batch for the Helios Recommendation Engine

Loblaw Digital's inferencing needs are diverse, encompassing real-time serving for instant user recommendations and batch inferencing for other use cases. Historically, the team has relied primarily on batch inferencing, which is cost-effective because inferences are pre-computed and stored for efficient retrieval.

Recognizing the evolving e-commerce landscape, Loblaw Digital is increasingly embracing real-time inferencing to meet user demand for instant recommendations and to prioritize a seamless shopping experience.
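The trade-off between the two modes can be sketched as a single lookup function: a scheduled batch job precomputes recommendations into a store, and a request is sent to the model in real time only when it carries fresh in-session context. Everything here (the toy rankings, `recommend`, the in-memory `STORE`) is a hypothetical illustration, not Loblaw Digital's serving code.

```python
from typing import Optional

# Hypothetical per-user rankings standing in for a trained model's output.
BASE_RANKINGS = {
    "u1": ["cheese", "apples", "bread"],
    "u2": ["bread", "eggs", "apples"],
}
POPULAR = ["bread", "apples", "eggs"]  # fallback for users the model has not seen


def model_inference(user_id: str, last_viewed: Optional[str] = None) -> list[str]:
    """Toy 'model': start from the user's ranking and, if in-session context is
    available, move the item the user just viewed to the front."""
    ranking = list(BASE_RANKINGS.get(user_id, POPULAR))
    if last_viewed in ranking:
        ranking.remove(last_viewed)
        ranking.insert(0, last_viewed)
    return ranking


def precompute(user_ids: list[str]) -> dict[str, list[str]]:
    """Batch path: run inference ahead of time and store the results."""
    return {uid: model_inference(uid) for uid in user_ids}


# Stand-in for a key-value store populated by a scheduled batch job.
STORE = precompute(["u1", "u2"])


def recommend(user_id: str, last_viewed: Optional[str] = None) -> list[str]:
    if last_viewed is not None:
        # Real-time path: the request carries fresh context, so call the model now.
        return model_inference(user_id, last_viewed)
    # Batch path: cheap lookup; compute on demand only for users missing from the store.
    return STORE.get(user_id) or model_inference(user_id)


print(recommend("u1"))           # ['cheese', 'apples', 'bread']
print(recommend("u1", "bread"))  # ['bread', 'cheese', 'apples']
```

The batch path keeps per-request cost close to a key-value read, while the real-time path pays for a model call only when the extra context can change the answer.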

Recommendation Systems: Personalization and Handling Real-Time Context

Empowering Personalization

Personalization at the Core

Loblaw Digital has firmly established a foundation for personalization within its recommendation system. The team began with matrix multiplication, which has been its staple technique for a few years. As the work progressed, Loblaw Digital incorporated more sophisticated models, including large transformer models, to enhance personalization.
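A common form of the matrix-multiplication approach is to learn an embedding per user and per item (as in matrix factorization) and score every item for a user with a dot product, that is, one row of the product of the user matrix and the transposed item matrix. The sketch below assumes that setup with tiny made-up embeddings; it illustrates the technique and is not a description of Loblaw Digital's models.

```python
# Hypothetical learned embeddings (2 dimensions for readability; real models use many more).
USER_EMB = {"u1": [1.0, 0.0], "u2": [0.2, 0.9]}
ITEM_EMB = {"apples": [0.9, 0.1], "bread": [0.1, 0.8], "cheese": [0.7, 0.5]}


def matmul_transpose(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Compute A @ B^T: entry (i, j) is the dot product of row i of A and row j of B."""
    return [[sum(x * y for x, y in zip(row_a, row_b)) for row_b in b] for row_a in a]


def top_k(user_id: str, k: int = 2) -> list[str]:
    items = list(ITEM_EMB)
    # One row of the user-by-item score matrix.
    scores = matmul_transpose([USER_EMB[user_id]], [ITEM_EMB[i] for i in items])[0]
    ranked = sorted(zip(items, scores), key=lambda pair: pair[1], reverse=True)
    return [item for item, _ in ranked[:k]]


print(top_k("u1"))  # ['apples', 'cheese']
print(top_k("u2"))  # ['bread', 'cheese']
```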

Handling Real-Time Context Challenges

Real-Time Context: A Complex Challenge

In recommendation systems, responding to real-time context is a significant challenge. Context spans immediate user signals, such as the user's previous click, and longer-horizon information, such as seasonal patterns for specific products over the last two years. Balancing features at these two extremes presents a substantial engineering challenge.
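The difficulty is that both kinds of feature must meet at request time. The sketch below, assuming a simple linear scorer with hand-set weights (all names and numbers are hypothetical), combines a session feature derived from the user's last click with a seasonal index computed offline from long-term history. The session feature has to arrive within seconds, while the seasonal table can be refreshed by a batch job.

```python
# Hypothetical long-horizon feature: seasonal sales index per (item, ISO week),
# e.g. aggregated from two years of history. 1.0 means "typical week".
SEASONALITY = {
    ("pumpkin", 43): 3.0,
    ("pumpkin", 10): 0.2,
    ("sunscreen", 26): 2.5,
    ("sunscreen", 43): 0.3,
}
CATEGORY = {"pumpkin": "produce", "sunscreen": "health", "bread": "bakery"}
WEIGHTS = {"same_category_as_last_click": 2.5, "seasonal_index": 1.0}


def features(item: str, last_click_category: str, iso_week: int) -> dict[str, float]:
    return {
        # Short-term signal: what the user clicked a moment ago in this session.
        "same_category_as_last_click": float(CATEGORY[item] == last_click_category),
        # Long-term signal: how this item usually sells in this week of the year.
        "seasonal_index": SEASONALITY.get((item, iso_week), 1.0),
    }


def score(feats: dict[str, float]) -> float:
    return sum(WEIGHTS[name] * value for name, value in feats.items())


def rank(items: list[str], last_click_category: str, iso_week: int) -> list[str]:
    # In production, iso_week could come from datetime.date.isocalendar().
    return sorted(items, key=lambda i: score(features(i, last_click_category, iso_week)), reverse=True)


print(rank(["pumpkin", "sunscreen", "bread"], "bakery", 43))  # ['bread', 'pumpkin', 'sunscreen']
```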

Empowering Others Through the Platform Team

Loblaw Digital's platform team plays a pivotal role in addressing these complex challenges. It is developing data contracts and a "data as a service" approach to provide timely data to various stakeholders. For in-session, in-browser actions, the team has moved from a proprietary third-party vendor to the open-source Snowplow Analytics. This transition gives Loblaw Digital greater control over its data pipelines and makes data available faster to backend services, including recommendation models.

The platform team sits within the Data and Machine Learning Platform organization, which is responsible for various data-related functions, including real-time data pipelines and data as a service. Centralizing these functions in one organization facilitates efficient problem-solving and keeps the work aligned with the organization's vision.
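A data contract is essentially an agreed, enforced schema between the producers of events and their consumers. The sketch below assumes a hypothetical product-click event and checks incoming payloads against it in plain Python; Snowplow has its own schema-based event validation, and this is neither its API nor Loblaw Digital's actual contract.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class ProductClickEvent:
    """Hypothetical contract for a click event consumed by recommendation models."""
    event_id: str
    user_id: str
    product_id: str
    timestamp_ms: int


# Field name -> required Python type, mirroring the dataclass above.
CONTRACT: dict[str, type] = {
    "event_id": str,
    "user_id": str,
    "product_id": str,
    "timestamp_ms": int,
}


def validate(payload: dict[str, Any]) -> ProductClickEvent:
    """Reject events that break the contract before they reach backend services.
    Extra fields are ignored so producers can add data without breaking consumers."""
    missing = CONTRACT.keys() - payload.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    for name, expected in CONTRACT.items():
        value = payload[name]
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            raise TypeError(f"{name} must be {expected.__name__}, got {type(value).__name__}")
    return ProductClickEvent(**{name: payload[name] for name in CONTRACT})


event = validate({"event_id": "e1", "user_id": "u1", "product_id": "p9",
                  "timestamp_ms": 1_700_000_000_000, "page": "home"})
print(event.product_id)  # p9
```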

"We have now implemented our open-source version of Snowplow Analytics, so we have a lot more control over the pipelines from someone's browser or app, and over making that data available faster to our backend services, including models. I've seen some traction on that already." - Adhithya

Empowering Machine Learning Observability

Integrated Approach to Data and Observability

- Independence: Loblaw Digital's mature organization empowers machine learning engineers and data scientists to independently build data pipelines for their models.

- Self-Sufficiency: Streamlined processes and templates facilitate self-sufficiency.

- Data Discoverability: Organization-wide strategies promote data discoverability, enabling autonomous data access.

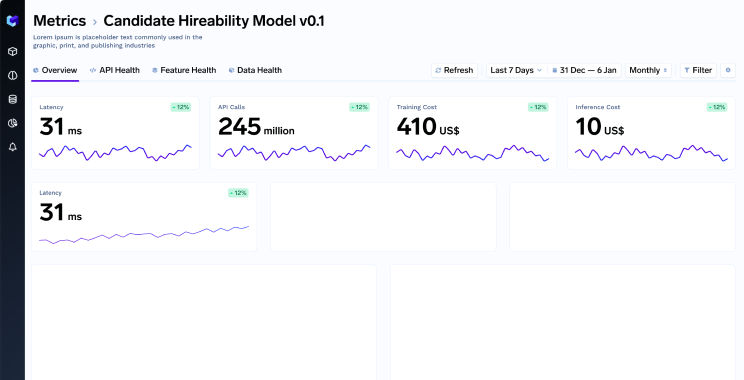

Comprehensive Machine Learning Observability

- Crucial Emphasis: Adhithya underscores the importance of machine learning observability.

- Metrics: General observability metrics encompass request volumes, latency, error rates, and deployment health.

- Centralized Approach: Loblaw Digital centralizes monitoring and observability, simplifying processes with out-of-the-box tools.
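The general metrics listed above can be computed per time window from request logs. The sketch below assumes a hypothetical `RequestRecord` with latency and HTTP status, treats 5xx responses as errors, and uses the nearest-rank percentile; a monitoring stack such as Prometheus would normally derive these from instrumented counters and histograms rather than raw records.

```python
from dataclasses import dataclass


@dataclass
class RequestRecord:
    latency_ms: float
    status: int  # HTTP status code returned by the model API


def summarize(records: list[RequestRecord]) -> dict[str, float]:
    """Request volume, p95 latency, and error rate for one time window of model-API traffic."""
    if not records:
        raise ValueError("no requests in window")
    latencies = sorted(r.latency_ms for r in records)
    n = len(latencies)
    # Nearest-rank p95: the smallest value with at least 95% of samples at or below it.
    rank = -(-95 * n // 100)  # integer ceil(0.95 * n), avoids float rounding
    errors = sum(1 for r in records if r.status >= 500)  # assumption: only 5xx count as errors
    return {
        "request_count": n,
        "p95_latency_ms": latencies[rank - 1],
        "error_rate": errors / n,
    }


window = [RequestRecord(float(ms), 500 if ms in (3, 7) else 200) for ms in range(1, 21)]
print(summarize(window))  # {'request_count': 20, 'p95_latency_ms': 19.0, 'error_rate': 0.1}
```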

Addressing Model Observability Challenges

- Focused on Data: Model observability focuses on assessing input data statistics within specific timeframes.

- Operational Agility: It aims to enable rapid responses based on data insights to meet operational needs.

- Vendor Solutions: Loblaw Digital explores vendor solutions for advanced observability within shorter timeframes.
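Assessing input-data statistics over a time window can start as simply as summarizing each feature per window and comparing it with a reference window. The sketch below assumes hypothetical thresholds and uses a basic mean-shift and missing-value check; production tools typically use richer distribution tests (for example population stability index or Kolmogorov-Smirnov), which this does not implement.

```python
from statistics import mean, pstdev
from typing import Optional


def window_stats(values: list[Optional[float]]) -> dict[str, float]:
    """Summary statistics for one feature over one time window (None = missing value).
    Assumes the window contains at least one non-missing value."""
    present = [v for v in values if v is not None]
    return {
        "null_rate": 1 - len(present) / len(values),
        "mean": mean(present),
        "std": pstdev(present),
    }


def drift_alerts(baseline, current, max_z: float = 3.0, max_null_rate_increase: float = 0.05) -> list[str]:
    """Compare the current window against a reference window and return alert names."""
    b, c = window_stats(baseline), window_stats(current)
    alerts = []
    # Simple heuristic: how many baseline standard deviations the mean has moved.
    if b["std"] > 0 and abs(c["mean"] - b["mean"]) / b["std"] > max_z:
        alerts.append("mean_shift")
    if c["null_rate"] - b["null_rate"] > max_null_rate_increase:
        alerts.append("null_rate_increase")
    return alerts


baseline = [10, 12, 11, 9, 10, 12, 11, 9]
print(drift_alerts(baseline, [10, 11, 10, 12]))  # []
print(drift_alerts(baseline, [20, 21, 19, 20]))  # ['mean_shift']
```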

Enhancing Observability with Specialized Solutions

- Vendor Advancements: Vendors develop solutions similar to a Prometheus stack optimized for real-time analytics.

- Leveraging Advantages: These solutions harness the advantages of a "big data stack" to compute metrics faster, tailored to model observability.

- Considering Adoption: Loblaw Digital considers adopting such solutions based on experience with self-hosted Prometheus.

Key Role of Centralized Logging

- Data Capture: Loblaw Digital employs a centralized logger service to capture machine learning API messages and responses.

- Efficient Operation: The service operates asynchronously with minimal service impact.

- Data Utilization: Captured data feeds into the organization's analytics data warehouse, facilitating comprehensive reporting and analysis.
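The key property of such a logger is that the request path only enqueues a record and returns, while a background worker ships records onward. The sketch below is a hypothetical in-process client using the standard library's `queue` and `threading`; the list `sink` stands in for whatever transport feeds the analytics warehouse, and dropping records when the queue is full is an assumed policy that favors serving latency over completeness.

```python
import queue
import threading
from typing import Any


class AsyncInferenceLogger:
    """Hypothetical client for a centralized inference logger.

    log() only enqueues and returns, so the model API's response is not delayed;
    a background thread drains the queue into `sink` (a list here, standing in
    for the pipeline that loads records into the analytics warehouse)."""

    _STOP = object()  # sentinel telling the worker to exit

    def __init__(self, sink: list, max_queue: int = 10_000):
        self._queue: queue.Queue = queue.Queue(maxsize=max_queue)
        self._sink = sink
        self.dropped = 0
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def log(self, record: dict[str, Any]) -> None:
        try:
            self._queue.put_nowait(record)  # never block the serving path
        except queue.Full:
            self.dropped += 1  # assumed policy: shed log records rather than slow responses

    def _drain(self) -> None:
        while True:
            record = self._queue.get()
            if record is self._STOP:
                break
            self._sink.append(record)

    def close(self) -> None:
        """Flush records enqueued so far, then stop the worker."""
        self._queue.put(self._STOP)
        self._worker.join()


sink: list = []
logger = AsyncInferenceLogger(sink)
logger.log({"request_id": "r1", "model_version": "v1", "items": ["bread"]})
logger.close()
print(len(sink))  # 1
```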

Advancing End-to-End Observability

- Operational: The centralized logger and observability solutions are already in use for specific use cases.

- Performance Tracking: Teams track model performances, correlate inferences with user actions, and assess model impact.

- Continual Enhancement: Loblaw Digital continues to enhance and centralize observability services for wider organizational adoption.
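Correlating inferences with user actions usually comes down to a join on a shared identifier. The sketch below assumes hypothetical log records keyed by `request_id` and computes an item-level click-through rate per model version; real attribution would also need time windows and deduplication, which are omitted here.

```python
from collections import defaultdict


def ctr_by_model(inferences: list[dict], clicks: list[dict]) -> dict[str, float]:
    """Item-level click-through rate per model version.
    Assumes both logs share a request_id: the logger records which items a model
    returned, and the clickstream records which of them the user clicked."""
    clicked = {(c["request_id"], c["item"]) for c in clicks}
    shown: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for inf in inferences:
        for item in inf["items"]:
            shown[inf["model_version"]] += 1
            if (inf["request_id"], item) in clicked:
                hits[inf["model_version"]] += 1
    return {version: hits[version] / shown[version] for version in shown}


inferences = [
    {"request_id": "r1", "model_version": "v1", "items": ["apples", "bread"]},
    {"request_id": "r2", "model_version": "v1", "items": ["cheese", "eggs"]},
    {"request_id": "r3", "model_version": "v2", "items": ["bread", "eggs"]},
]
clicks = [
    {"request_id": "r1", "item": "bread"},
    {"request_id": "r3", "item": "bread"},
    {"request_id": "r3", "item": "eggs"},
    {"request_id": "r9", "item": "milk"},  # click with no logged inference is ignored
]
print(ctr_by_model(inferences, clicks))  # {'v1': 0.25, 'v2': 1.0}
```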

Advancements in Generative AI

Intriguing Progress in Generative AI

Loblaw Digital is making substantial strides in the realm of generative AI. The team is exploring numerous opportunities in this domain. Internally, there is immense enthusiasm, not only in terms of utilizing well-known chat interfaces but also in building innovative products and hosting proprietary models.

Deep Commitment to Generative AI

Loblaw Digital is deeply invested in the potential of generative AI. The organization is actively considering the development of products leveraging generative AI. There is a strong desire to explore the possibility of hosting their own models in the future.

Leadership's Forward-Thinking Approach

Loblaw Digital's leadership is open to exploring a wide range of possibilities in the generative AI space. The organization's ambition extends beyond utilizing third-party vendors to envisioning a future where they can create and host their generative AI models. Loblaw Digital considers itself fortunate to be at the forefront of such exciting developments.

Read our previous blogs in the TrueML Series

Keep watching the TrueML YouTube series and reading the TrueML blog series.

TrueFoundry is an ML deployment PaaS over Kubernetes that speeds up developer workflows, giving developers full flexibility in testing and deploying models while ensuring full security and control for the infra team. Through our platform, we enable machine learning teams to deploy and monitor models in 15 minutes with 100% reliability, scalability, and the ability to roll back in seconds, allowing them to save cost and release models to production faster, enabling real business value realisation.