Model Serving & Inference

- Effortlessly deploy any open-source LLM with pre-configured optimizations.

- Connect to Hugging Face or your preferred model registry with ease.

- Leverage top-tier model servers like vLLM and SGLang for high-performance inference.

- Autoscaling and intelligent infrastructure provisioning.

Try it now

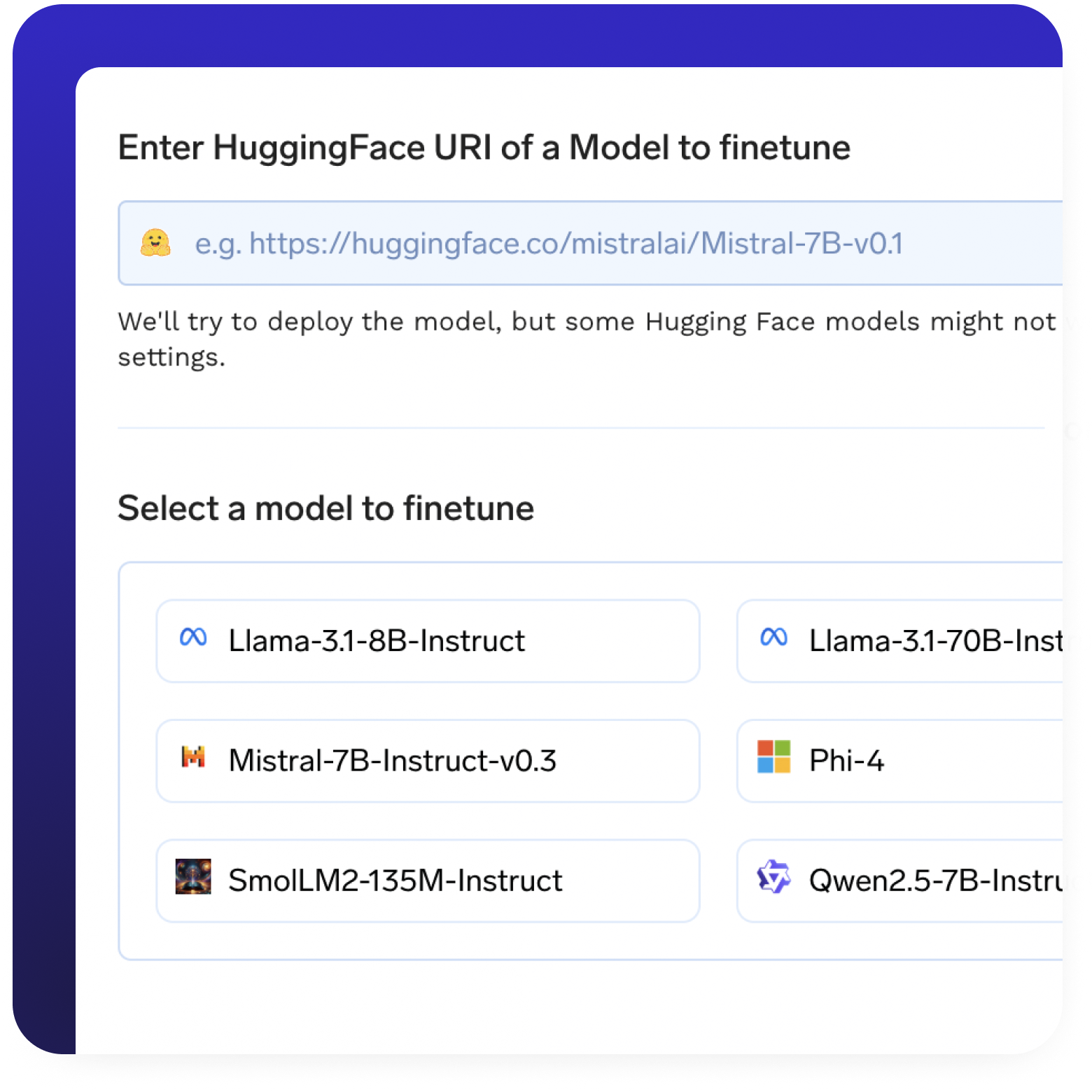

Model Fine-Tuning

- No-code & full-code fine-tuning support on custom datasets.

- LoRA & QLoRA for efficient low-rank adaptation.

- Checkpointing support for seamless training resumption.

- One-click deployment of fine-tuned models with best-in-class model servers.

- Automated training pipelines with built-in experiment tracking.

- Distributed training support for faster, large-scale model optimization.
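
To make the LoRA bullet above concrete, here is a minimal sketch of low-rank adaptation in plain Python. It is illustrative only and does not show how the platform implements fine-tuning; the function names, the 4096 x 4096 layer size, and the rank of 8 are assumptions chosen for the example. The idea is that the frozen weight `W` is left untouched and only two small matrices, `B` and `A`, are trained.

```python
# Hypothetical helpers for illustration; not part of any TrueFoundry API.

def lora_param_counts(d_out: int, d_in: int, rank: int) -> tuple[int, int]:
    """Return (full, lora) trainable-parameter counts for one weight matrix."""
    full = d_out * d_in              # training W (d_out x d_in) directly
    lora = rank * (d_in + d_out)     # training B (d_out x r) and A (r x d_in)
    return full, lora


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha: float, rank: int):
    """Compute W x + (alpha / rank) * B (A x), with W kept frozen."""
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * d for b, d in zip(base, delta)]


# Assumed example sizes: one 4096 x 4096 projection adapted with rank 8.
full, lora = lora_param_counts(4096, 4096, 8)

# B is commonly initialized to zeros, so the adapted layer starts out
# identical to the base layer.
W = [[1, 0], [0, 1]]
A = [[1, 1]]          # rank 1: shape (1 x 2)
B = [[0], [0]]        # shape (2 x 1), zero-initialized
y = lora_forward(W, A, B, [2, 3], alpha=1.0, rank=1)
```

Under these assumed sizes, the adapter trains 65,536 parameters instead of 16,777,216, which is where the efficiency of low-rank adaptation comes from.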

AI Gateway

- Unified API layer to serve and manage models across OpenAI, Llama, Gemini, and others.

- Built-in rate limiting & access control to manage usage securely.

- Real-time usage & cost metrics for better monitoring and optimization.

- Fallback & automatic retries to ensure high availability and reliability.
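
The fallback and retry behavior described above can be sketched generically as follows. This is a minimal illustration under stated assumptions, not the gateway's actual implementation or API: `call_with_fallback`, `flaky_primary`, and `stable_backup` are hypothetical names, and the providers are stub functions rather than real model clients.

```python
import time
from typing import Callable, Optional, Sequence


def call_with_fallback(
    providers: Sequence[Callable[[str], str]],
    prompt: str,
    max_retries: int = 2,
    backoff_s: float = 0.0,
) -> str:
    """Try each provider in order, retrying each one before falling back."""
    last_error: Optional[Exception] = None
    for provider in providers:
        for attempt in range(max_retries + 1):
            try:
                return provider(prompt)
            except Exception as exc:  # a real gateway would filter error types
                last_error = exc
                if attempt < max_retries:
                    # Exponential backoff; the default of 0.0 disables waiting.
                    time.sleep(backoff_s * (2 ** attempt))
    raise RuntimeError("all providers failed") from last_error


# Stub providers standing in for real model endpoints (assumptions).
calls = {"primary": 0, "backup": 0}


def flaky_primary(prompt: str) -> str:
    calls["primary"] += 1
    raise TimeoutError("primary unavailable")


def stable_backup(prompt: str) -> str:
    calls["backup"] += 1
    return f"backup: {prompt}"


result = call_with_fallback([flaky_primary, stable_backup], "hello")
```

In this sketch the primary stub is attempted three times (one call plus two retries) before the request falls through to the backup, so the caller still gets a response.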

Prompt Management

- Experiment and iterate on prompts with a structured testing framework.

- Version-controlled prompt engineering.

Tracing & Guardrails

- Capture and analyze every prompt, response, and token usage to ensure transparency and traceability.

- Log latency, completion rates, and API calls to optimize model performance.

- Integrate with custom guardrails or external tools for PII detection, content moderation, and more.

One-Click RAG Deployment

- Deploys all RAG components in a single click, including VectorDB, embedding models, frontend, and backend.

- Configurable infrastructure to optimize storage, retrieval, and query processing.

- Scalable architecture to support dynamic and growing knowledge bases.

Deploy Any Agent Framework

- Deploy and manage AI agents across multiple frameworks, including LangChain, AutoGen, CrewAI, and custom-built agents.

- Framework-agnostic deployment, ensuring compatibility with any agent-based architecture.

- Support for multi-agent collaboration, enabling agents to interact, share context, and execute tasks autonomously.

Enterprise-Ready

Your data and models are securely housed within your cloud / on-prem infrastructure.

Fully Modular Systems

Integrates with and complements your existing stack

True Compliance

SOC 2, HIPAA, and GDPR standards to ensure robust data protection

Secure By Design

Flexible role-based access control and audit trails

Industry-standard Auth

SSO Integration via OIDC or SAML

Backed by world-class investors

Testimonials

TrueFoundry makes your ML team 10x faster

Deepanshi S

Lead Data Scientist

TrueFoundry simplifies complex ML model deployment with a user-friendly UI, freeing data scientists from infrastructure concerns. It enhances efficiency, optimizes costs, and effortlessly resolves DevOps challenges, proving invaluable to us.

Matthieu Perrinel

Head of ML

The computing cost savings we achieved as a result of adopting TrueFoundry were greater than the cost of the service (and that's without counting the time and headaches it saves us).

Soma Dhavala

Director Of Machine Learning

TrueFoundry helped us save 40-50% of the cloud costs. Most companies give you a tool and leave you but TrueFoundry has given us excellent support whenever we needed them.

Rajesh Chaganti

CTO

Using the TrueFoundry platform, we were able to reduce our cloud costs significantly. We were able to seamlessly transition from an AMI-based system to a Docker-Kubernetes-based architecture within a few weeks.

Sumit Rao

AVP of Data Science

TrueFoundry has been pivotal in our Machine Learning use cases. They have helped our team realize value faster from Machine Learning.

Vivek Suyambu

Senior Software Engineer

TrueFoundry makes open-source LLM deployment and fine-tuning effortless. Its intuitive platform, enriched with a feature-packed dashboard for model management, is complemented by a support team that goes the extra mile.

9.9

Quality of Support

GenAI infra: simpler, faster, cheaper

Trusted by 30+ enterprises and Fortune 500 companies
