We are back with another episode of True ML Talks. In this, we dive deep into Slack's Recommend API, SlackGPT and LLM's and we are speaking with Katrina Ni.

Katrina Ni is a senior ML engineer as well as a lead member of the technical staff at Slack. She has been leading the ML team there, worked with the recommend API, spam detection, across product functionalities.

📌

Our conversations with Katrina will cover below aspects:

- Use Cases of ML and Gen AI at Slack

- Infrastructure at Slack with a Focus on ML

- Training, Validation, Deployment and Monitoring for Recommend API at Slack

- Measuring Business Impact for the Recommend API

- Impact of Customer Privacy on Training Infrastructure

- Building Slack GPT and Challenges in Training LLMS

- The Future of Prompt Engineering in Language Models

- Staying Updated with the Advancements in LLMs

Watch the full episode below:

Use Cases of ML and Gen AI @ Slack

- Personalized Channel Recommendations: ML and Gen AI enable personalized channel recommendations at Slack. New users joining a team receive suggestions for relevant channels based on relationships and data-driven insights, enhancing the user experience.

- Integrating Recommender API: Slack's integrated Recommender API offers service recommendations across product function teams. The sophisticated recommender engine seamlessly caters to users of all sizes, despite recommendations not being the primary business model.

- Detecting and Scanning Malware: ML and Gen AI also aid in security improvements at Slack by detecting and scanning malware links, creating a safer communication environment.

Infrastructure at Slack with a Focus on ML

Slack relies on skilled data engineering teams and robust data warehouse solutions. They use Airflow for job scheduling and big data processing, providing vital support for ML tasks.

Slack's infrastructure is based on Kubernetes, supporting their microservices architecture. Their cloud team developed a Kubernetes-based framework for easy microservice deployment, offering flexibility and scalability.

To cater to their specific needs, Slack developed a custom feature store instead of using existing solutions. This custom store efficiently manages and utilizes features for ML applications.

A dedicated team at Slack built an orchestration layer atop Kubernetes, streamlining microservice deployment and integrating with internal services like console. While it enhances Kubernetes usage, challenges remain for applications heavily dependent on databases.

Recommend API at Slack

During Slack's early days, efforts were made to develop effective embedding and faction mechanisms. These involved processing vast amounts of data to understand user interactions and generate numerical embeddings for users and channels. One outcome was an embedding service based on user activities, with another based on messages, though not widely adopted at the time.

Later, the focus shifted to using these embeddings to build a more user-friendly product. The concept of recommendations emerged, identifying areas where suggestions could benefit users, such as recommending channels or users. This led to the development of the Recommend API, which integrated with various parts of Slack's platform and utilized existing embeddings to fetch relevant data based on user activities. The API also incorporated the feature store, enriching data with additional features and applying filtering and scoring mechanisms.

As development progressed, the Recommend API was integrated into different features within Slack, allowing the platform to recommend relevant channels or users to its users, streamlining their experience and boosting engagement.

You can read more about SlackGPT in the blog given below.

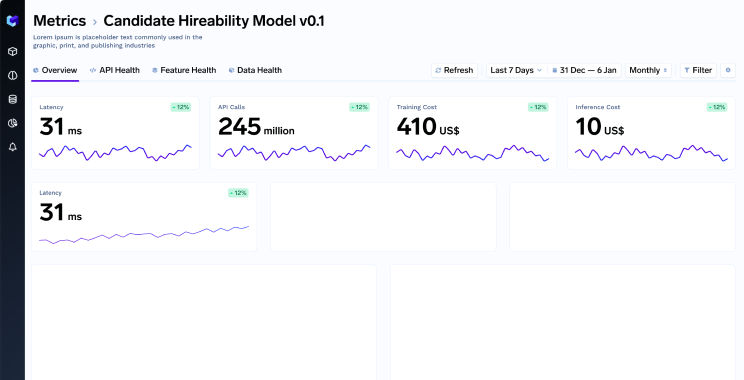

Here is an image from the same blog that give a really good overview of Slack's Recommend API Infrastructure

Model Training Pipeline and Validation Workflow for the Recommend API

By leveraging Kubernetes, Airflow, and a hierarchical approach to training and validation, Slack ensures the Recommend API's models are effective and reliable, providing personalized recommendations to users. AB testing guarantees rigorous evaluation of model performance in an online environment before deployment, contributing to a robust and data-driven ML operations framework at Slack.

1. Hierarchical Corpus and Use Cases: The model training pipeline at Slack follows a hierarchical approach. It begins with a hierarchical corpus comprising channels or users, with multiple use cases like daily digest, composer, or channel browser, catering to specific functionalities within Slack.

2. Model Selection and Experimentation: For each use case, various models are trained and experimented with, starting with large regression models and exploring other algorithms like XGBoost or LightGBM. Model parameters are fine-tuned, and different data variations are experimented with to identify the best-suited model.

3. Training Pipeline with Kubernetes and Airflow: The training pipeline is orchestrated using Kubernetes and Airflow. Kubernetes clusters handle model training tasks efficiently, with Airflow managing the workflow from data gathering to training various models for different use cases.

4. Logging and Offline Metrics: During training, the team logs offline metrics and model parameters for future monitoring and analysis.

5. Seamless Model Addition and Management: The architecture allows seamless addition of new sources or models for training with minimal code changes.

6. Validation and AB Testing: Rigorous validation is conducted before deploying models to production, comparing offline metrics like AUC for classification or ranking models. AB testing is extensively used, selecting models with better online metrics for deployment.

7. Internal AB Testing Framework: Slack has its internal AB testing framework, used by the ML team and product teams when launching new features. The framework enables data-driven decisions by comparing model performance on various business metrics.

Model Deployment and Scale for the Recommend API

Slack's ML models are deployed in Kubernetes clusters using GRPC as the interface for handling requests. GRPC offers safety, efficiency, and faster processing compared to JSON-based APIs like FastAPI. Despite its restrictions, GRPC ensures robust and efficient communication between the API and models, contributing to a smooth deployment process.

The deployment strategy includes leveraging Kubernetes' auto-scaling capabilities, allowing models to adjust resource usage based on demand. With a vast user base, Slack handles a significant number of requests, averaging around 100 requests per second.

Implementing GRPC and setting up the serving cluster microservice required focused effort, but it proved effective in handling scale and optimizing model performance.

Model Monitoring and Automated Retraining for the Recommend API

1. Tracking Offline and Online Metrics: Slack's model monitoring pipeline efficiently tracks offline and online metrics. Offline metrics like accuracy, F1, and ROC are logged, and dedicated dashboards provide visualization. Online metrics, including acceptance rate, are also monitored through a separate dashboard.

2. Anomaly Detection and Feature Drift: Slack utilizes its own anomaly detection framework to monitor feature drift. Regular model retraining (daily training and weekly deployments) reduces the impact of feature drift, making it less of a concern.

3. Automated Retraining Pipeline: The retraining pipeline is automated using Airflow, ensuring models stay up-to-date with the latest data.

4. Automated Dashboards for Visualization: Data engineers have developed user-friendly dashboards that visualize model performance and metrics. The dashboards automatically update with new data, facilitating tracking of new use cases.

5. Effortless Integration of New Use Cases: Adding new use cases to the monitoring and retraining pipeline is effortless, thanks to the automated integration framework set up by data engineers.

By leveraging automation and regular retraining, Slack's ML models are continuously monitored, optimized, and reliable in production. The robust MLOps framework contributes to seamless and efficient model deployments for Slack's large user base.

Measuring Business Impact for the Recommend API

Slack employs various metrics to evaluate the business impact of the Recommend API, gaining valuable insights into its performance and effectiveness. The key metrics used are:

- Acceptance Rate: Monitors the online performance of the API by measuring the rate at which users accept or interact with recommended channels or users. A higher acceptance rate indicates better user experience and engagement.

- User Clicks and Acceptance: Tracks user clicks on recommended channels or users and their acceptance of suggested connections. These metrics reveal user engagement with recommendations and meaningful interactions within the platform.

- Custom Metrics for Product Teams: Different product teams use custom metrics tailored to their specific goals and objectives. These metrics could include user joiners, team success, and top-priority stack metrics.

- AB Testing: Crucial for evaluating model performance and variants of the API. It allows data-driven decisions on selecting the most effective model for deployment.

- Holistic Business Impact Assessment: Combines insights from multiple metrics to get a comprehensive understanding of the API's effectiveness in achieving objectives and delivering value to users.

By analyzing these metrics and optimizing the Recommend API based on user feedback, Slack ensures the API significantly improves user experience, fosters connections, and drives positive business outcomes within the platform.

Impact of Customer Privacy on Training Infrastructure

Slack's commitment to customer privacy significantly influences the training infrastructure's architecture and data handling practices. The stringent focus on data privacy is reflected in various aspects of the MLOps stack, ensuring the secure and compliant handling of sensitive information. Here's how customer privacy impacts Slack's training infrastructure:

- Careful Handling of Sensitive Data: Slack employs robust data privacy measures to handle sensitive information, such as Personally Identifiable Information (PII). Data engineering teams work to remove PII and implement retention policies to comply with privacy regulations.

- Restricted Access to Sensitive Data: Access to datasets containing sensitive information is limited, housed in the segregated data warehouse named Manitoba. This restricted access enhances data security, though it can present challenges during model debugging and analysis.

- Debugging Challenges and Model Interactions: Restricted access to sensitive data may complicate model debugging, requiring alternative approaches and monitoring tools to ensure model behavior accuracy.

- Data Privacy in Model Training: Slack ensures compliance with data privacy policies when training models, especially for tasks like spam detection, which may involve interacting with sensitive information.

- Architectural Adaptations for Data Privacy: To maintain data privacy, Slack makes specific architectural choices, incorporating secure data storage, access controls, and data encryption practices.

Building Slack GPT and Challenges in Training Large Language Models

Slack introduced Slack GPT, a powerful language generation AI for summarization to address information overload on the platform, enhancing user productivity.

Building Slack GPT presented challenges and required infrastructure innovations for training large language models. This involved setting up clusters, using Kubernetes, and exploring parallel processing techniques. To improve summarization quality, the team implemented prompt tuning, carefully crafting prompts to influence the model's behavior and generate coherent summaries.

Systematic evaluation of prompts was crucial, and the team developed tools to assess prompt effectiveness, enabling experimentation with different prompts. Bridging the gap between offline and online evaluation ensured that the quality observed during offline exploration translated seamlessly to the online user experience.

As Slack invests in AI capabilities, ML engineers play a pivotal role in optimizing user experiences through exploration, evaluation, and systematic improvement of AI-driven features like Slack GPT.

You can read more about SlackGPT from the link below.

The Future of Prompt Engineering in Language Models

Prompt engineering is crucial for language models, impacting response quality. As of now, it involves trial and error, lacking a standardized approach. Future possibilities include:

- Standardization and Best Practices: Emergence of standardized practices and guidelines to optimize prompts for specific use cases.

- Automated Prompt Optimization: AI-driven systems refining prompts based on analysis and user feedback, streamlining the process.

- Enhanced Experimentation Interfaces: User-friendly platforms for efficient prompt manipulation and comparison across models.

- Incorporating User Feedback: Real-time insights guiding prompt adjustments for adaptive and personalized responses.

Prompt engineering is an actively explored area, aiming to strike a balance between manual intervention and automation, shaping the future of AI-powered communication.

Staying Updated with the Advancements in LLMs

Staying updated with the continuous advancements in large language models (LLMs) is essential for data scientists and ML engineers.

Many professionals in the AI and NLP community use Twitter as a valuable platform to access real-time information about new research, model releases, and breakthroughs in the LLM space. Following influential researchers and practitioners on Twitter allows them to quickly learn about the latest trends and updates in the field.

Additionally, professionals stay informed through interactions with their colleagues. Within their teams, important news, such as the release of LLMs like Llama-2 and its integration with platforms like SageMaker, is shared, creating a collaborative learning environment.

While some data scientists and ML engineers may focus on reading research papers, others prioritize practical use cases. They are more inclined to explore how new developments can directly impact their work and improve the applications of LLMs in real-world scenarios.

Read our previous blogs in the TrueML Series

Keep watching the TrueML youtube series and reading all the TrueML blog series.

TrueFoundry is a ML Deployment PaaS over Kubernetes to speed up developer workflows while allowing them full flexibility in testing and deploying models while ensuring full security and control for the Infra team. Through our platform, we enable Machine learning Teams to deploy and monitor models in 15 minutes with 100% reliability, scalability, and the ability to roll back in seconds - allowing them to save cost and release Models to production faster, enabling real business value realisation.