AI inferencing powers real-time decision-making in today’s intelligent systems. It is the stage where a trained machine learning model is used to make predictions or generate responses based on new input data. Whether it is a chatbot responding to users, a self-driving car detecting objects, or a recommendation engine suggesting products, inference is what makes AI usable in the real world.

While model training builds the foundation, inferencing is where AI systems are deployed at scale. This article explains what AI inferencing is, how it works, how it differs from training, and the platforms that support it in production environments.

What is AI Inferencing?

AI inferencing is the process of using a pre-trained machine learning model to make predictions or generate outputs from new, unseen data. It is the operational phase of an AI system where the model is applied in real-world scenarios, such as responding to user queries, analyzing sensor data, or classifying images.

Unlike training, which involves learning patterns from large datasets by adjusting model parameters, inferencing is a forward-pass operation. The model takes input data, processes it through its layers using fixed weights, and produces an output. This output can be a class label, a generated sentence, a bounding box, or a score, depending on the use case.
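To make the idea of a forward pass with fixed weights concrete, here is a minimal sketch in plain Python. The network shape and the weight values are invented for illustration; a real system would load them from a trained checkpoint.

```python
import math

# Hypothetical fixed weights for a tiny network: 2 inputs, 2 hidden units, 1 output.
W1 = [[0.5, -0.2], [0.3, 0.8]]  # hidden layer weights, one row per hidden unit
B1 = [0.0, 0.1]                 # hidden layer biases
W2 = [1.0, -1.5]                # output layer weights
B2 = 0.2                        # output layer bias


def relu(x: float) -> float:
    return max(0.0, x)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def forward(features: list[float]) -> float:
    """Run one forward pass; the weights are read but never modified."""
    hidden = [
        relu(sum(w * x for w, x in zip(row, features)) + b)
        for row, b in zip(W1, B1)
    ]
    logit = sum(w * h for w, h in zip(W2, hidden)) + B2
    return sigmoid(logit)  # a score between 0 and 1


score = forward([1.0, 2.0])
print(f"score={score:.3f}")
```

Because nothing in `forward` updates the weights, calling it twice with the same input returns the same score.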

Inferencing can happen in various environments, including cloud servers, on-premise data centers, mobile devices, and edge hardware. Each environment brings different requirements for latency, compute power, and energy efficiency. For example, a language model serving millions of users must keep response times low enough to feel interactive, typically starting to return output within a fraction of a second, while an on-device health monitor needs to work with low power and limited memory.

Modern AI applications often depend on inference pipelines that involve preprocessing inputs, passing them through the model, and post-processing the outputs before returning results to the end-user or downstream systems.

AI inferencing is the bridge between model development and real-world application. It is where machine learning becomes useful, responsive, and actionable in production systems. Understanding how inferencing works is critical for building AI solutions that are both efficient and scalable.

AI Inference vs Training: What’s the Difference?

AI training and inference are two distinct phases in the machine learning lifecycle, each serving a different purpose.

Training is the process of teaching a model to recognize patterns by feeding it large volumes of data, which may be labeled (supervised learning) or unlabeled (self-supervised learning, as in most large language model pretraining). During training, the model's parameters are adjusted through iterative computations, typically using optimization techniques like gradient descent. This phase is resource-intensive and requires powerful hardware, long runtimes, and access to large datasets.

Inference, on the other hand, is what happens after training is complete. It is the deployment phase where the trained model is used to make predictions on new, unseen data. Inference involves a forward pass through the model without any changes to the internal weights. It is designed to be fast, efficient, and capable of running in real-time environments.

While training is often performed in controlled, offline environments using GPUs or specialized accelerators, inference needs to be optimized for production constraints like low latency, minimal memory usage, and cost-efficiency. Inference may also need to support scaling across multiple users, devices, or geographic regions.

Another key distinction is frequency. Training is done periodically or once, whereas inference happens continuously in response to user input or real-time events. As AI systems move from research to production, inference becomes the primary operational concern.
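The contrast between the two phases can be illustrated with a toy one-variable linear model. This is only a sketch: the data, learning rate, and epoch count are made up, and real training involves far larger models and datasets.

```python
# Toy data following y = 2x + 1 (made up for illustration).
DATA = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]


def train(data, lr=0.05, epochs=2000):
    """Training: repeatedly adjust w and b with gradient descent on squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(x, w, b):
    """Inference: a single forward pass with frozen parameters."""
    return w * x + b


w, b = train(DATA)                 # iterative and expensive, run once or periodically
prediction = predict(10.0, w, b)   # cheap, run on every request
print(round(w, 3), round(b, 3), round(prediction, 3))
```

`train` loops over the data thousands of times and changes the parameters on every step, while `predict` is a single arithmetic expression that leaves them untouched.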

How Does AI Inference Work?

AI inference begins when input data is passed through a trained model to generate an output. This process is typically organized into a pipeline that includes preprocessing, model execution, and post-processing. Each stage plays a role in ensuring fast, accurate, and usable predictions.

The first step is preprocessing, where raw inputs are converted into a format compatible with the model. For text, this may involve tokenization. For images or audio, it can include resizing, normalization, or filtering.

Next is the forward pass through the model. The input is processed through the network’s layers using fixed weights learned during training. The model produces raw output values that represent predictions or probabilities, depending on the task.

Then comes post-processing, which converts raw model outputs into meaningful results. This could include converting logits to class labels, decoding token sequences into readable text, or formatting results for a user interface or downstream API.

- Preprocessing: Cleans and formats input data for the model

- Model Execution: Runs the input through the trained model to produce output

- Post-processing: Translates output into usable predictions or responses
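The three stages above can be sketched as three small functions chained together. The keyword weights and labels here are hypothetical stand-ins for a real tokenizer and model.

```python
import math

# Hypothetical keyword weights standing in for a trained sentiment model.
WEIGHTS = {"great": 1.5, "good": 1.0, "bad": -1.0, "terrible": -1.5}
LABELS = ["negative", "positive"]


def preprocess(text: str) -> list[str]:
    """Lowercase, split on whitespace, and strip simple punctuation."""
    return [tok.strip(".,!?") for tok in text.lower().split()]


def run_model(tokens: list[str]) -> list[float]:
    """Stand-in for model execution: returns one logit per label."""
    score = sum(WEIGHTS.get(tok, 0.0) for tok in tokens)
    return [-score, score]


def postprocess(logits: list[float]) -> dict:
    """Convert logits to probabilities with softmax and pick the top label."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    return {"label": LABELS[best], "confidence": probs[best]}


def infer(text: str) -> dict:
    return postprocess(run_model(preprocess(text)))


result = infer("The battery life is great!")
print(result)
```

Keeping the stages as separate functions makes it possible to test and optimize each one independently, for example by swapping `run_model` for a call to a real inference runtime.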

Inference can be run synchronously or asynchronously, depending on the system’s architecture. In production systems, it is often paired with monitoring tools, logging, and rate-limiting to ensure performance and stability under real-world usage.
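As a rough illustration of the two calling styles, the sketch below simulates model latency with a short sleep. The function names are hypothetical, and the semaphore only caps how many requests run concurrently; it is a simple stand-in for the rate-limiting a production system would apply.

```python
import asyncio
import time


def predict_sync(x: float) -> float:
    """Blocking call: the caller waits until the result is ready."""
    time.sleep(0.01)  # simulated model latency
    return x * 2


async def predict_async(x: float, limiter: asyncio.Semaphore) -> float:
    """Non-blocking call: many requests can be in flight at once."""
    async with limiter:  # caps the number of concurrent requests
        await asyncio.sleep(0.01)  # simulated model latency
        return x * 2


async def handle_many(inputs: list[float], max_concurrent: int = 4) -> list[float]:
    limiter = asyncio.Semaphore(max_concurrent)
    return await asyncio.gather(*(predict_async(x, limiter) for x in inputs))


sync_results = [predict_sync(x) for x in [1.0, 2.0, 3.0]]
async_results = asyncio.run(handle_many([1.0, 2.0, 3.0]))
print(sync_results, async_results)
```

Both paths return the same values. The difference is that the synchronous loop handles one request at a time, while the asynchronous version overlaps the waiting time of several requests.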

AI inference is designed for efficiency and responsiveness, enabling real-time applications like virtual assistants, fraud detection engines, recommendation systems, and many more.

Components of AI Inferencing

AI inferencing relies on a set of core components that work together to deliver fast and accurate predictions. These components span hardware, software, and infrastructure and are crucial for running models efficiently in production environments.

Trained Model

At the heart of inferencing is the trained model itself. This could be a neural network such as a transformer or a convolutional network, or a classical model such as a decision tree, depending on the use case. The model contains the learned weights and architecture necessary to process input data and produce output.

Inference Engine or Runtime

The inference engine is responsible for executing the trained model. It takes the input, performs the forward pass, and returns the result. Popular inference engines include ONNX Runtime, TensorRT, TFLite, and vLLM. These runtimes are optimized for specific hardware and can improve latency and throughput.

Hardware Infrastructure

Inference performance heavily depends on the underlying hardware. GPUs are widely used for deep learning models, while CPUs or specialized chips like TPUs and AWS Inferentia are used in specific environments. Hardware must be selected based on workload characteristics, latency requirements, and cost constraints.

Serving Layer (API/Container)

The serving layer exposes the model as an API endpoint, allowing applications to send requests and receive predictions. This layer typically includes containers, load balancers, and autoscaling components to manage traffic and ensure uptime.
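A bare-bones version of such an endpoint can be written with Python's standard library alone. This is a sketch for illustration, not a production server: the `/predict` route, the JSON payload shape, and the averaging "model" are all assumptions, and real deployments typically sit behind containers, load balancers, and autoscalers.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features: list[float]) -> float:
    """Placeholder model: replace with a real inference call."""
    return sum(features) / len(features)


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":  # hypothetical route name
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length))
        body = json.dumps({"prediction": predict(payload["features"])}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):  # silence per-request logging for the demo
        pass


def call_endpoint(port: int, features: list[float]) -> dict:
    """Client side: send features as JSON and parse the JSON reply."""
    conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
    try:
        conn.request(
            "POST",
            "/predict",
            body=json.dumps({"features": features}),
            headers={"Content-Type": "application/json"},
        )
        return json.loads(conn.getresponse().read())
    finally:
        conn.close()


server = HTTPServer(("127.0.0.1", 0), PredictHandler)  # port 0 picks a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
response = call_endpoint(server.server_port, [1.0, 2.0, 3.0])
server.shutdown()
server.server_close()
print(response)
```

The application only sees an HTTP contract (JSON in, JSON out), so the model behind `predict` can be replaced or scaled without changing client code.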

Monitoring and Observability Tools

To ensure reliability and performance, inference systems include monitoring tools that track latency, error rates, resource usage, and request volumes. Observability is key to identifying bottlenecks, debugging issues, and optimizing performance.
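As a small illustration of what such tooling measures, the sketch below wraps a prediction function to record latency and errors, then summarizes them as percentiles. The in-memory `metrics` dictionary and the failure condition are hypothetical; a production system would export these values to a dedicated monitoring backend.

```python
import statistics
import time
from functools import wraps

# In-process metric store, used here only for illustration.
metrics = {"latencies_ms": [], "errors": 0}


def monitored(fn):
    """Record wall-clock latency and count failures for each call."""
    @wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return fn(*args, **kwargs)
        except Exception:
            metrics["errors"] += 1
            raise
        finally:
            metrics["latencies_ms"].append((time.perf_counter() - start) * 1000)
    return wrapper


@monitored
def predict(x: float) -> float:
    if x < 0:
        raise ValueError("negative input")  # made-up failure condition
    return x ** 0.5


def summary() -> dict:
    cuts = statistics.quantiles(metrics["latencies_ms"], n=100)  # 99 cut points
    return {
        "requests": len(metrics["latencies_ms"]),
        "errors": metrics["errors"],
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
    }


for value in [4.0, 9.0, 16.0, -1.0]:
    try:
        predict(value)
    except ValueError:
        pass

report = summary()
print(report)
```

Tracking percentiles such as p95 rather than only the average is a common choice because a small share of slow requests can hurt user experience without moving the mean much.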

- A successful inference pipeline brings together the model, engine, hardware, serving layer, and monitoring.

- Each component must be optimized to ensure responsiveness, scalability, and cost-efficiency in production.

Types of AI Inference

AI inference can take many forms depending on the model architecture, application, and deployment environment. Understanding the types of inference helps in selecting the right strategy for specific use cases and performance goals.

Real-Time (Online) Inference: This type of inference is performed instantly in response to a user request or external event. It is commonly used in chatbots, virtual assistants, fraud detection systems, and recommendation engines. Real-time inference demands low latency and high availability, often requiring GPU acceleration and autoscaling.

Batch Inference: Batch inference processes large volumes of data at scheduled intervals rather than instantly. It is used in applications like customer segmentation, credit scoring, and content tagging. While it is less time-sensitive than real-time inference, batch processing needs to be optimized for throughput and cost-efficiency.
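A batch job can be sketched as a loop that splits a dataset into fixed-size chunks and scores each chunk in one call. The scoring function and batch size below are placeholders chosen for illustration.

```python
from itertools import islice
from typing import Iterable, Iterator


def batched(items: Iterable[float], batch_size: int) -> Iterator[list[float]]:
    """Yield successive fixed-size chunks; the last chunk may be smaller."""
    iterator = iter(items)
    while chunk := list(islice(iterator, batch_size)):
        yield chunk


def predict_batch(batch: list[float]) -> list[float]:
    """Placeholder model that scores a whole batch in one call."""
    return [round(0.1 * x, 2) for x in batch]


def run_batch_job(records: Iterable[float], batch_size: int = 3) -> list[float]:
    results: list[float] = []
    for batch in batched(records, batch_size):
        results.extend(predict_batch(batch))
    return results


scores = run_batch_job(range(7))
print(scores)
```

Larger batches usually improve throughput on accelerators at the cost of more memory per call, so the batch size is typically tuned per model and hardware.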

Edge Inference: Edge inference runs AI models directly on edge devices such as smartphones, IoT sensors, or embedded systems. Because data does not have to travel to a remote server, it avoids network round-trip latency and reduces the need for constant cloud connectivity. Edge inference is critical for use cases like autonomous vehicles, wearable health monitors, and industrial automation.

Streaming Inference: This involves processing continuous streams of data in near real-time. It is used in video analytics, anomaly detection, and voice transcription. Streaming inference must handle time-sensitive data with consistent performance.
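One simple way to picture streaming inference is a generator that scores each reading as it arrives, using only a small rolling window of recent values. The rolling-mean rule, window size, and threshold below are assumptions for illustration rather than a recommended detector.

```python
from collections import deque
from typing import Iterable, Iterator


def detect_anomalies(
    readings: Iterable[float], window: int = 5, threshold: float = 5.0
) -> Iterator[tuple[int, float]]:
    """Yield (index, value) when a reading differs from the mean of the
    previous `window` readings by more than `threshold`."""
    recent: deque = deque(maxlen=window)
    for index, value in enumerate(readings):
        if len(recent) == window:
            mean = sum(recent) / window
            if abs(value - mean) > threshold:
                yield index, value
        recent.append(value)  # the oldest reading drops out automatically


stream = [10.0, 10.2, 9.9, 10.1, 10.0, 10.3, 25.0, 10.1, 9.8]
alerts = list(detect_anomalies(stream))
print(alerts)
```

Because the function keeps only the last few readings in memory and yields results as it goes, it can run over an unbounded stream without waiting for the data to end.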

- Real-time and edge inference prioritize low latency and responsiveness.

- Batch and streaming inference optimize for scale, throughput, and data continuity.

Each type of inference serves different business needs and comes with its own trade-offs in performance, infrastructure, and complexity. Choosing the right approach depends on use-case requirements, resource constraints, and user expectations.

Challenges of AI Inference

Deploying AI inference at scale comes with several challenges that impact performance, reliability, and cost. One of the most common issues is latency, especially in real-time systems where even slight delays affect user experience. Ensuring low-latency responses while maintaining accuracy is a constant trade-off.

Resource optimization is another major challenge. Large models require significant computing power, often demanding GPUs or specialized accelerators. Managing these resources efficiently, especially in multi-tenant or high-traffic environments, becomes complex and expensive.

Scalability is also critical. Inference systems must handle spikes in traffic, autoscale quickly, and maintain consistent performance under varying loads. Additionally, observability is essential to detect bottlenecks, failures, or performance degradation.
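A back-of-the-envelope capacity estimate helps show why traffic spikes matter. The sketch below uses Little's law (requests in flight = arrival rate x latency); the traffic figures, per-replica concurrency, and 70% utilization target are made-up assumptions, and real autoscalers use richer signals.

```python
import math


def replicas_needed(
    requests_per_second: float,
    avg_latency_seconds: float,
    concurrency_per_replica: int,
    target_utilization: float = 0.7,
) -> int:
    """Estimate replica count from Little's law: in-flight = rate x latency."""
    in_flight = requests_per_second * avg_latency_seconds
    usable_slots = concurrency_per_replica * target_utilization
    return max(1, math.ceil(in_flight / usable_slots))


baseline = replicas_needed(200, 0.25, 8)   # steady traffic
spike = replicas_needed(1000, 0.25, 8)     # five times the traffic
print(baseline, spike)
```

With these numbers, a fivefold increase in traffic needs roughly five times as many replicas, which is why the speed of autoscaling directly affects latency during spikes.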

Security, version control, and deployment consistency further complicate inference in enterprise environments.

- Balancing speed, cost, and scale is the core challenge in inference systems.

- Without the right infrastructure, AI models can underperform in production.

Best AI Inference Platforms

Choosing the right platform for AI inference is critical to achieving performance, scalability, and cost-efficiency in production. Whether you're deploying large language models, computer vision pipelines, or custom transformers, the underlying infrastructure can make or break the user experience. Below are some of the most reliable and developer-friendly platforms that help teams serve AI models at scale with minimal operational overhead.

1. TrueFoundry

TrueFoundry is one of the most advanced AI inference platforms available for deploying and scaling large language models in production. Its Kubernetes-native architecture is optimized for performance, offering a unified AI Gateway that supports 250+ models across vLLM, TGI, and bring-your-own endpoints. This allows teams to serve models like Mistral, LLaMA, Claude, and custom fine-tuned variants through a single OpenAI-compatible API. TrueFoundry abstracts the complexity of infrastructure with intelligent batching, token streaming, KV caching, and GPU autoscaling, which helps keep latency low even under high concurrency.

Designed for enterprise-scale GenAI systems, TrueFoundry offers prompt versioning, fallback logic, and model routing out of the box. Teams get fine-grained control with token-level rate limiting, detailed observability into latency and usage, and real-time prompt logging. With built-in support for SSO, RBAC, CLI automation, and integration with vector databases, it becomes the go-to choice for building AI copilots, assistants, and RAG pipelines securely and at scale.

Top Inferencing Features:

- High-performance vLLM and TGI support for token streaming, KV caching, and optimized batch serving

- Multi-model routing and fallback logic for intelligent, resilient response handling

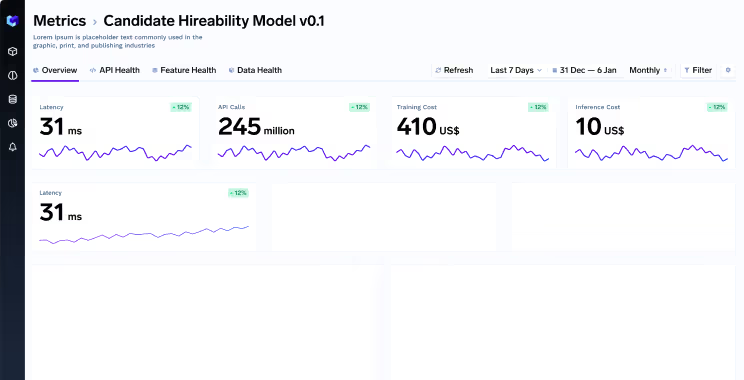

- Real-time observability including latency tracking, token usage, and prompt-response logging via dashboards and APIs
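The general idea behind model routing with fallback can be sketched in a few lines. This is a generic illustration and not TrueFoundry's API: the backend functions, their names, and the simulated timeout are all hypothetical.

```python
from typing import Callable

Backend = Callable[[str], str]


def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary model timed out")  # simulated outage


def stable_fallback(prompt: str) -> str:
    return f"[fallback] echo: {prompt}"


def route_with_fallback(
    prompt: str, backends: list[tuple[str, Backend]]
) -> tuple[str, str]:
    """Try each backend in priority order and return (backend_name, response)."""
    errors: list[str] = []
    for name, backend in backends:
        try:
            return name, backend(prompt)
        except Exception as exc:  # a real gateway would catch narrower error types
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all backends failed: " + "; ".join(errors))


used, reply = route_with_fallback(
    "hello",
    [("primary", flaky_primary), ("fallback", stable_fallback)],
)
print(used, reply)
```

The caller gets a response as long as at least one backend in the list is healthy, and the collected error messages make it easier to see why earlier backends were skipped.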

2. Together AI

Together AI is a cloud-native GenAI platform that offers hosted inference APIs for open-source LLMs like Mistral, Mixtral, and LLaMA 2. It is built for performance at scale and offers low-latency, high-throughput APIs designed for production use. Together AI is ideal for teams that want powerful models without the overhead of infrastructure management. It also supports fine-tuning and custom deployment options through its SDK.

Top Features:

- Hosted LLM inference with minimal setup

- Fine-tuning support for custom model variants

- Optimized for low-latency, high-throughput workloads

3. DeepInfra

DeepInfra provides a platform to serve open-source AI models via scalable APIs. It allows developers to deploy and access models without managing infrastructure, offering both hosted and bring-your-own-model options. DeepInfra supports a wide range of models and emphasizes API simplicity and cost efficiency. It’s a good choice for early-stage startups or teams prototyping AI features quickly.

Top Features:

- Simple API access to hosted models

- Support for custom model deployments

- Cost-effective and developer-friendly infrastructure

4. Hugging Face Inference Endpoints

Hugging Face offers managed inference endpoints for any model hosted on its platform. Developers can deploy models from the Hugging Face Hub to scalable, production-grade infrastructure with just a few clicks. It supports autoscaling, security configurations, and custom Docker containers. Hugging Face endpoints are well-suited for teams already using its ecosystem for model development and experimentation.

Top Features:

- One-click deployment from Hugging Face Hub

- Autoscaling and traffic handling built-in

- Custom containers and private model support

Conclusion

AI inference is the cornerstone of real-world AI applications, enabling models to deliver value through fast and accurate predictions. While training builds the intelligence, inference brings it to life in production. As AI adoption grows, optimizing inference for speed, cost, and scale becomes increasingly important. With the right tools and infrastructure, teams can deploy powerful models efficiently and reliably. Platforms like TrueFoundry, Together AI, DeepInfra, and Hugging Face make it easier to operationalize AI without deep DevOps overhead. Understanding the inference landscape is essential for building AI systems that are not just smart but also scalable and production-ready.