LLM Locust: A Tool for Benchmarking LLM Performance

What is LLM Benchmarking?

LLM Benchmarking is the process of evaluating how efficiently a Large Language Model (LLM) inference server performs under load. It goes beyond traditional performance testing by focusing on real-time response characteristics that directly impact user experience and system scalability.

Here are some of the key metrics involved:

- Time to First Token (TTFT): The delay between sending a request and receiving the first token of the response. This reflects the model’s initial processing latency.

- Output Tokens per Second (tokens/s): Measures how quickly the model generates response tokens, indicating generation speed and system responsiveness.

- Inter-Token Latency: The time between consecutive tokens in a streaming response. Lower values indicate smoother, more natural-feeling output in real-time applications.

- Requests per Second (RPS): The number of inference requests an LLM server can handle per second, an essential measure of throughput.
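To make these definitions concrete, here is a minimal sketch that derives TTFT, mean inter-token latency and output tokens per second from the arrival times of one streamed response, plus RPS over a time window. It assumes you already have a request start time and one timestamp per output token, all in seconds on the same clock. The function names are illustrative, not part of LLM Locust, and tools differ on conventions; here tokens/s is output tokens divided by the full response time.

```python
from dataclasses import dataclass


@dataclass
class StreamMetrics:
    ttft: float               # seconds from request start to first token
    mean_inter_token: float   # mean gap between consecutive tokens, seconds
    tokens_per_second: float  # output tokens / (last token time - request start)


def stream_metrics(request_start: float, token_times: list[float]) -> StreamMetrics:
    """Derive per-request streaming metrics from token arrival timestamps."""
    if not token_times:
        raise ValueError("response produced no tokens")
    ttft = token_times[0] - request_start
    # Gaps between each pair of consecutive tokens.
    gaps = [later - earlier for earlier, later in zip(token_times, token_times[1:])]
    mean_gap = sum(gaps) / len(gaps) if gaps else 0.0
    total = token_times[-1] - request_start
    tps = len(token_times) / total if total > 0 else float("inf")
    return StreamMetrics(ttft, mean_gap, tps)


def requests_per_second(completion_times: list[float], start: float, end: float) -> float:
    """Requests completed in [start, end) divided by the window length."""
    done = sum(1 for t in completion_times if start <= t < end)
    return done / (end - start)


m = stream_metrics(0.0, [0.5, 0.75, 1.0, 1.5])
rps = requests_per_second([0.2, 0.9, 1.4, 2.5], 0.0, 2.0)
print(m, rps)
```

In this usage example the first token arrives after 0.5 s, and three of the four requests finish inside the 2-second window, giving 1.5 RPS.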

Tracking and analyzing these metrics is critical for:

- Comparing LLM providers

- Optimizing deployments across CPUs, GPUs, or specialized accelerators

- Fine-tuning server configurations for latency-sensitive applications

That’s where LLM Locust comes in.

Why Traditional Load Testing Tools Like Locust Fall Short for LLM Benchmarking (And How LLM Locust Fixes It)

As LLMs continue to power more real-time and interactive applications, benchmarking their performance accurately is more important than ever. While tools like Locust are excellent for traditional load testing, they fall short when it comes to the streaming, token-level granularity LLMs require.

Enter LLM Locust—a tool purpose-built to bridge this gap.

Why Locust Is Great for Traditional Load Testing

Let’s give credit where it’s due. Locust remains one of the most beloved tools for load testing due to its:

- Python-native scripting: Flexible and intuitive for test scenario creation

- Lightweight concurrency: Greenlets allow for thousands of simulated users

- Real-time Web UI: Simple and powerful for monitoring load tests live

For standard APIs or services, it’s a fantastic choice. But for LLMs? Not quite enough.

The Problem: LLMs Break the Load Testing Mold

1. No Support for LLM-Specific Metrics

Locust doesn’t natively track LLM-specific performance indicators, such as:

- Time to First Token (TTFT)

- Output tokens per second

- Inter-token latency

These streaming dynamics are fundamental to understanding how well an LLM performs, especially in real-time use cases.
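Capturing these dynamics means timestamping each chunk as it arrives rather than timing the request as a whole, since a single end-to-end timer folds the wait for the first token and the generation time into one number. A minimal sketch, assuming the response is exposed as an iterable of text chunks; `fake_llm_stream` is a hypothetical stand-in for a real streaming HTTP response, with sleeps simulating prefill and decode.

```python
import time
from typing import Iterable, Iterator


def timed_chunks(chunks: Iterable[str]) -> Iterator[tuple[float, str]]:
    """Yield (arrival_time, chunk) pairs as chunks arrive from a stream."""
    for chunk in chunks:
        yield time.perf_counter(), chunk


def fake_llm_stream() -> Iterator[str]:
    """Hypothetical stand-in for a streaming LLM response."""
    time.sleep(0.2)  # simulated prefill before the first chunk
    for piece in ["Hello", ",", " world", "!"]:
        yield piece
        time.sleep(0.02)  # simulated decode delay between chunks


start = time.perf_counter()
events = list(timed_chunks(fake_llm_stream()))
end = time.perf_counter()

ttft = events[0][0] - start  # time to first chunk
total = end - start          # what a single end-to-end timer would report
print(f"TTFT {ttft:.3f}s vs total {total:.3f}s over {len(events)} chunks")
```

Time to first chunk only approximates TTFT when the first chunk actually carries text, which is not guaranteed by every API.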

2. Token Streaming Inconsistency + CPU Bottlenecks

LLM APIs often stream tokens inconsistently: some first send chunks that carry no text, others send one token at a time, and some deliver multiple tokens in a single chunk.

To measure output tokens accurately, responses must be re-tokenized, since the number of streamed chunks cannot be trusted to match the number of tokens generated.
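A small illustration of why counting chunks is not enough. A real benchmark would use the served model's own tokenizer (for instance a Hugging Face `transformers` tokenizer); to keep this sketch dependency-free, the regex below is a toy stand-in that treats words and punctuation marks as tokens. The chunk list is made up to mimic the streaming patterns described above.

```python
import re

# Toy stand-in for a real model tokenizer: words and punctuation marks,
# with any leading whitespace attached to the following token.
TOKEN_PATTERN = re.compile(r"\s*\w+|\s*[^\w\s]")


def toy_tokenize(text: str) -> list[str]:
    return TOKEN_PATTERN.findall(text)


# Chunks as an API might stream them: empty chunks,
# single tokens, and several tokens bundled together.
chunks = ["", "The quick", " brown fox", " jumps", "", " over the lazy dog."]

chunk_count = len(chunks)                         # naive: one chunk = one token
nonempty_chunks = sum(1 for c in chunks if c)     # still undercounts
token_count = len(toy_tokenize("".join(chunks)))  # re-tokenize the full text

print(chunk_count, nonempty_chunks, token_count)  # 6 4 10
```

Tokenizing the concatenated text, rather than each chunk on its own, avoids miscounting tokens that a real subword tokenizer might merge across chunk boundaries.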

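But here’s the catch: tokenization is a CPU-bound task, and it has to run on every streamed response. Locust uses gevent greenlets for lightweight concurrency; they are scheduled cooperatively inside a single OS thread and only switch when one of them yields, typically while waiting on I/O. Python’s Global Interpreter Lock (GIL) also keeps ordinary threads from running Python code in parallel. CPU-heavy operations like tokenization therefore block the event loop and stall every other simulated user, reducing throughput and skewing your benchmark results.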

The combination of inconsistent streaming behavior and Python’s GIL makes this a significant performance bottleneck in traditional Locust setups.
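The stall is easy to reproduce. This sketch uses `asyncio` instead of gevent so it runs on the standard library alone; both schedule tasks cooperatively on one thread, so a task that never yields blocks everyone else in the same way. The busy loop is a hypothetical stand-in for tokenizing a large response, and the heartbeat task plays the role of a simulated user that should wake every 20 ms.

```python
import asyncio
import time


def cpu_heavy(seconds: float) -> None:
    """Busy loop standing in for tokenizing a large streamed response."""
    end = time.perf_counter() + seconds
    while time.perf_counter() < end:
        pass


async def heartbeat(gaps: list[float], beats: int, interval: float) -> None:
    """A 'simulated user' that should wake up every `interval` seconds."""
    last = time.perf_counter()
    for _ in range(beats):
        await asyncio.sleep(interval)
        now = time.perf_counter()
        gaps.append(now - last)
        last = now


async def tokenizer_task() -> None:
    await asyncio.sleep(0.05)
    cpu_heavy(0.3)  # never awaits, so the whole event loop stalls here


async def main() -> list[float]:
    gaps: list[float] = []
    await asyncio.gather(heartbeat(gaps, beats=10, interval=0.02), tokenizer_task())
    return gaps


gaps = asyncio.run(main())
print(f"expected ~0.02s between beats, worst gap {max(gaps):.2f}s")
```

The worst gap comes out at roughly the length of the CPU-bound section; running that work in a separate process removes it from the loop entirely.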

3. No Custom Charts

Want to plot TTFT or streaming throughput? Locust’s UI doesn’t support custom LLM metrics out of the box, leaving key data invisible during test runs.

4. Competing Tools Are Limited

Tools like genai-perf are valuable, but often provide:

- One-off benchmark snapshots

- Limited configurability

- No real-time visual feedback

They lack the iterative, exploratory flexibility needed in real-world benchmarking.

The Solution: Meet LLM Locust

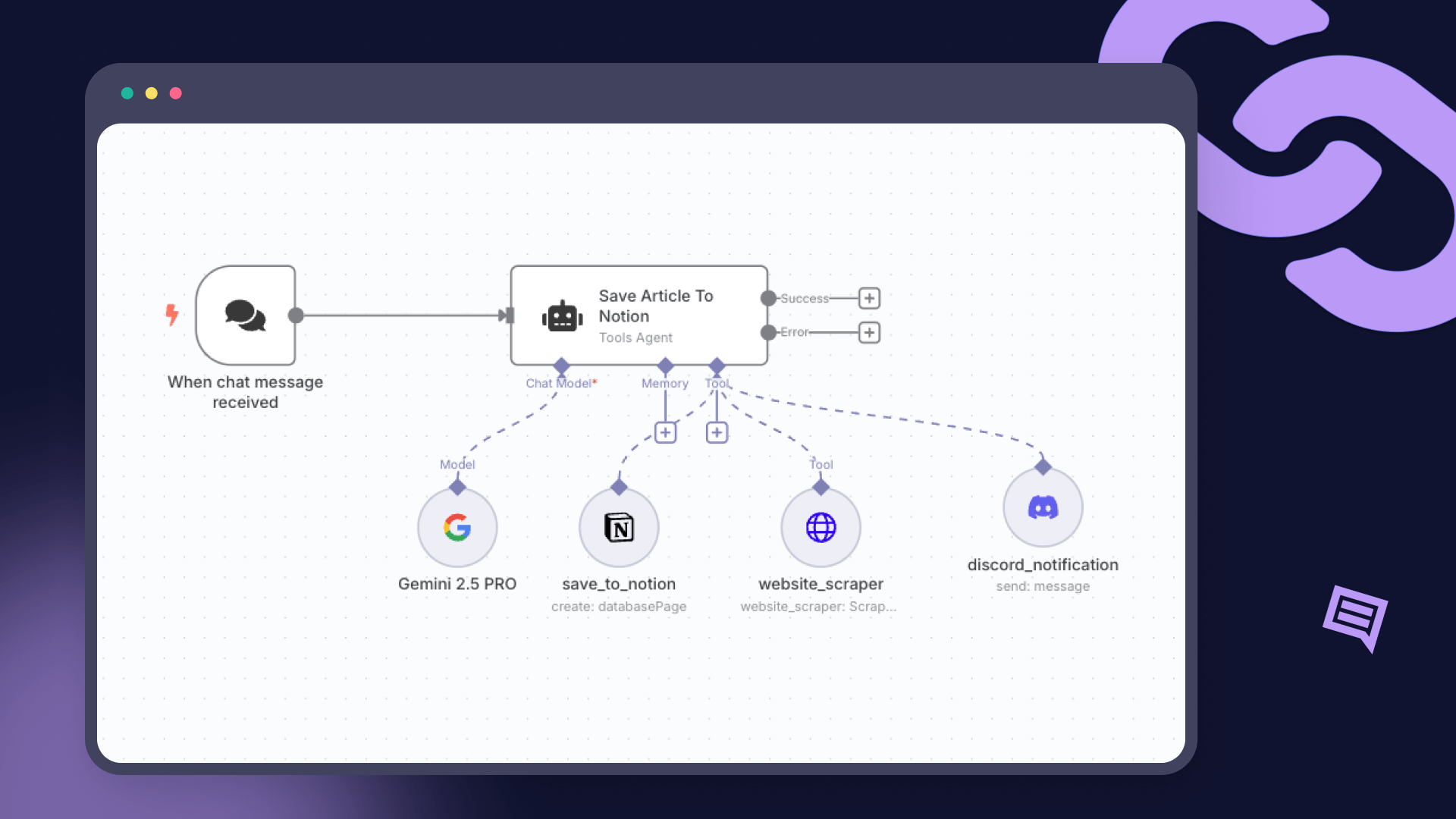

LLM Locust combines the simplicity of Locust with deep support for LLM-specific benchmarking. Inspired by BentoML’s llm-bench, it introduces a modular architecture and custom frontend for real-time insights.

How LLM Locust Works

1. Asynchronous Request Generation

Simulated users send continuous asynchronous requests to your LLM API, mimicking real-world load. Request generation runs in its own Python process, separate from tokenization, so CPU-heavy token counting cannot stall the simulated users.
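A minimal sketch of this step, assuming a stub async generator in place of a real HTTP streaming client; `fake_llm_api`, `simulated_user` and the record layout are hypothetical. Each user only timestamps chunks and forwards the raw record, leaving tokenization to a consumer elsewhere. A `multiprocessing.Queue` serves as the hand-off so that consumer could run in a separate process, although this example drains the queue in the same process to stay short.

```python
import asyncio
import multiprocessing as mp
import random
import time
from typing import AsyncIterator


async def fake_llm_api(prompt: str) -> AsyncIterator[str]:
    """Hypothetical stand-in for a streaming LLM endpoint."""
    await asyncio.sleep(random.uniform(0.05, 0.1))  # simulated prefill
    for word in f"echo: {prompt}".split():
        yield word + " "
        await asyncio.sleep(0.01)  # simulated decode step


async def simulated_user(user_id: int, n_requests: int, sink) -> None:
    """Send requests back to back and forward raw timings; no tokenization here."""
    for i in range(n_requests):
        start = time.perf_counter()
        chunks = []
        async for chunk in fake_llm_api(f"user {user_id} request {i}"):
            chunks.append((time.perf_counter(), chunk))
        sink.put({"user": user_id, "start": start, "chunks": chunks})


async def run_load(n_users: int, n_requests: int, sink) -> None:
    await asyncio.gather(*(simulated_user(u, n_requests, sink) for u in range(n_users)))


q = mp.Queue()
asyncio.run(run_load(n_users=5, n_requests=3, sink=q))
records = [q.get(timeout=1) for _ in range(15)]
print(len(records), "raw records ready for the metrics process")
```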

2. Streaming Response Collection

LLM responses are streamed and routed to a metrics daemon for lightweight parsing and analysis.

3. Metrics Processing

The daemon tokenizes responses, calculates TTFT, tokens/s, and inter-token latency, and buckets the results.
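The daemon step can be sketched as two functions: one turns a raw streamed record into per-request metrics, the other groups those metrics into fixed time windows. Assumptions: records carry a start time and a list of `(timestamp, text)` chunks, a toy regex replaces the model tokenizer, and the window size is a parameter. Inter-token latency is taken as generation time spread over the remaining tokens, which stays meaningful when one chunk carries several tokens.

```python
import re
from collections import defaultdict

TOKEN_PATTERN = re.compile(r"\s*\w+|\s*[^\w\s]")  # toy tokenizer stand-in


def count_tokens(text: str) -> int:
    return len(TOKEN_PATTERN.findall(text))


def process_record(record: dict) -> dict:
    """Turn one raw streamed response into per-request metrics."""
    start = record["start"]
    chunks = [(t, text) for t, text in record["chunks"] if text]  # drop empty chunks
    if not chunks:
        raise ValueError("response contained no text")
    first_t, last_t = chunks[0][0], chunks[-1][0]
    n_tokens = count_tokens("".join(text for _, text in chunks))
    return {
        "end": last_t,
        "ttft": first_t - start,
        "output_tokens": n_tokens,
        "tokens_per_second": n_tokens / (last_t - start),
        # Spread generation time evenly across tokens, since one chunk may hold several.
        "inter_token_latency": (last_t - first_t) / (n_tokens - 1) if n_tokens > 1 else 0.0,
    }


def bucket(metrics: list[dict], window: float = 2.0) -> dict[int, dict]:
    """Group per-request metrics by the time window in which each request finished."""
    buckets: dict[int, dict] = defaultdict(lambda: {"requests": 0, "output_tokens": 0, "ttfts": []})
    for m in metrics:
        b = buckets[int(m["end"] // window)]
        b["requests"] += 1
        b["output_tokens"] += m["output_tokens"]
        b["ttfts"].append(m["ttft"])
    return dict(buckets)


raw = [
    {"start": 0.0, "chunks": [(0.4, ""), (0.5, "Hi"), (0.75, " there"), (1.0, ", friend.")]},
    {"start": 1.0, "chunks": [(1.25, "OK"), (2.5, " done")]},
]
metrics = [process_record(r) for r in raw]
buckets = bucket(metrics)
print(metrics, buckets)
```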

4. Aggregation

Every 2 seconds, the bucketed data is sent to a FastAPI backend that mimics the Locust backend and stores and aggregates metrics globally.
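The backend's job is essentially a merge: each incoming report adds to global counters and latency samples. To stay dependency-free, this sketch leaves out the FastAPI and HTTP layer and models only that merge. The payload fields (`interval_end`, `requests`, `output_tokens`, `ttfts`), the `GlobalStats` class and the nearest-rank percentile are all assumptions for illustration.

```python
import json
import math


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile, p in (0, 100]."""
    ordered = sorted(values)
    if not ordered:
        return math.nan
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


class GlobalStats:
    """Hypothetical backend-side store that merges periodic worker reports."""

    def __init__(self) -> None:
        self.requests = 0
        self.output_tokens = 0
        self.ttfts: list[float] = []
        self.elapsed = 0.0

    def ingest(self, payload: str) -> None:
        snap = json.loads(payload)  # one worker's report for one interval
        self.requests += snap["requests"]
        self.output_tokens += snap["output_tokens"]
        self.ttfts.extend(snap["ttfts"])
        # Several workers report the same interval, so take the max, not the sum.
        self.elapsed = max(self.elapsed, snap["interval_end"])

    def summary(self) -> dict:
        secs = self.elapsed or math.inf
        return {
            "requests": self.requests,
            "rps": self.requests / secs,
            "output_tokens_per_s": self.output_tokens / secs,
            "ttft_p50": percentile(self.ttfts, 50),
            "ttft_p95": percentile(self.ttfts, 95),
        }


stats = GlobalStats()
reports = [
    {"interval_end": 2.0, "requests": 4, "output_tokens": 400, "ttfts": [0.2, 0.3, 0.25, 0.4]},
    {"interval_end": 2.0, "requests": 3, "output_tokens": 330, "ttfts": [0.35, 0.3, 0.5]},
    {"interval_end": 4.0, "requests": 5, "output_tokens": 510, "ttfts": [0.2, 0.22, 0.3, 0.28, 0.9]},
]
for r in reports:
    stats.ingest(json.dumps(r))  # stands in for an HTTP POST every 2 seconds
summary = stats.summary()
print(summary)
```

Keeping raw TTFT samples makes percentiles exact but grows memory with test length; a long-running backend might keep a histogram instead.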

5. Real-Time Visualization

A customized version of the Locust frontend displays:

- TTFT per request

- Token throughput over time

- 📊 RPS, latency, and other key stats

Here is the detailed architecture:

Here is a demo of what it looks like:

Conclusion

Locust is a great load testing tool—but not for LLMs out of the box.

LLM Locust brings the streaming, token-level precision required to properly benchmark today’s powerful language models.

Whether you're deploying an open-source model on your own infrastructure or comparing performance across LLM APIs, LLM Locust gives you the clarity, flexibility, and control to do it right.

GitHub link: https://github.com/truefoundry/llm-locust
