ML Deployment as a Service

While developing models has become more streamlined, deploying, scaling, and managing ML models in production remains a major hurdle. Platform teams are responsible for ensuring that ML models can be seamlessly deployed, monitored, scaled, and optimized across multiple environments, all while minimizing infrastructure costs and maintaining reliability.

Traditional ML deployment approaches often require extensive Kubernetes expertise, manual GPU resource management, and inefficient scaling mechanisms, leading to high operational overhead for platform teams. In response to these challenges, TrueFoundry offers an ML Deployment as a Service solution, designed to automate infrastructure selection, simplify deployment, optimize performance, and enhance observability.

Challenges Faced by Platform Teams in ML Deployment

1. Manual Infrastructure Configuration & Selection

Deploying ML models requires selecting the right GPU instances, model servers, and Kubernetes configurations. Without intelligent automation, platform teams must manually allocate resources, leading to error-prone, time-consuming deployments.

2. High Operational Overhead

The current process often involves multiple handoffs between data scientists, ML engineers, and DevOps teams. Platform engineers frequently intervene to assist with Kubernetes configurations, scaling, and monitoring—creating inefficiencies and bottlenecks.

3. Lack of GPU-Based Autoscaling

Traditional ML deployments often lack built-in GPU autoscaling. Without dynamic scaling based on Requests Per Second (RPS), utilization, or time-based triggers, infrastructure is either over-provisioned (wasting spend on idle GPUs) or under-provisioned (causing performance bottlenecks).

4. Complex Model Serving and Selection

Choosing the most efficient model server (e.g., vLLM, SGLang, Triton, TensorFlow Serving, or a custom FastAPI service) requires deep expertise in performance benchmarking, memory optimization, and load balancing. Without automation, teams often end up with inefficient deployments and suboptimal performance.
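Benchmarking candidate servers comes down to measuring latency and throughput under the same workload. The sketch below is a minimal harness. `benchmark` is a hypothetical helper that takes any callable sending one inference request, for example a thin client you would write around a vLLM or Triton endpoint. It reports p50/p95 latency and sequential throughput. It does not model concurrency, batching, or GPU memory pressure, and a real comparison would need all three.

```python
import statistics
import time
from typing import Callable, Dict, List


def benchmark(infer: Callable[[str], str], prompts: List[str], warmup: int = 3) -> Dict[str, float]:
    """Time sequential calls to `infer` and summarize latency in milliseconds."""
    # Warm-up calls are discarded so one-time costs (compilation, cache fills) don't skew results.
    for prompt in prompts[:warmup]:
        infer(prompt)

    latencies_ms = []
    for prompt in prompts:
        start = time.perf_counter()
        infer(prompt)
        latencies_ms.append((time.perf_counter() - start) * 1000)

    # quantiles(n=100) returns 99 cut points: index 49 is p50, index 94 is p95.
    cuts = statistics.quantiles(latencies_ms, n=100)
    total_s = sum(latencies_ms) / 1000
    return {
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        # Single-client, sequential throughput; says nothing about behavior under concurrent load.
        "throughput_rps": len(prompts) / total_s if total_s > 0 else float("inf"),
    }


if __name__ == "__main__":
    # Hypothetical stand-ins for real server clients; each one just sleeps.
    def fast_server(prompt: str) -> str:
        time.sleep(0.002)
        return prompt

    def slow_server(prompt: str) -> str:
        time.sleep(0.005)
        return prompt

    prompts = [f"prompt {i}" for i in range(50)]
    results = {name: benchmark(fn, prompts) for name, fn in [("fast", fast_server), ("slow", slow_server)]}
    best = min(results, key=lambda name: results[name]["p95_ms"])
    print(best, results[best])
```

The example ranks servers by p95 rather than by mean latency, because tail latency is usually what users of an interactive endpoint notice.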

5. Debugging & Observability Challenges

ML deployments generate logs, metrics, and events across multiple platforms. Troubleshooting performance issues or failures is tedious, as logs are often scattered, making it difficult for platform teams to quickly identify and resolve issues.

6. Cost Overruns & Inefficient Scaling

Without automated resource optimization, platform teams must manually monitor and manage idle models, leading to unnecessary cloud expenses. Traditional ML deployment setups typically lack built-in auto-shutdown and dynamic scaling.

7. Deployment Strategies & Model Upgrades

Enterprises require zero-downtime model upgrades, but traditional setups often lack built-in support for rolling updates, canary releases, and blue-green deployments. This increases the risk of service disruptions when new model versions are deployed.

How TrueFoundry Simplifies ML Deployment

TrueFoundry addresses these challenges with a fully managed ML deployment platform that enables self-serve deployments, intelligent resource selection, cost optimization, and enhanced observability. Here’s how:

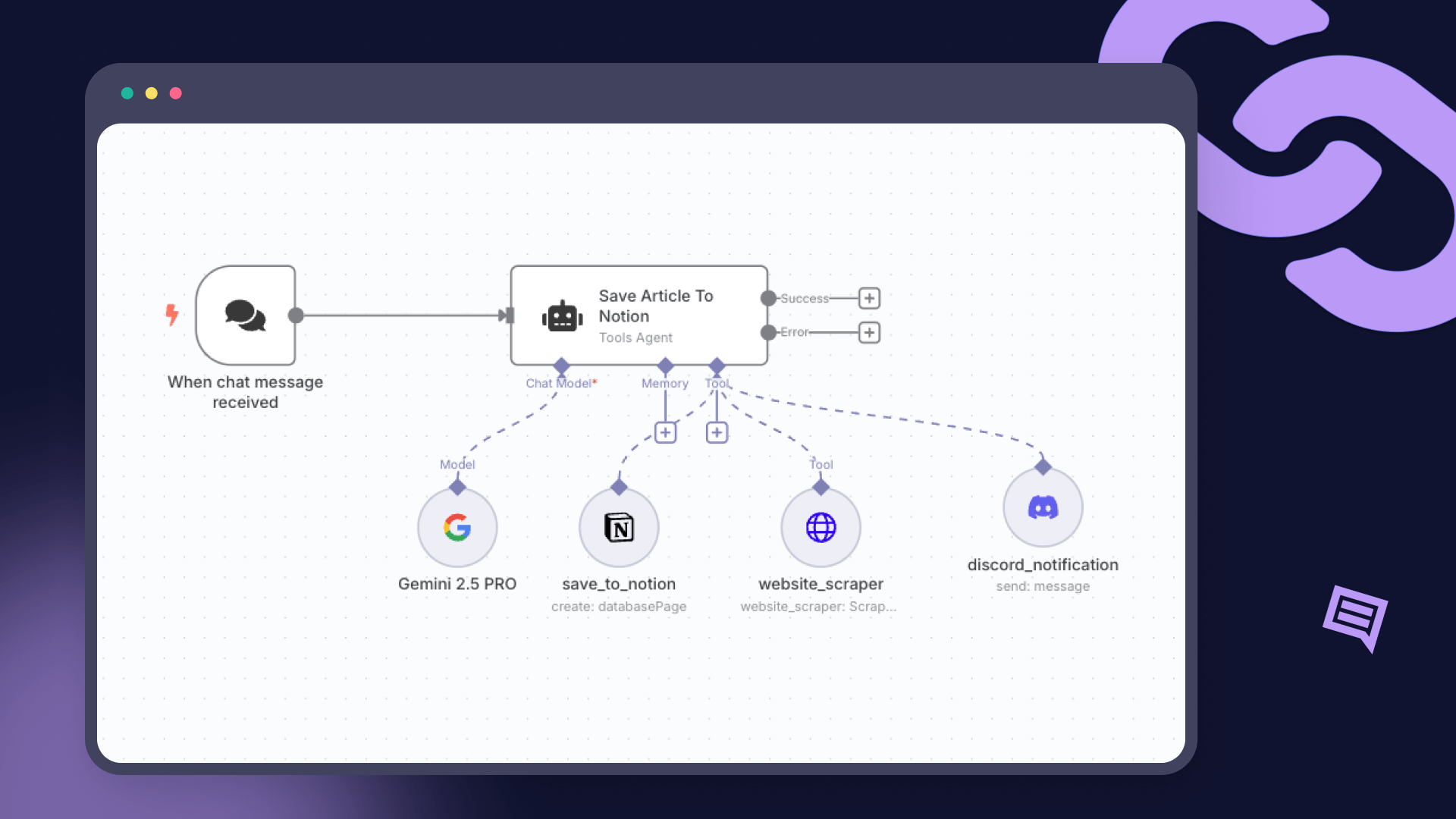

1. Automated & Intelligent Model Deployment

TrueFoundry allows platform teams to deploy ML models with a single click, eliminating the need for Kubernetes expertise. The platform intelligently picks the best infrastructure configurations, selecting the optimal GPU instance types, model servers, and scaling strategies based on workload requirements.
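The sketch below illustrates the kind of decision being automated by picking the smallest GPU that fits a model's weights. The memory sizes are NVIDIA's published per-GPU figures. Several parts are simplifying assumptions rather than TrueFoundry's selection logic:

- the catalog order, which is assumed to track cost;
- the 1.2x overhead factor for activations and KV cache;
- the restriction to power-of-two GPU counts.

```python
from typing import List, Optional, Tuple

# Hypothetical catalog, ordered smallest to largest (assumed to roughly track cost).
GPU_CATALOG: List[Tuple[str, int]] = [
    ("T4", 16),
    ("A10G", 24),
    ("L4", 24),
    ("A100-40GB", 40),
    ("A100-80GB", 80),
    ("H100-80GB", 80),
]

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def estimate_gpu_memory_gb(params_billion: float, dtype: str = "fp16", overhead: float = 1.2) -> float:
    """Weight size times an assumed overhead factor for activations and KV cache."""
    # 1e9 params * bytes-per-param / 1e9 bytes-per-GB = params_billion * bytes-per-param
    weights_gb = params_billion * BYTES_PER_PARAM[dtype]
    return weights_gb * overhead


def pick_gpu(params_billion: float, dtype: str = "fp16") -> Optional[Tuple[str, int]]:
    """Return (gpu_type, count) for the smallest configuration whose total memory fits."""
    needed = estimate_gpu_memory_gb(params_billion, dtype)
    # Prefer fewer GPUs first; tensor-parallel degrees are usually powers of two.
    for count in (1, 2, 4, 8):
        for name, mem_gb in GPU_CATALOG:
            if mem_gb * count >= needed:
                return name, count
    return None  # does not fit on a single 8-GPU node
```

For a 7B-parameter model in fp16, this suggests one A10G (about 16.8 GB needed). For a 70B model, it falls through to four A100-80GB cards.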

Additionally, GitOps integration ensures that all deployments are automated and reproducible, with built-in YAML generation for easy CI/CD workflows. By abstracting infrastructure complexities, TrueFoundry empowers data scientists and ML engineers to deploy models independently, reducing the operational burden on platform teams.
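A GitOps flow needs deployment specs that render identically every time, so that diffs in Git reflect real changes. The minimal sketch below assumes a plain Kubernetes Deployment, not TrueFoundry's own spec format. It builds a manifest for a GPU-backed model server and serializes it deterministically.

A few details are assumptions or placeholders:

- It emits JSON to stay within the standard library. JSON is valid YAML 1.2, and `kubectl apply -f` accepts either.
- The image name and port 8000 are placeholders.

```python
import json
from typing import Any, Dict


def render_deployment(name: str, image: str, replicas: int, gpus: int) -> Dict[str, Any]:
    """Build a minimal Kubernetes apps/v1 Deployment for a model server."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": 8000}],
                        # GPUs are requested through the NVIDIA device plugin's extended resource.
                        "resources": {"limits": {"nvidia.com/gpu": gpus}},
                    }],
                },
            },
        },
    }


def to_manifest_text(manifest: Dict[str, Any]) -> str:
    # Sorted keys and a fixed indent make the output byte-for-byte reproducible,
    # so a Git diff shows only real spec changes.
    return json.dumps(manifest, indent=2, sort_keys=True) + "\n"


if __name__ == "__main__":
    print(to_manifest_text(render_deployment("llm-server", "registry.example.com/llm:1.0", 2, 1)))
```

In a CI pipeline, the rendered text would be committed to the repository, and a GitOps controller or `kubectl apply` would reconcile the cluster against it.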

2. Cost & Performance Optimization

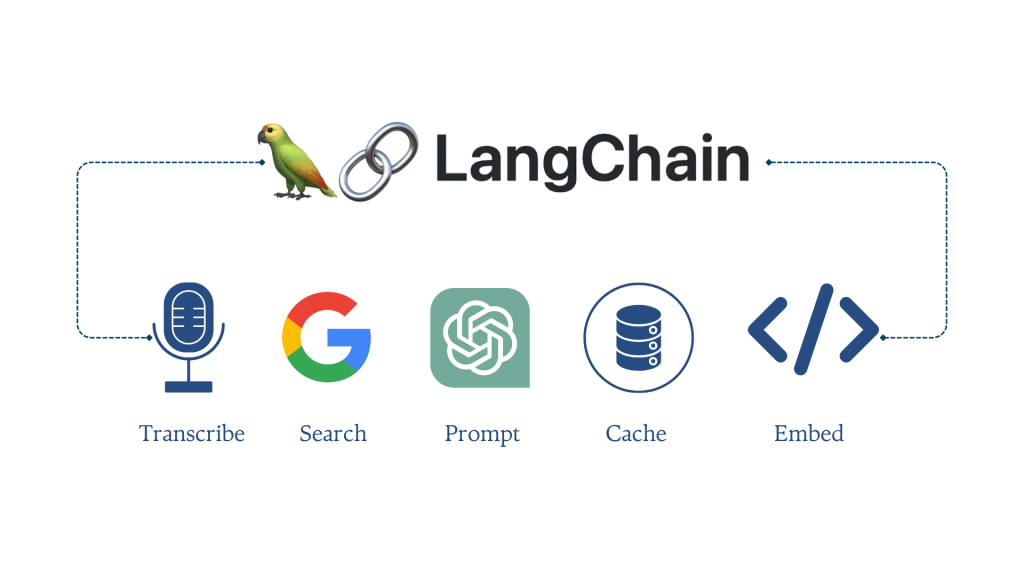

TrueFoundry’s GPU-based autoscaling adjusts resources to real-time demand. Models scale up and down based on RPS, GPU utilization, or scheduled triggers, balancing performance against cost. The platform also provides:

- Auto Shutdown for Idle Models: Reducing unnecessary GPU consumption.

- Intelligent Model Caching: Improving inference speed and reducing redundant computations.
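The scaling behavior described above can be sketched as a single decision function. This is a simplified model, not TrueFoundry's implementation. It makes three decisions:

- It uses the ratio rule from the Kubernetes Horizontal Pod Autoscaler: desired = ceil(current x metric / target), taking the largest result across metrics.
- It adds a weekday peak-hours floor as the scheduled trigger.
- It scales to zero after an idle period.

The `ScalingPolicy` fields and thresholds are hypothetical. The HPA's tolerance band and stabilization windows are omitted.

```python
import math
from dataclasses import dataclass
from datetime import datetime, time as dtime


@dataclass
class ScalingPolicy:
    target_rps_per_replica: float  # assumed sustainable RPS for one replica
    target_gpu_util: float         # e.g. 0.7 means "aim for 70% average GPU utilization"
    min_replicas: int
    max_replicas: int
    peak_hours_min: int            # scheduled floor on weekdays 09:00-18:00 (hypothetical)
    idle_shutdown_s: float         # scale to zero after this long without a request


def desired_replicas(policy: ScalingPolicy, current_replicas: int, total_rps: float,
                     avg_gpu_util: float, seconds_since_last_request: float,
                     now: datetime) -> int:
    # Auto shutdown: an idle model releases its GPUs entirely.
    if seconds_since_last_request >= policy.idle_shutdown_s:
        return 0

    # Ratio rule (as in the Kubernetes HPA): size the fleet so each metric
    # returns to its target; the busiest metric wins.
    by_rps = math.ceil(total_rps / policy.target_rps_per_replica)
    by_gpu = math.ceil(current_replicas * avg_gpu_util / policy.target_gpu_util)
    desired = max(by_rps, by_gpu)

    # Time-based trigger: keep warm capacity during weekday peak hours.
    floor = policy.min_replicas
    if now.weekday() < 5 and dtime(9) <= now.time() < dtime(18):
        floor = max(floor, policy.peak_hours_min)

    return min(policy.max_replicas, max(floor, desired))


if __name__ == "__main__":
    policy = ScalingPolicy(10.0, 0.7, 1, 20, 3, 600.0)
    # 45 RPS at 10 RPS/replica needs 5 replicas; Saturday, so no scheduled floor.
    print(desired_replicas(policy, 2, 45.0, 0.9, 1.0, datetime(2024, 6, 1, 12)))  # 5
```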

Additionally, TrueFoundry supports advanced deployment strategies, including rolling updates, canary releases, and blue-green deployments, enabling platform teams to roll out new model versions with zero downtime.
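Canary releases and blue-green switches both come down to deciding which version serves each request. The minimal sketch below makes two assumptions: requests carry a stable user ID, and the version names `model-v1` and `model-v2` are placeholders. It hashes the ID into one of 100 buckets and sends buckets below the canary percentage to the new version. Rolling updates work differently, replacing pods incrementally, and are not modeled here.

```python
import hashlib


def route(user_id: str, canary_percent: int,
          stable: str = "model-v1", canary: str = "model-v2") -> str:
    """Send a fixed slice of users (canary_percent out of 100) to the canary version."""
    # sha256 is stable across processes, unlike Python's salted built-in hash(),
    # so the same user always lands in the same bucket.
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # 0..99
    return canary if bucket < canary_percent else stable
```

Raising `canary_percent` from 5 to 25 to 100 ramps the rollout gradually. Because a user's bucket never changes, everyone already on the canary stays there as the percentage grows. A blue-green cutover amounts to flipping the value from 0 to 100 in one step.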

3. Observability & Debugging for ML Workloads

TrueFoundry provides centralized observability, offering logs, metrics, and events all in one place, significantly improving troubleshooting efficiency. This unified dashboard helps platform teams:

- Analyze usage patterns and infrastructure utilization.

- Debug model failures faster with detailed logs and event tracking.
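Part of what a unified view provides is a single, time-ordered timeline across sources. The sketch below is illustrative only: the `Record` type and the function names are hypothetical, not TrueFoundry's API. It merges already-sorted log, metric, and event streams, then pulls out everything recorded near an incident.

```python
import heapq
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Record:
    ts: float     # Unix timestamp in seconds
    source: str   # "log", "metric", or "event"
    message: str


def unified_timeline(*streams: Iterable[Record]) -> List[Record]:
    """Merge per-source streams, each already sorted by time, into one ordered timeline."""
    # heapq.merge does a k-way merge of sorted inputs instead of re-sorting everything.
    return list(heapq.merge(*streams, key=lambda r: r.ts))


def around(records: List[Record], incident_ts: float, radius_s: float = 30.0) -> List[Record]:
    """Everything recorded within radius_s seconds of an incident."""
    return [r for r in records if abs(r.ts - incident_ts) <= radius_s]


if __name__ == "__main__":
    logs = [Record(100.0, "log", "request started"), Record(105.0, "log", "CUDA out of memory")]
    metrics = [Record(103.0, "metric", "gpu_mem=98%")]
    events = [Record(106.0, "event", "pod restarted")]
    for r in around(unified_timeline(logs, metrics, events), incident_ts=105.0, radius_s=3.0):
        print(f"{r.ts:.0f} [{r.source}] {r.message}")
```

Seeing the memory metric, the out-of-memory log line, and the restart event side by side is what shortens the debugging loop.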

Sticky routing for LLMs sends requests from the same session to the same replica, typically so the replica can reuse context it has already processed. This further enhances throughput by 50%. Model catalog support (currently integrated with Hugging Face) provides an easy way to manage model versions and registries.
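Sticky routing needs a stable mapping from session to replica. One common technique is rendezvous (highest-random-weight) hashing. The source does not say which method TrueFoundry uses, so treat this as an illustrative sketch. Each replica gets a hash-derived score per session, and the highest score wins. When a replica is removed, only the sessions pinned to it move; all other sessions keep their replica and its cached context.

```python
import hashlib
from typing import Sequence


def _score(session_id: str, replica: str) -> int:
    # Deterministic pseudo-random weight for this (session, replica) pair.
    digest = hashlib.sha256(f"{session_id}|{replica}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def pick_replica(session_id: str, replicas: Sequence[str]) -> str:
    """Rendezvous hashing: the same session maps to the same replica while it exists."""
    if not replicas:
        raise ValueError("no replicas available")
    return max(replicas, key=lambda r: _score(session_id, r))
```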

Additionally, TrueFoundry’s automated infrastructure suggestions recommend CPU, memory, and autoscaling configurations based on observed traffic patterns, further streamlining deployment management.
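Infrastructure suggestions of this kind are typically derived from observed usage. The sketch below turns CPU and memory samples into suggested Kubernetes-style requests and a memory limit. The p95 target and 20% headroom are assumptions, not TrueFoundry's documented rules.

```python
import math
from typing import Dict, List


def percentile(values: List[float], q: float) -> float:
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(q * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def suggest_resources(cpu_cores: List[float], mem_gib: List[float],
                      headroom: float = 1.2) -> Dict[str, float]:
    """Suggest requests from p95 observed usage plus headroom."""
    return {
        # Requests drive scheduling: sizing at p95 keeps rare spikes from inflating every pod.
        "cpu_request": round(percentile(cpu_cores, 95) * headroom, 2),
        "memory_request_gib": round(percentile(mem_gib, 95) * headroom, 2),
        # Exceeding a memory limit gets the container OOM-killed, so cover the observed peak.
        "memory_limit_gib": round(max(mem_gib) * headroom, 2),
    }
```

CPU gets no limit here because exceeding it only throttles the container. Memory is treated more conservatively because exceeding the limit terminates the container.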
