Cognita: Building Open Source, Modular RAG Applications for Production

Suppose team A is developing a RAG application for use-case-1, team B is developing one for use-case-2, and team C is still planning its upcoming RAG use case. Have you ever wished that building RAG pipelines across multiple teams were easier? Ideally, no team would need to start from scratch: each could reuse the same modular base functionality and develop its own app on top of it without interfering with the others.

Worry not!! This is why we created Cognita. While RAG is undeniably impressive, the process of creating a functional application with it can be daunting. There's a significant amount to grasp regarding implementation and development practices, ranging from selecting the appropriate AI models for the specific use case to organizing data effectively to obtain the desired insights. While tools like LangChain and LlamaIndex exist to simplify the prototype design process, there has yet to be an accessible, ready-to-use open-source RAG template that incorporates best practices and offers modular support, allowing anyone to quickly and easily utilize it.

Advantages of Cognita:

- A central reusable repository of parsers, loaders, embedders and retrievers.

- Ability for non-technical users to play with UI - Upload documents and perform QnA using modules built by the development team.

- Fully API driven - which allows integration with other systems.

Overview

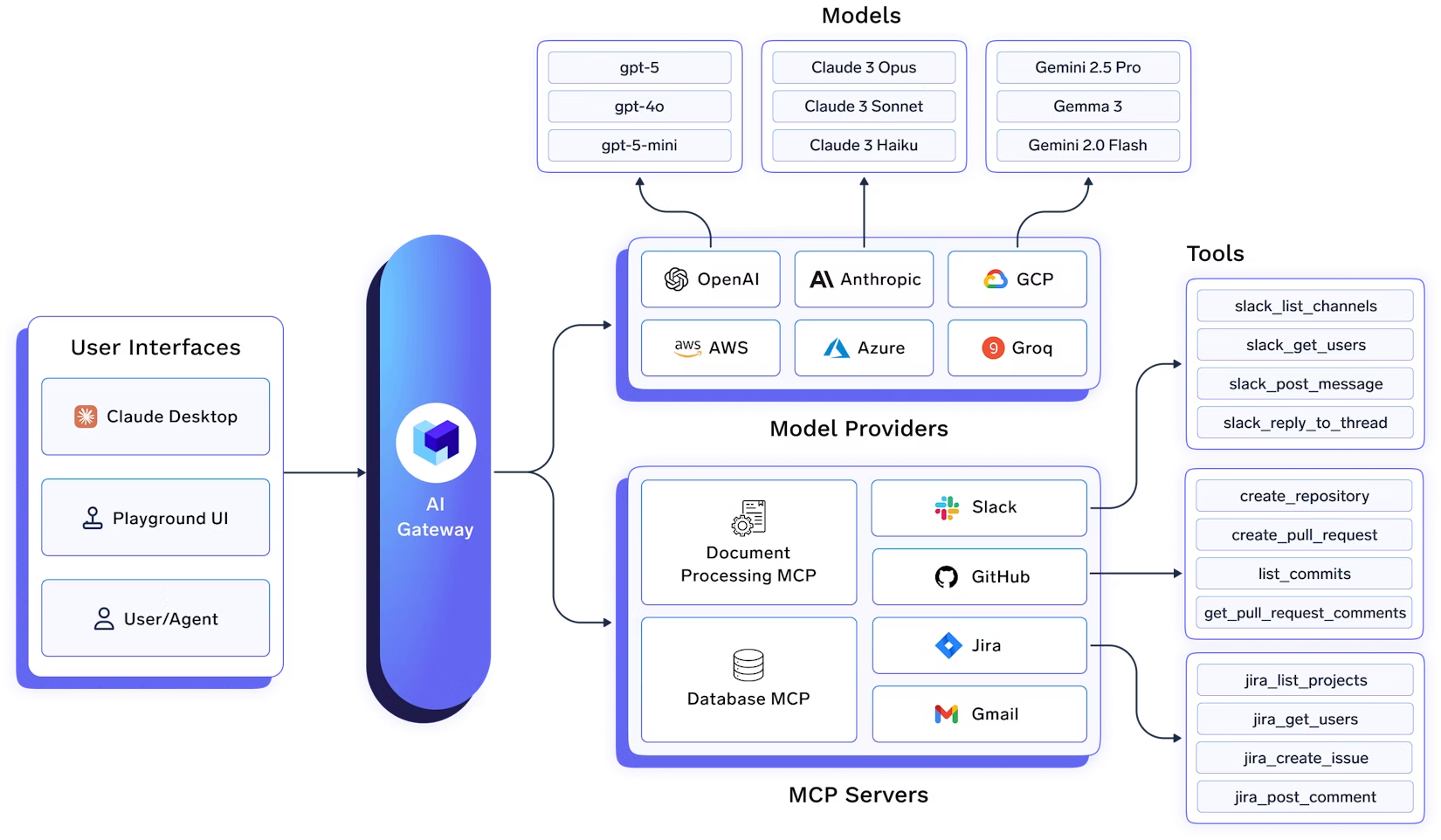

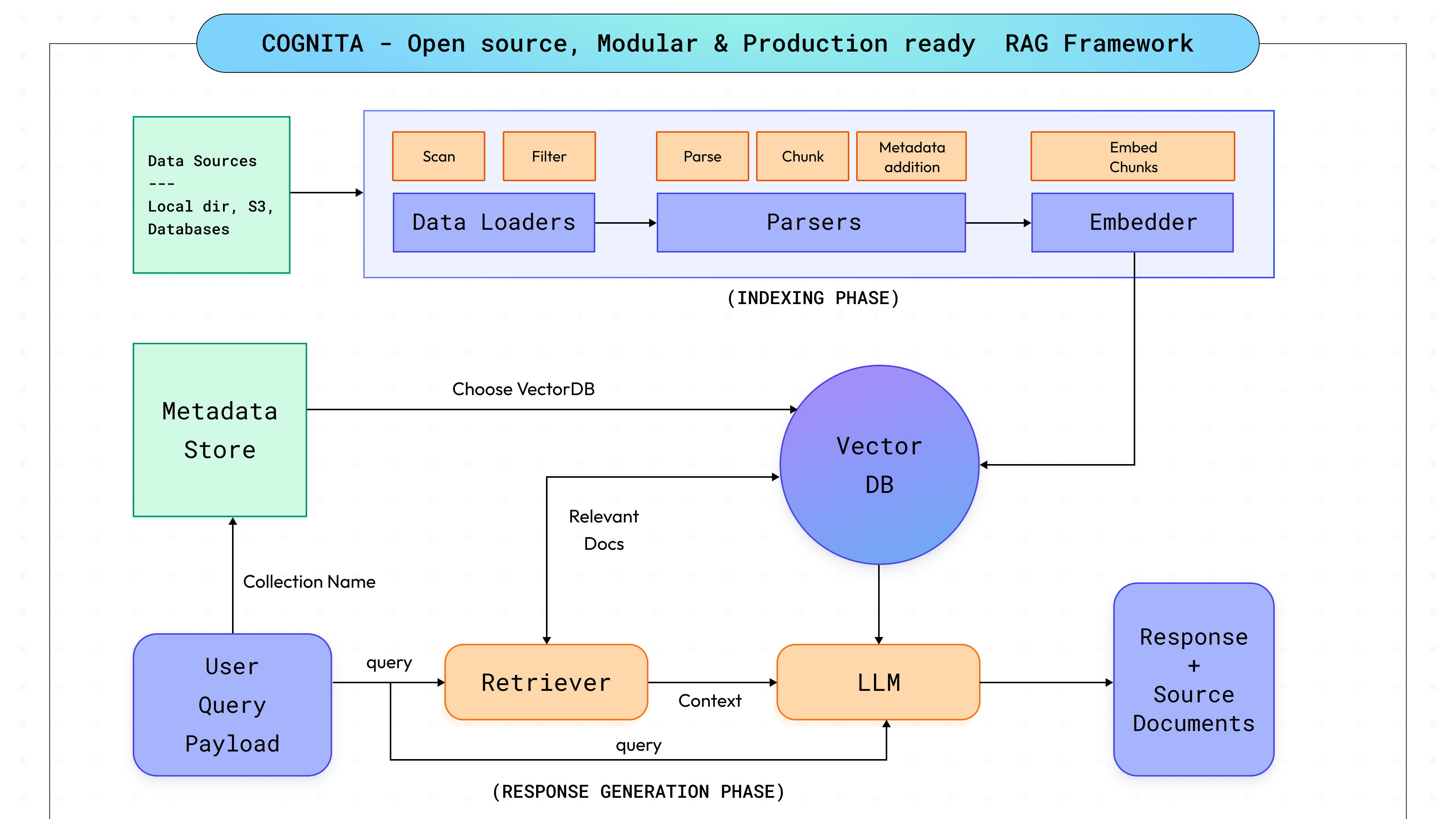

Delving into the inner workings of Cognita, our goal was to strike a balance between full customisation and adaptability while ensuring user-friendliness right out of the box. Given the rapid pace of advancements in RAG and AI, it was imperative for us to engineer Cognita with scalability in mind, enabling seamless integration of new breakthroughs and diverse use cases. This led us to break down the RAG process into distinct modular steps (as shown in the above diagram, to be discussed in subsequent sections), facilitating easier system maintenance, the addition of new functionalities such as interoperability with other AI libraries, and enabling users to tailor the platform to their specific requirements. Our focus remains on providing users with a robust tool that not only meets their current needs but also evolves alongside technology, ensuring long-term value.

Components

Cognita is designed around seven different modules, each customisable and controllable to suit different needs:

- Data Loaders

- Parsers

- Embedders

- Rerankers

- Vector DBs

- Metadata Store

- Query Controllers

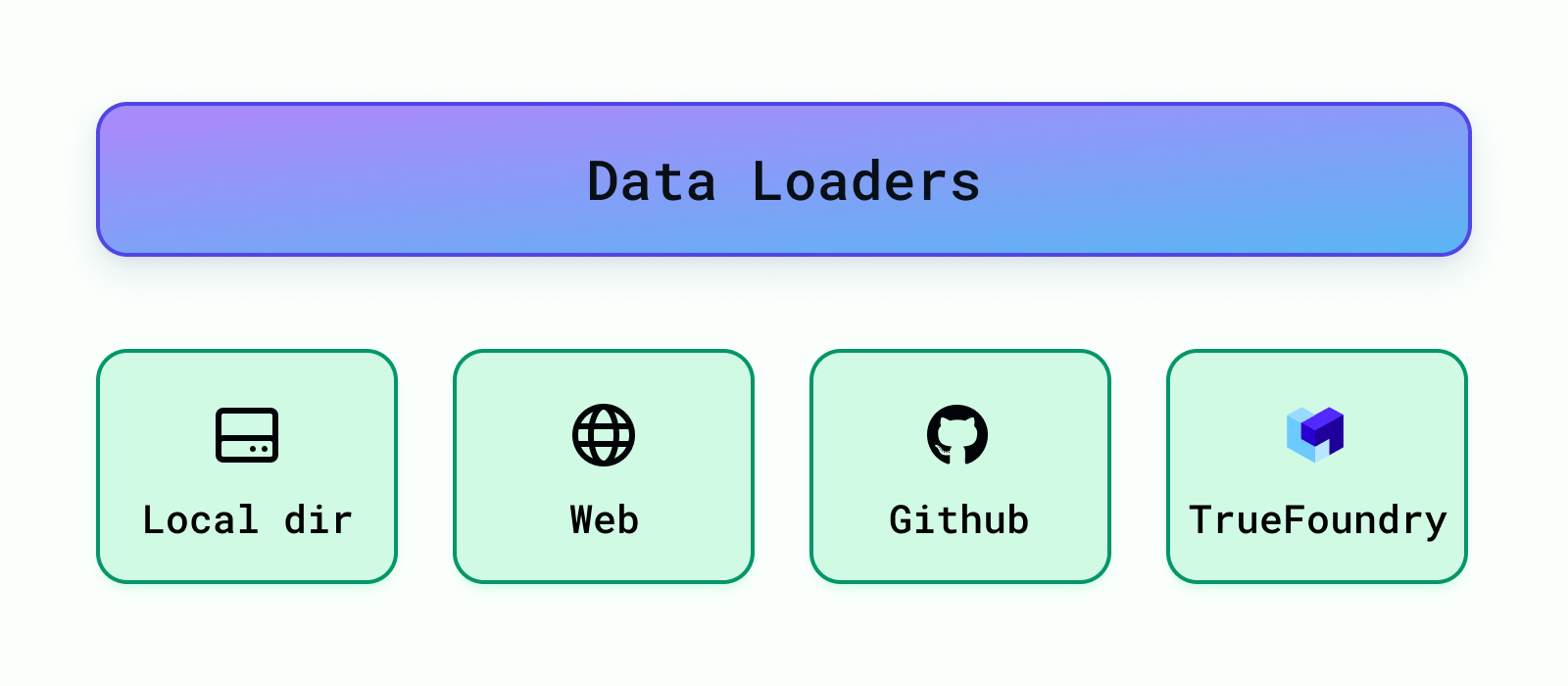

Data Loaders

These load the data from different sources like local directories, S3 buckets, databases, TrueFoundry artifacts, etc. Cognita currently supports data loading from a local directory, web URL, GitHub repository and TrueFoundry artifacts. More data loaders can be easily added under backend/modules/dataloaders/. Once a dataloader is added, you need to register it under backend/modules/dataloaders/__init__.py so that it can be used by the RAG application. To register a dataloader, add the following:

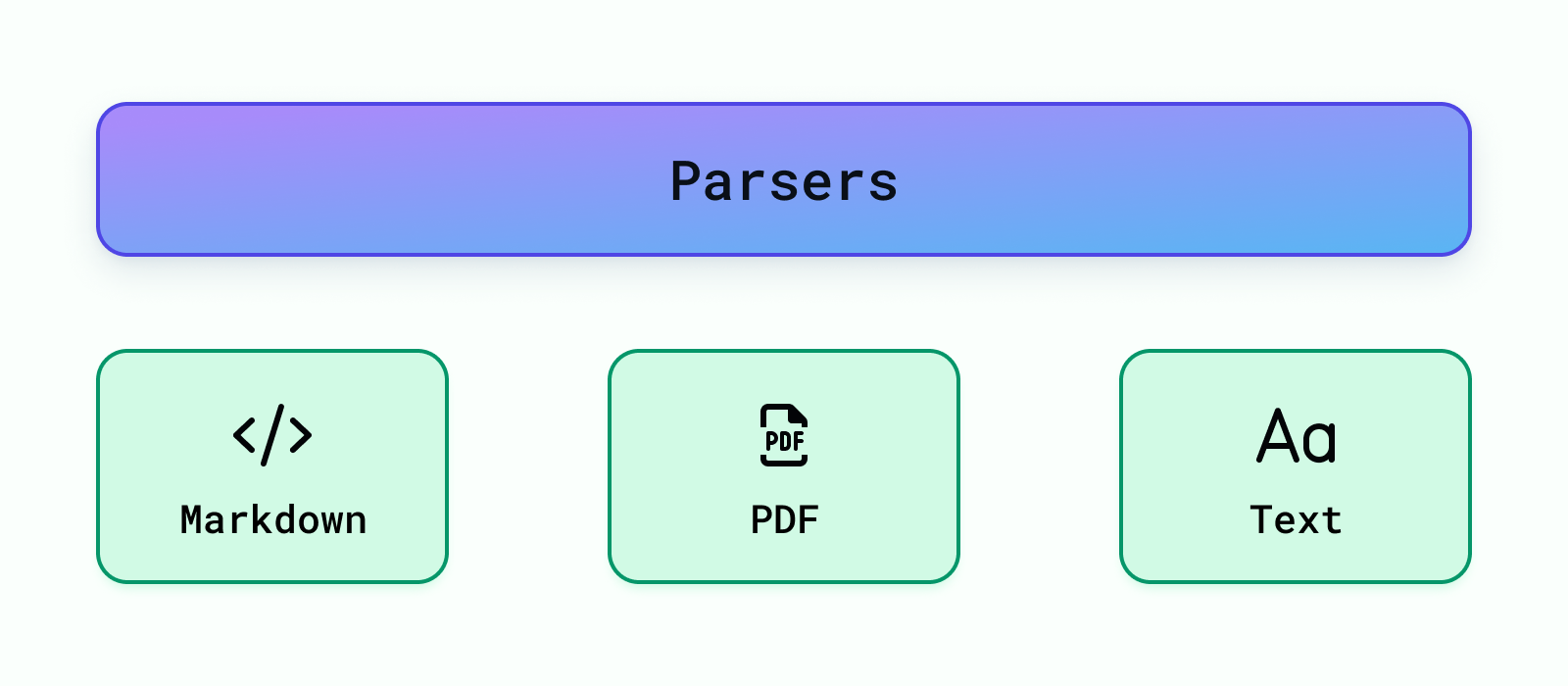

Parsers

In this step, we deal with different kinds of data, like regular text files, PDFs, and even Markdown files. The aim is to turn all these different types into one common format so we can work with them more easily later on. This part, called parsing, usually takes the longest and is difficult to implement when we're setting up a system like this. But using Cognita can help because it already handles the hard work of managing data pipelines for us.

After parsing, we split the parsed data into uniform chunks. But why do we need this? The text we get from the files can vary in length. If we use these long texts directly, we'll end up adding a bunch of unnecessary information. Plus, since all LLMs can only handle a certain amount of text at once, we won't be able to include all the important context needed for the question. So instead, we break down the text into smaller parts for each section. Intuitively, smaller chunks will contain relevant concepts and will be less noisy compared to larger chunks.
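To make the chunking idea concrete, here is a minimal sketch of a fixed-size, word-based splitter. It is not Cognita's implementation: the function name `split_into_chunks`, the word-based sizing and the optional `overlap` parameter are all assumptions made for illustration.

```python
def split_into_chunks(text: str, chunk_size: int = 200, overlap: int = 0) -> list[str]:
    """Split text into chunks of at most `chunk_size` words.

    Consecutive chunks share `overlap` words (an illustrative extra, not
    something the article prescribes).
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current chunk has reached the end of the text,
        # so we never emit a trailing chunk that only repeats overlap words.
        if start + chunk_size >= len(words):
            break
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
print(split_into_chunks(sample, chunk_size=4, overlap=1))
```

Smaller values of `chunk_size` give more focused chunks at the cost of more embeddings to compute and store.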

Currently, we support parsing for Markdown, PDF and text files. More parsers can be easily added under backend/modules/parsers/. Once a parser is added, you need to register it under backend/modules/parsers/__init__.py so that it can be used by the RAG application. To register a parser, add the following:

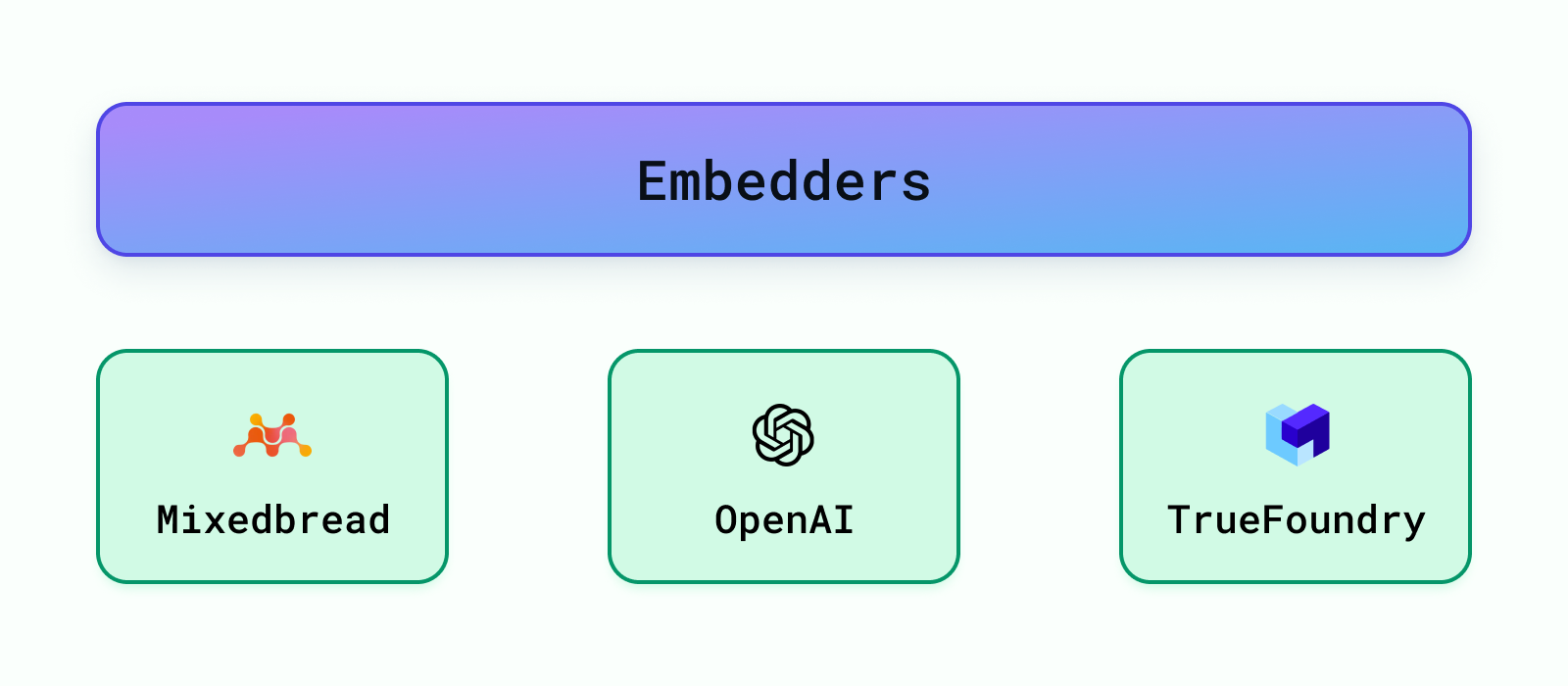

Embedders

Once we've split the data into smaller pieces, we want to find the most important chunks for a specific question. One fast and effective way to do this is by using a pre-trained model (embedding model) to convert our data and the question into special codes called embeddings. Then, we compare the embeddings of each chunk of data to the one for the question. By measuring the cosine similarity between these embeddings, we can figure out which chunks are most closely related to the question, helping us find the best ones to use.
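The comparison described above can be sketched in a few lines of plain Python. The embeddings below are tiny hand-written vectors rather than the output of a real embedding model, and the helper names `cosine_similarity` and `top_k_chunks` are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_chunks(query_emb: list[float], chunk_embs: list[list[float]], k: int = 2) -> list[int]:
    """Return the indices of the k chunks most similar to the query."""
    scored = [(cosine_similarity(query_emb, emb), idx) for idx, emb in enumerate(chunk_embs)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [idx for _, idx in scored[:k]]


# Toy 2-dimensional "embeddings"; real models produce hundreds of dimensions.
query = [1.0, 0.0]
chunks = [[1.0, 0.1], [0.0, 1.0], [0.7, 0.7], [-1.0, 0.0]]
print(top_k_chunks(query, chunks, k=2))
```

In a real pipeline the vectors would come from the same embedding model for both the chunks and the question, since similarities across different models are not meaningful.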

There are many pre-trained models available to embed the data, such as models from OpenAI, Cohere, etc. The popular ones can be discovered through HuggingFace's Massive Text Embedding Benchmark (MTEB) leaderboard. We provide support for OpenAI Embeddings, TrueFoundry Embeddings and also current SOTA embeddings (as of April, 2024) from mixedbread-ai.

More embedders can be easily added under backend/modules/embedder/. Once an embedder is added, you need to register it under backend/modules/embedders/__init__.py so that it can be used by the RAG application.

Note: Remember, embeddings aren't the only method for finding important chunks. We could also use an LLM for this task! However, LLMs are much bigger than embedding models and have a limit on the amount of text they can handle at once. That's why it's smarter to use embeddings to pick the top k chunks first. Then, we can use LLMs on these fewer chunks to figure out the best ones to use as the context to answer our question.

Rerankers

Once the embedding step finds some potential matches, which can be a lot, a reranking step is applied. Reranking makes sure the best results are at the top. As a result, we can choose the top x documents, making our context more concise and the prompt shorter. We provide support for the SOTA reranker (as of April, 2024) from mixedbread-ai, which is implemented under backend/modules/reranker/

VectorDB

Once we create vectors for texts, we store them in a vector database. This database keeps track of these vectors so we can quickly find them later using different methods. Regular databases organize data in tables of rows and columns, whereas vector databases store and retrieve data based on the vectors themselves. This is useful for tasks such as image recognition, language understanding, and recommendation. For example, in a recommendation system, each item you might want to recommend (like a movie or a product) is turned into a vector, with different parts of the vector representing different features of the item, like its genre or price. Similarly, in language applications, each word or document is turned into a vector, with parts of the vector representing features of the word or document, like how often the word is used or what it means. Vector databases are designed to store and search these vectors efficiently. Given a user query, we use a measure of how close two vectors are to find the stored vectors nearest to the query. The most common measures are Euclidean Distance, Cosine Similarity, and Dot Product.
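As a small worked example of the three measures, the sketch below computes each one for a pair of toy vectors using only the standard library. The helper names are hypothetical. It also checks a useful identity: for unit-length vectors, the squared Euclidean distance equals 2 minus twice the dot product, so all three measures rank neighbours in the same order once vectors are normalized.

```python
import math


def dot_product(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def euclidean_distance(a: list[float], b: list[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a: list[float], b: list[float]) -> float:
    return dot_product(a, b) / (math.sqrt(dot_product(a, a)) * math.sqrt(dot_product(b, b)))


def normalize(v: list[float]) -> list[float]:
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(dot_product(v, v))
    return [x / norm for x in v]


query = [3.0, 4.0]
item = [4.0, 3.0]

print(dot_product(query, item))         # larger means more similar
print(euclidean_distance(query, item))  # smaller means more similar
print(cosine_similarity(query, item))   # closer to 1 means more similar

# On unit vectors: ||q - i||^2 == 2 - 2 * (q . i)
q, i = normalize(query), normalize(item)
print(math.isclose(euclidean_distance(q, i) ** 2, 2 - 2 * dot_product(q, i)))
```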

There are various available vector databases in the market, like Qdrant, SingleStore, Weaviate, etc. We currently support Qdrant and SingleStore. Qdrant vector db class is defined under /backend/modules/vector_db/qdrant.py, while SingleStore vector db class is defined under /backend/modules/vector_db/singlestore.py

Other vector dbs can be added too in the vector_db folder and can be registered under /backend/modules/vector_db/__init__.py

To add support for any vector DB in Cognita, a user needs to do the following:

- Create the class that inherits from BaseVectorDB (from backend.modules.vector_db.base import BaseVectorDB) and initialize it with VectorDBConfig (from backend.types import VectorDBConfig)

- Implement the following methods:

  - create_collection: To initialize the collection/project/table in vector db.

  - upsert_documents: To insert the documents in the db.

  - get_collections: Get all the collections present in the db.

  - delete_collection: To delete the collection from the db.

  - get_vector_store: To get the vector store for the given collection.

  - get_vector_client: To get the vector client for the given collection if any.

  - list_data_point_vectors: To list already present vectors in the db that are similar to the documents being inserted.

  - delete_data_point_vectors: To delete the vectors from the db, used to remove old vectors of the updated document.
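The shape of such a class can be sketched with a toy in-memory backend. The `BaseVectorDB` and `VectorDBConfig` defined here are local stand-ins so the example runs on its own. They are not the real Cognita classes: only the method names come from the list above, and every signature, field and return type is an assumption.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class VectorDBConfig:
    """Stand-in for backend.types.VectorDBConfig; fields are assumptions."""
    provider: str
    url: Optional[str] = None


class BaseVectorDB(ABC):
    """Stand-in for the real base class; signatures are assumptions."""

    @abstractmethod
    def create_collection(self, collection_name: str) -> None: ...
    @abstractmethod
    def upsert_documents(self, collection_name: str, documents: Dict[str, List[float]]) -> None: ...
    @abstractmethod
    def get_collections(self) -> List[str]: ...
    @abstractmethod
    def delete_collection(self, collection_name: str) -> None: ...
    @abstractmethod
    def get_vector_store(self, collection_name: str) -> Dict[str, List[float]]: ...
    @abstractmethod
    def get_vector_client(self) -> Optional[object]: ...
    @abstractmethod
    def list_data_point_vectors(self, collection_name: str, data_point_ids: List[str]) -> List[str]: ...
    @abstractmethod
    def delete_data_point_vectors(self, collection_name: str, data_point_ids: List[str]) -> None: ...


class InMemoryVectorDB(BaseVectorDB):
    """Toy backend that keeps {collection: {data_point_id: vector}} in a dict."""

    def __init__(self, config: VectorDBConfig):
        self.config = config
        self._collections: Dict[str, Dict[str, List[float]]] = {}

    def create_collection(self, collection_name):
        self._collections.setdefault(collection_name, {})

    def upsert_documents(self, collection_name, documents):
        # Insert new vectors and overwrite existing ones with the same id.
        self._collections[collection_name].update(documents)

    def get_collections(self):
        return list(self._collections)

    def delete_collection(self, collection_name):
        self._collections.pop(collection_name, None)

    def get_vector_store(self, collection_name):
        return self._collections[collection_name]

    def get_vector_client(self):
        return None  # an in-memory store has no external client

    def list_data_point_vectors(self, collection_name, data_point_ids):
        # Report which of the given ids already have a stored vector.
        store = self._collections[collection_name]
        return [dp_id for dp_id in data_point_ids if dp_id in store]

    def delete_data_point_vectors(self, collection_name, data_point_ids):
        store = self._collections[collection_name]
        for dp_id in data_point_ids:
            store.pop(dp_id, None)
```

A real integration would replace the dictionary with calls to the database's client library, while keeping the same set of methods so the rest of the application does not need to change.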

We now show how to add a new vector db to the RAG system, taking both Qdrant and SingleStore as examples.

Qdrant Integration

Qdrant is an Open-Source Vector Database and Vector Search Engine written in Rust. It provides fast and scalable vector similarity search service with convenient API. To add Qdrant vector db to the RAG system, follow the below steps:

In the .env file, you can add one of the following:

VECTOR_DB_CONFIG = '{"url": "<url_here>", "provider": "qdrant"}' # Qdrant URL for deployed instance

VECTOR_DB_CONFIG='{"provider":"qdrant","local":"true"}' # For local file based Qdrant instance without docker
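Because the value of VECTOR_DB_CONFIG is a JSON string, an application can decode it with the standard library. The sketch below shows one way this could be done. It is not necessarily how Cognita reads the variable, and the function name `load_vector_db_config` is hypothetical.

```python
import json
import os
from typing import Mapping, Optional


def load_vector_db_config(env: Optional[Mapping[str, str]] = None) -> dict:
    """Decode the JSON stored in VECTOR_DB_CONFIG and check it names a provider."""
    env = os.environ if env is None else env
    raw = env.get("VECTOR_DB_CONFIG")
    if raw is None:
        raise KeyError("VECTOR_DB_CONFIG is not set")
    config = json.loads(raw)
    if "provider" not in config:
        raise ValueError("VECTOR_DB_CONFIG must contain a 'provider' key")
    return config


# Passing a plain dict instead of os.environ keeps the example self-contained.
example_env = {"VECTOR_DB_CONFIG": '{"provider": "qdrant", "local": "true"}'}
print(load_vector_db_config(example_env))
```

Note that in the local example the `local` flag arrives as the string "true" rather than a JSON boolean, so any code consuming it has to compare against the string.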

- Create a new class QdrantVectorDB in backend/modules/vector_db/qdrant.py that inherits from BaseVectorDB and initialize it with VectorDBConfig

- Override the create_collection method to create a collection in Qdrant

- Override the upsert_documents method to insert the documents in the db

- Override the get_collections method to get all the collections present in the db

- Override the delete_collection method to delete the collection from the db

- Override the get_vector_store method to get the vector store for the given collection

- Override the get_vector_client method to get the vector client for the given collection if any

- Override the list_data_point_vectors method to list already present vectors in the db that are similar to the documents being inserted

- Override the delete_data_point_vectors method to delete the vectors from the db, used to remove old vectors of the updated document

SingleStore Integration

SingleStore offers powerful vector database functionality perfectly suited for AI-based applications, chatbots, image recognition and more, eliminating the need for you to run a speciality vector database solely for your vector workloads. Unlike traditional vector databases, SingleStore stores vector data in relational tables alongside other types of data. Co-locating vector data with related data allows you to easily query extended metadata and other attributes of your vector data with the full power of SQL.

SingleStore provides a free tier for developers to get started with their vector database. You can sign up for a free account here. Upon signup, go to Cloud -> workspace -> Create User. Use the credentials to connect to the SingleStore instance.

In the .env file, you can add the following:

VECTOR_DB_CONFIG = '{"url": "<url_here>", "provider": "singlestore"}' # url: mysql://{user}:{password}@{host}:{port}/{db}

To add SingleStore vector db to the RAG system, follow the below steps:

- We want to add extra columns to the table to store the vector id, so we override SingleStoreDB.

- Create a new class SingleStoreVectorDB in backend/modules/vector_db/singlestore.py that inherits from BaseVectorDB and initialize it with VectorDBConfig

- Override the create_collection method to create a collection in SingleStore

- Override the upsert_documents method to insert the documents in the db

- Override the get_collections method to get all the collections present in the db

- Override the delete_collection method to delete the collection from the db

- Override the get_vector_store method to get the vector store for the given collection

- Override the get_vector_client method to get the vector client for the given collection if any

- Override the list_data_point_vectors method to list already present vectors in the db that are similar to the documents being inserted

- Override the delete_data_point_vectors method to delete the vectors from the db, used to remove old vectors of the updated document

Metadata Store

This contains the necessary configurations that uniquely define a project or RAG app. A RAG app may contain a set of documents combined from one or more data sources, which we term a collection. The documents from these data sources are indexed into the vector db using data loading + parsing + embedding methods. For each RAG use-case, the metadata store contains:

- Name of the collection

- Name of the associated Vector DB used

- Linked Data Sources

- Parsing Configuration for each data source

- Embedding Model and its configuration to be used

We currently define two ways to store this data: one locally and the other using TrueFoundry. These stores are defined under backend/modules/metadata_store/
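To picture what one entry in the metadata store holds, here is a minimal sketch using dataclasses. The class and field names are hypothetical and only mirror the five items listed above. They are not Cognita's actual schema.

```python
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class LinkedDataSource:
    """A data source attached to a collection, with its own parsing configuration."""
    uri: str
    parser_config: Dict[str, object] = field(default_factory=dict)


@dataclass
class CollectionMetadata:
    """Everything needed to identify one RAG collection (illustrative fields)."""
    name: str
    vector_db: str
    embedder_model: str
    embedder_config: Dict[str, object] = field(default_factory=dict)
    data_sources: List[LinkedDataSource] = field(default_factory=list)


collection = CollectionMetadata(
    name="product-docs",
    vector_db="qdrant",
    embedder_model="example-embedding-model",  # placeholder model name
    embedder_config={"batch_size": 32},
    data_sources=[LinkedDataSource(uri="local://docs", parser_config={"chunk_size": 200})],
)

# asdict() gives a plain dictionary that a local or remote store could persist.
print(asdict(collection))
```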

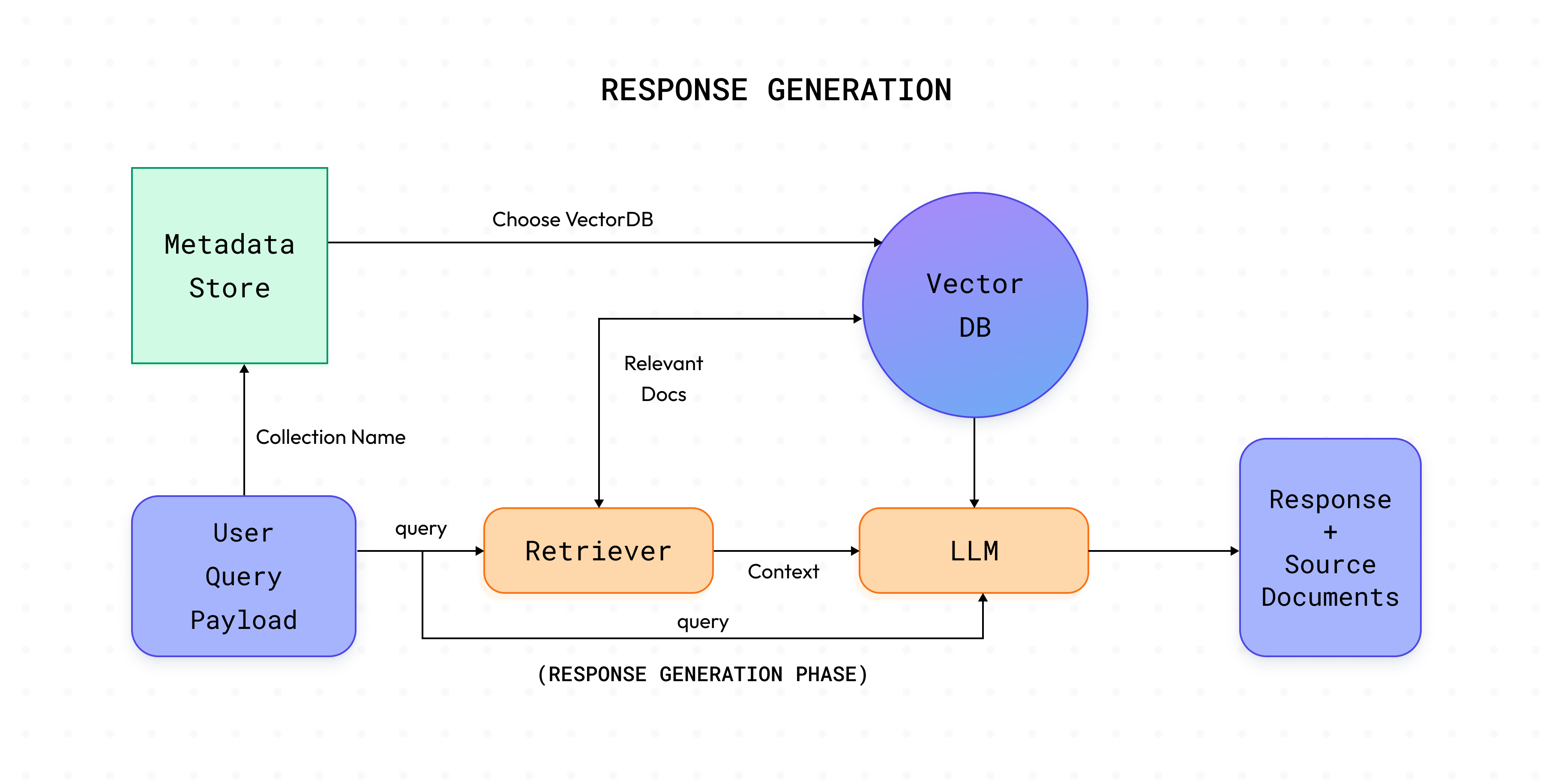

Query Controllers

Once the data is indexed and stored in the vector db, it's time to combine all the parts together to use our app. Query Controllers do just that! They help us retrieve the answer for the corresponding user query. The typical steps of a query controller are as follows:

- The user sends a request payload that contains the query, collection name, LLM configuration, prompt, retriever and its configuration.

- Based on the collection name, the relevant vector db is fetched with its configuration, such as the embedder used, vector db type, etc.

- Based on the query, relevant docs are retrieved using the retriever from the vector db.

- The fetched documents form the context and, along with the query (a.k.a. the question), are given to the LLM to generate the answer. This step can also involve prompt tuning.

- If required, along with the generated answer, relevant document chunks are also returned in the response.
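The steps above can be sketched end to end with stand-ins for the retriever and the LLM. Everything here is hypothetical: `QueryRequest`, `keyword_retriever` and `answer` are illustrative names, the retriever ranks by shared words instead of embeddings, and the LLM is any callable that maps a prompt to text.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class QueryRequest:
    """Request payload: query, collection name, prompt and retriever settings."""
    query: str
    collection_name: str
    prompt_template: str
    top_k: int = 2


def keyword_retriever(query: str, chunks: List[str], top_k: int) -> List[str]:
    """Toy retriever: rank chunks by how many lowercase words they share with the query."""
    query_words = set(query.lower().split())
    ranked = sorted(chunks, key=lambda c: len(query_words & set(c.lower().split())), reverse=True)
    return ranked[:top_k]


def answer(request: QueryRequest, collections: Dict[str, List[str]], llm: Callable[[str], str]) -> dict:
    # 1. Look up the collection named in the request.
    chunks = collections[request.collection_name]
    # 2. Retrieve the chunks most relevant to the query.
    docs = keyword_retriever(request.query, chunks, request.top_k)
    # 3. Build the prompt from the context and the question, then call the LLM.
    prompt = request.prompt_template.format(context="\n".join(docs), question=request.query)
    # 4. Return the answer together with the supporting chunks.
    return {"answer": llm(prompt), "docs": docs}


collections = {
    "demo": [
        "Cognita is a RAG framework",
        "Qdrant is a vector database",
        "Bananas are yellow",
    ]
}
request = QueryRequest(
    query="what is cognita",
    collection_name="demo",
    prompt_template="Context:\n{context}\n\nQuestion: {question}",
)
# The lambda stands in for a real LLM call.
print(answer(request, collections, llm=lambda prompt: f"(stub answer from {len(prompt)} prompt characters)"))
```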

Note: In case of agents the intermediate steps can also be streamed. It is up to the specific app to decide.

Query controller methods can be directly exposed as an API, by adding http decorators to the respective functions.

To add your own query controller perform the following steps:

- Each RAG use-case should typically have a separate folder that contains the query controller. Assume our folder is app-2. Hence we shall write our controller under /backend/modules/query_controller/app-2/controller.py

- Add the query_controller decorator to your class and pass the name of your custom controller as argument

- Add methods to this controller as per your needs and use our http decorators like post, get, delete to make your methods an API

- Import your custom controller class at backend/modules/query_controllers/__init__.py

A sample query controller is written under /backend/modules/query_controller/example/controller.py. Refer to it for a better understanding.
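To illustrate how decorator-based registration of this kind can work in general, the sketch below defines its own minimal `query_controller` and `post` decorators. These are local stand-ins written for this example only. They share names with the decorators mentioned above but are not Cognita's implementation, and the registry and `dispatch` helper are hypothetical.

```python
from typing import Callable, Dict, Tuple

# Maps controller name -> (controller class, {(HTTP method, full path): method name})
CONTROLLER_REGISTRY: Dict[str, Tuple[type, Dict[Tuple[str, str], str]]] = {}


def post(path: str) -> Callable:
    """Mark a method as handling POST requests on `path` (stand-in decorator)."""
    def decorator(func: Callable) -> Callable:
        func._route = ("POST", path)
        return func
    return decorator


def query_controller(name: str) -> Callable:
    """Register a class and its route-marked methods under `name` (stand-in decorator)."""
    def decorator(cls: type) -> type:
        routes = {}
        for attr_name, attr in vars(cls).items():
            route = getattr(attr, "_route", None)
            if route is not None:
                method, path = route
                routes[(method, f"{name}{path}")] = attr_name
        CONTROLLER_REGISTRY[name] = (cls, routes)
        return cls
    return decorator


@query_controller("/app-2")
class App2Controller:
    @post("/answer")
    def answer(self, query: str) -> dict:
        return {"answer": f"echo: {query}"}


def dispatch(method: str, full_path: str, **kwargs) -> dict:
    """Find the registered handler for a request and call it."""
    for cls, routes in CONTROLLER_REGISTRY.values():
        attr_name = routes.get((method, full_path))
        if attr_name is not None:
            return getattr(cls(), attr_name)(**kwargs)
    raise LookupError(f"no handler for {method} {full_path}")


print(dispatch("POST", "/app-2/answer", query="hello"))
```

The appeal of this pattern is that adding a new use-case only requires writing a new decorated class and importing it. The routing code itself stays untouched.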

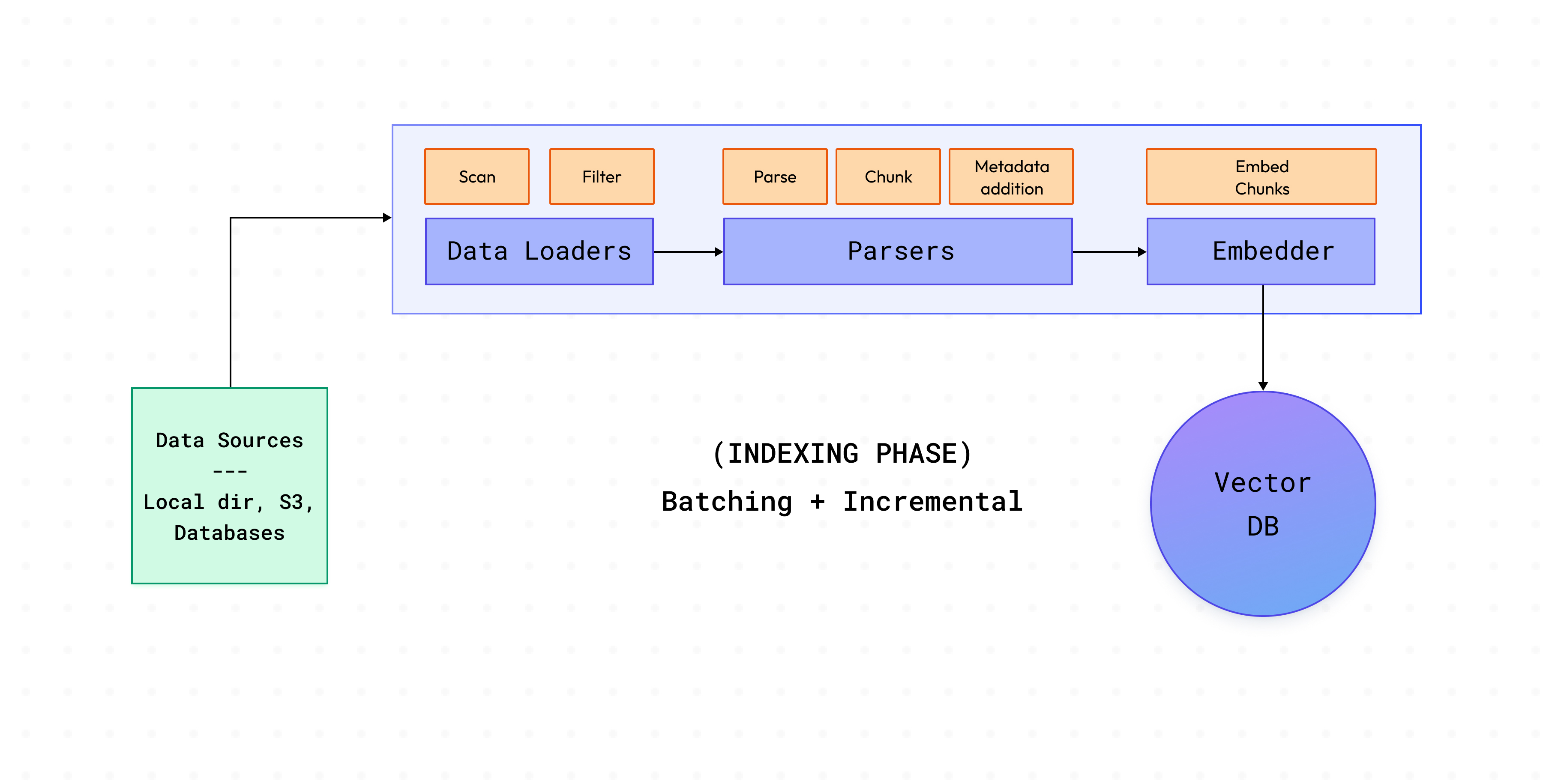

Cognita - Process Flow

A typical Cognita process consists of two phases:

- Data indexing

- Response Generation

Data Indexing

This phase involves loading data from sources, parsing the documents present in these sources and indexing them in the vector db. To handle large quantities of documents encountered in production, Cognita takes this a step further.

- Cognita groups documents in batches, rather than indexing them all together.

- Cognita computes and keeps track of each document's hash, so that whenever new documents are added to the data source, only those documents are indexed rather than the complete data source. This saves considerable time and compute.

- This mode of indexing is referred to as INCREMENTAL indexing. Cognita also supports another mode, FULL indexing, which re-ingests the data into the vector db irrespective of any vector data already present for the given collection.
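The idea behind hash-based incremental indexing and batching can be sketched as follows. This is an illustration under assumptions, not Cognita's code: the function names are hypothetical, SHA-256 is one possible choice of hash, and documents are represented as a simple mapping from id to bytes.

```python
import hashlib
from typing import Dict, List


def document_hash(content: bytes) -> str:
    """Fingerprint a document's content (SHA-256 chosen for illustration)."""
    return hashlib.sha256(content).hexdigest()


def select_documents_to_index(
    documents: Dict[str, bytes],
    indexed_hashes: Dict[str, str],
    mode: str = "INCREMENTAL",
) -> List[str]:
    """Return ids of documents to (re)index.

    FULL: everything. INCREMENTAL: only documents that are new or whose
    content hash differs from the one recorded at the last indexing run.
    """
    if mode == "FULL":
        return sorted(documents)
    if mode != "INCREMENTAL":
        raise ValueError(f"unknown indexing mode: {mode}")
    return sorted(
        doc_id
        for doc_id, content in documents.items()
        if indexed_hashes.get(doc_id) != document_hash(content)
    )


def batched(items: List[str], batch_size: int) -> List[List[str]]:
    """Group ids into batches so documents are not all indexed at once."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


docs = {"a.md": b"alpha", "b.md": b"beta v2", "c.md": b"gamma"}
already_indexed = {"a.md": document_hash(b"alpha"), "b.md": document_hash(b"beta v1")}

print(select_documents_to_index(docs, already_indexed))          # changed and new documents only
print(batched(select_documents_to_index(docs, {}, "FULL"), 2))   # everything, in batches of 2
```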

Response Generation

Response generation phase makes a call to the /answer endpoint of your defined QueryController and generates the response for the requested query.

Using Cognita UI

The following steps showcase how to use the Cognita UI to query documents:

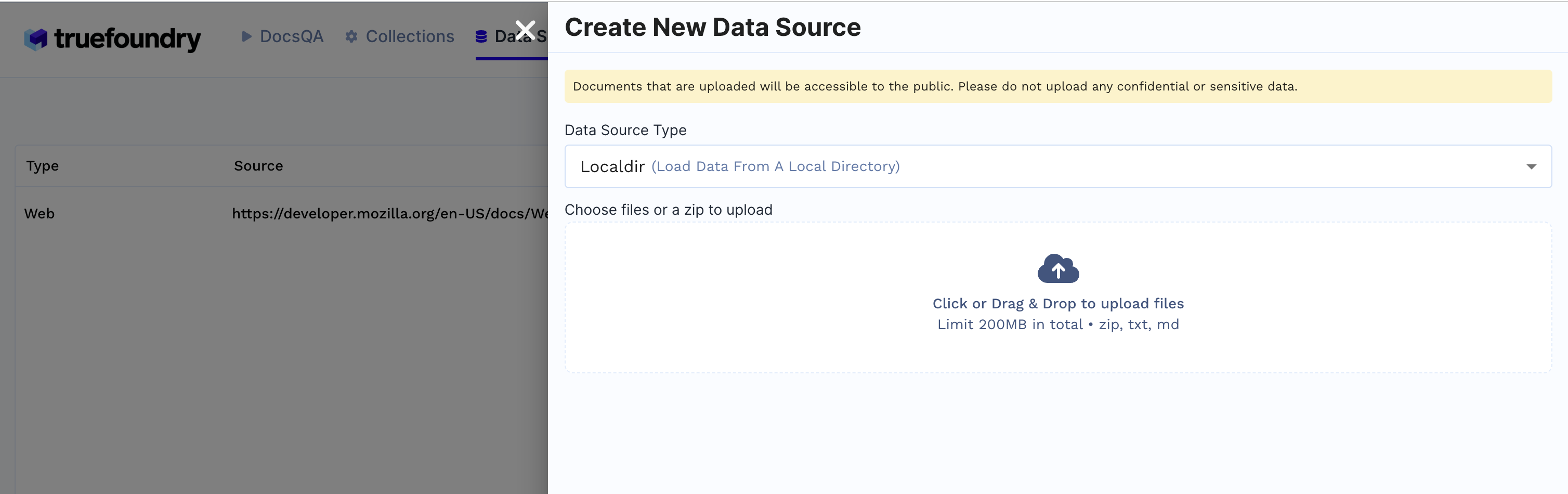

1. Create Data Source

- Click on the Data Sources tab

- Click + New Datasource

- Data source type can be either files from a local directory, a web URL, a GitHub URL or a TrueFoundry artifact FQN.

- E.g.: If Localdir is selected, upload files from your machine and click Submit.

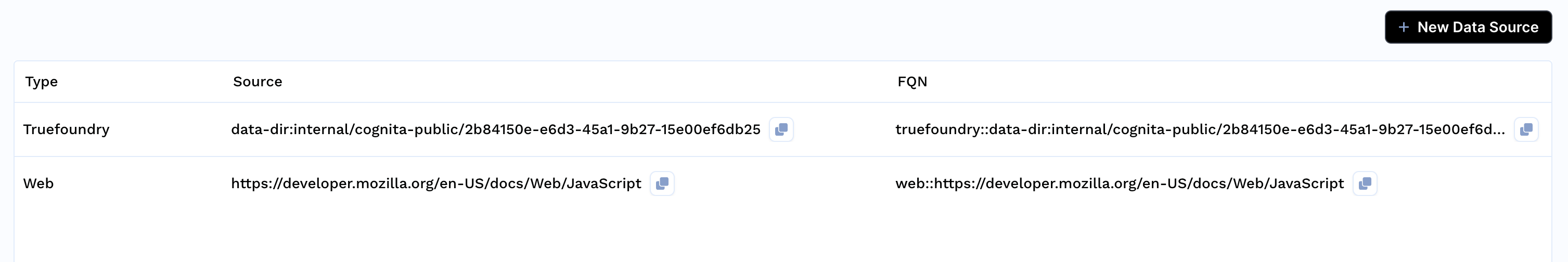

- Created Data sources list will be available in the Data Sources tab.

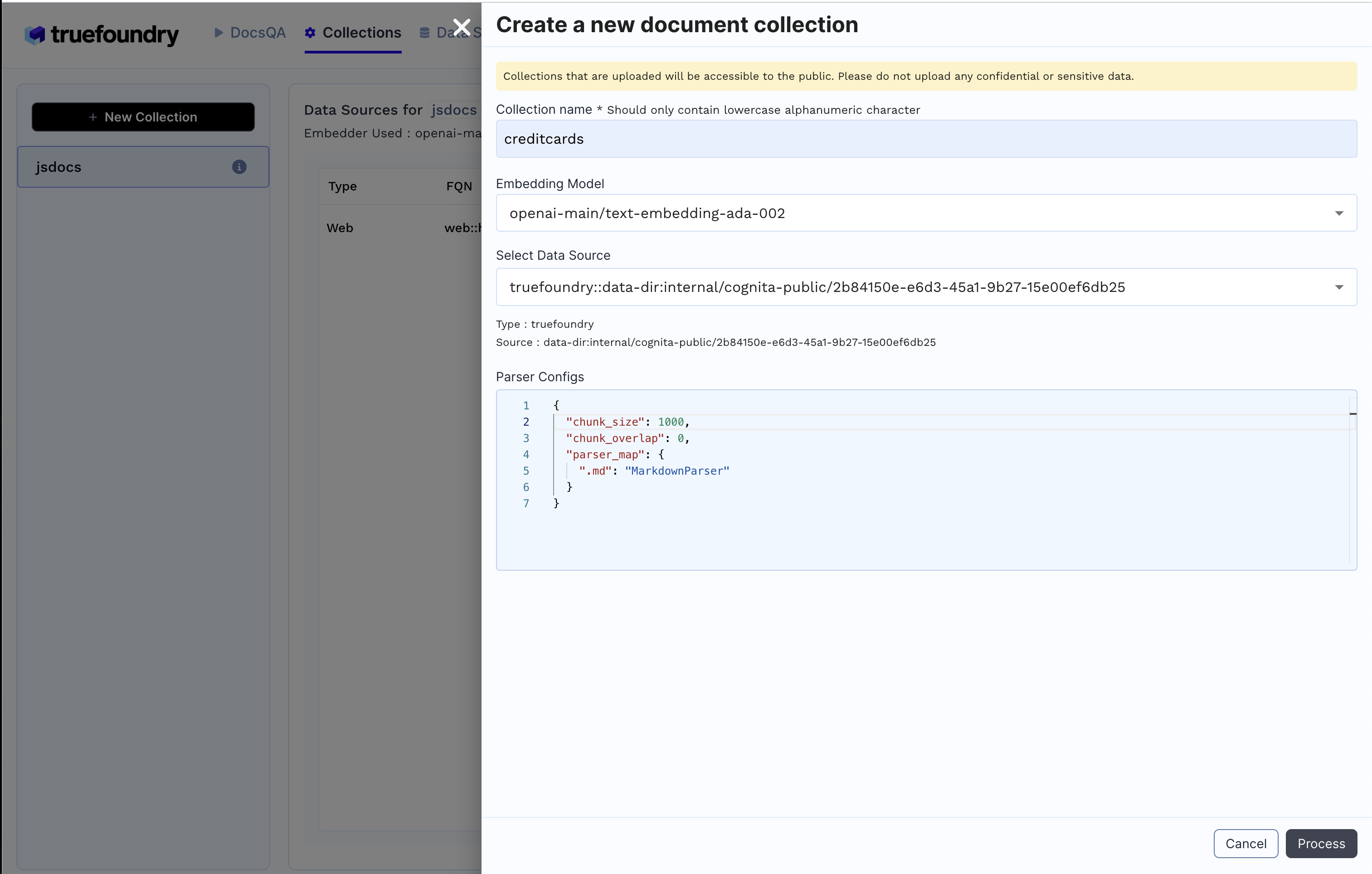

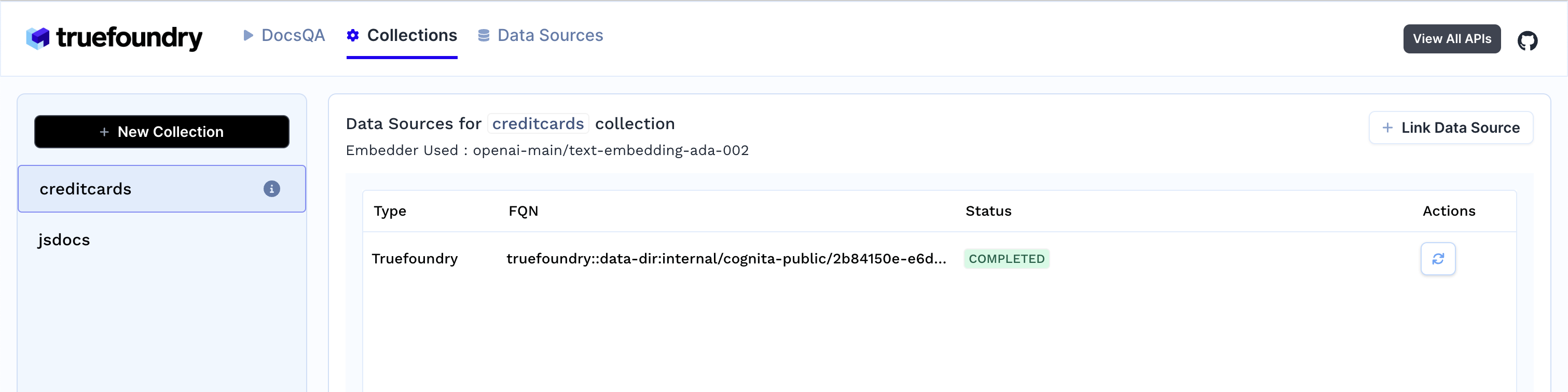

2. Create Collection

- Click on the Collections tab

- Click + New Collection

- Enter Collection Name

- Select Embedding Model

- Add earlier created data source and the necessary configuration

- Click Process to create the collection and index the data.

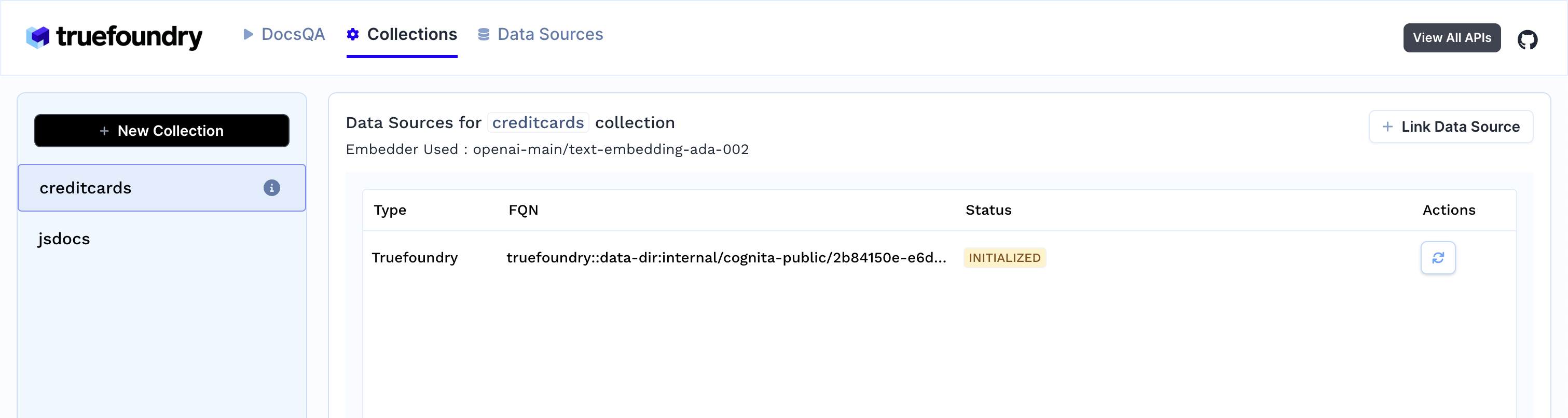

3. As soon as you create the collection, data ingestion begins. You can view its status by selecting your collection in the Collections tab. You can also add additional data sources later on and index them in the collection.

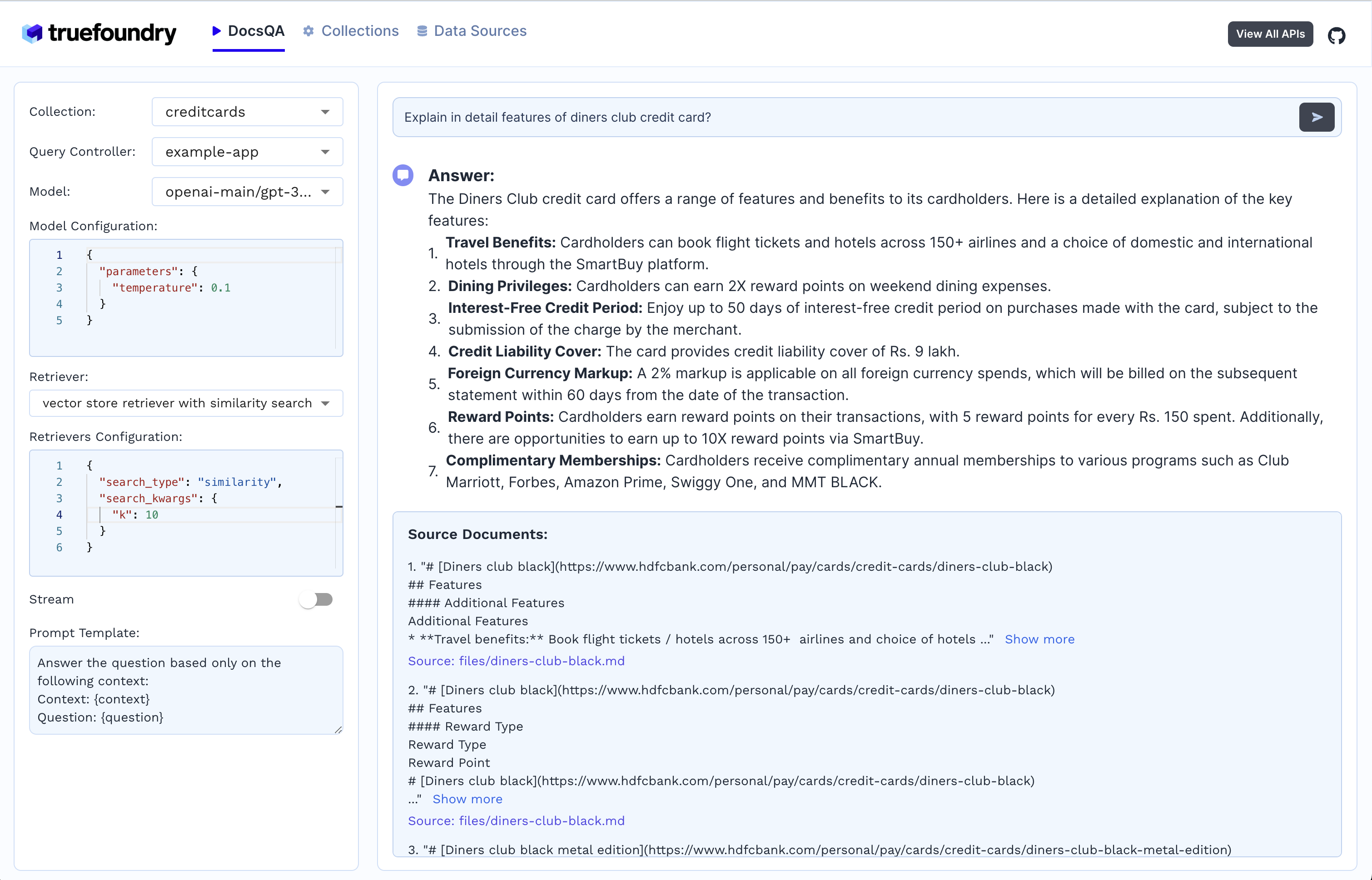

4. Response generation

- Select the collection

- Select the LLM and its configuration

- Select the document retriever

- Write the prompt or use the default prompt

- Ask the query

