What Is Similarity Search & How Does It Work?

Introduction

In today’s data-driven world, searching through vast amounts of data to find similar items is a fundamental operation used in various applications, from databases to search engines and recommendation systems. This process, known as similarity search, involves identifying items that are alike based on certain criteria.

While traditional database searches based on fixed numeric criteria (like finding employees within a specific salary range) are straightforward, similarity search tackles more complex queries. For instance, a user might search for “shoes”, “black shoes”, or a specific model like “Nike AF-1 LV8”. These queries can be vague and varied, requiring the system to understand and differentiate between concepts such as different types of shoes.

Importance and Applications

Similarity search is crucial in many fields, including:

- E-commerce: Recommending products similar to what a user has viewed or purchased.

- Image and Video Search: Finding visually similar images or videos in large databases.

- Natural Language Processing: Matching similar text documents, emails, or articles.

- Healthcare: Identifying similar medical cases or genetic sequences.

The key challenge in similarity search is handling large-scale data while accurately capturing the deeper conceptual meaning of the items being searched. Traditional databases, which rely on symbolic object representations, fall short in such scenarios. Instead, we need techniques that work with semantic representations of data and still search efficiently at scale.

By leveraging similarity search, we can transform complex, abstract queries into actionable insights, making it a powerful tool in various domains. In the following sections, we will delve into how similarity search works, focusing on the role of vector representations, distance metrics, and different search algorithms.

Vector Representations

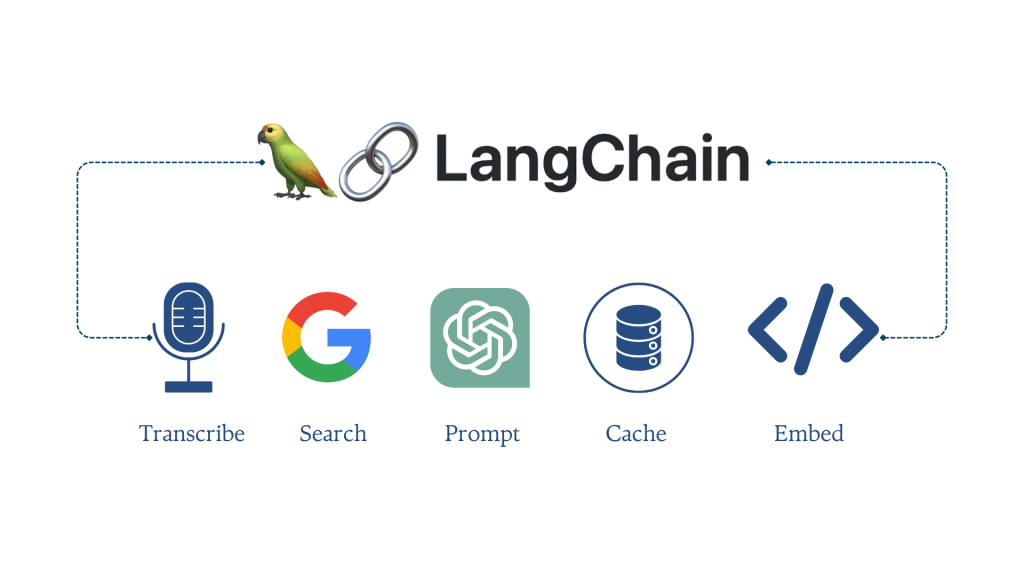

What are Vector Embeddings?

In machine learning, we represent real-world objects and concepts as vectors, which are sets of continuous numbers known as embeddings. This approach allows us to capture the deeper semantic meanings of items. When objects like images or text are converted into vector embeddings, their similarity can be assessed by measuring the distance between these vectors in a high-dimensional space.

For example, in a vector space, similar images will have vectors that are close to each other, while dissimilar images will be farther apart. This makes it possible to perform mathematical operations to find and compare similar items efficiently.
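To make "close in vector space" concrete, here is a toy sketch. The three vectors are hypothetical, hand-picked values, not output from a real embedding model (real embeddings typically have hundreds of dimensions). The standard library's `math.dist` (Python 3.8+) computes the Euclidean distance between two vectors.

```python
import math

# Hypothetical, hand-picked 3-dimensional vectors standing in for real embeddings.
embeddings = {
    "black shoes": [0.90, 0.80, 0.10],
    "running shoes": [0.85, 0.75, 0.20],
    "laptop": [0.10, 0.20, 0.95],
}

query = embeddings["black shoes"]

# Rank every item by its Euclidean distance to the query (smaller = more similar).
ranked = sorted(embeddings, key=lambda name: math.dist(query, embeddings[name]))
print(ranked)  # ['black shoes', 'running shoes', 'laptop']
```

The two shoe vectors sit about 0.12 apart, while the laptop is more than 1.3 away, so a nearest-neighbor lookup for "black shoes" returns "running shoes" before "laptop".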

Examples of Embedding Models

Several models are used to generate these vector embeddings:

- Word2Vec: Transforms words into vectors, capturing their semantic relationships.

- GloVe (Global Vectors for Word Representation): Another word-embedding model, learned from global word co-occurrence statistics across a corpus.

- Universal Sentence Encoder (USE): Creates embeddings for entire sentences, capturing the meaning beyond individual words.

- Convolutional Neural Networks (CNNs) like VGG: Used to generate embeddings for images, capturing visual similarities.

These models are trained on large datasets and tasks, enabling them to produce embeddings that effectively represent the items’ semantic content.

Measuring Similarity: Distance Metrics

Overview of Distance Metrics

To determine how similar two vector embeddings are, we use distance metrics. These metrics calculate the “distance” between vectors in the vector space, with smaller distances indicating greater similarity.

Euclidean Distance

Euclidean distance measures the straight-line distance between two points in a high-dimensional space. It is the most intuitive way of measuring distance, akin to the geometric distance you might measure with a ruler. It’s useful when the data is dense, and the concept of physical distance is relevant.

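Formula:

$$d(\mathbf{x}, \mathbf{y}) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2}$$

where $\mathbf{x}$ and $\mathbf{y}$ are $n$-dimensional vectors and $x_i$, $y_i$ are their $i$-th coordinates.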
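The Euclidean formula translates directly into code. This is a minimal pure-Python sketch; the function name `euclidean_distance` is our own, and in practice you would use a vectorized library or the standard library's `math.dist`, which computes the same value.

```python
import math
from typing import Sequence


def euclidean_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """L2 distance: square root of the summed squared coordinate differences."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


print(euclidean_distance([0.0, 0.0], [3.0, 4.0]))  # 5.0
```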

Manhattan Distance

Also known as L1 distance, Manhattan distance sums the absolute differences between the coordinates of two vectors. This metric suits grid-like data and can be visualized as the total “city block” distance one would travel between two points on a grid.

Formula:

$$d(\mathbf{x}, \mathbf{y}) = \sum_{i=1}^{n} \lvert x_i - y_i \rvert$$
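A matching sketch for Manhattan distance, again in plain Python with a function name (`manhattan_distance`) chosen for illustration:

```python
from typing import Sequence


def manhattan_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """L1 distance: sum of absolute coordinate differences."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return sum(abs(x - y) for x, y in zip(a, b))


print(manhattan_distance([0, 0], [3, 4]))  # 7
```

For the same pair of points, (0, 0) and (3, 4), Euclidean distance is 5 while Manhattan distance is 7, because the city-block route cannot cut diagonally.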

Cosine Similarity

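Cosine similarity measures the cosine of the angle between two vectors, focusing on their direction rather than their magnitude. Unlike the distances above, it is a similarity score: it ranges from -1 to 1, and higher values mean more similar vectors. This is particularly useful for text data, where a vector's magnitude (driven, for example, by document length or raw word counts) can vary widely while its direction (the relative pattern of word usage) carries the meaning.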
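Formally, for vectors $\mathbf{x}$ and $\mathbf{y}$:

$$\cos(\mathbf{x}, \mathbf{y}) = \frac{\mathbf{x} \cdot \mathbf{y}}{\lVert \mathbf{x} \rVert \, \lVert \mathbf{y} \rVert}$$

Because search systems usually sort by a distance, a common convention is to define cosine distance as 1 minus the cosine similarity. The sketch below implements both in plain Python; the function names are illustrative, and raising an error for zero vectors (where the formula divides by zero) is one design choice among several.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product divided by the product of the vectors' L2 norms."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Turn the similarity into a distance: 0 for identical directions, 2 for opposite ones."""
    return 1.0 - cosine_similarity(a, b)


print(cosine_similarity([1, 0], [0, 1]))  # 0.0 (orthogonal vectors)
print(cosine_similarity([1, 2], [2, 4]))  # close to 1.0 (same direction, different length)
```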

Chebyshev Distance

Chebyshev distance is the largest absolute difference between two vectors along any single coordinate. It fits chess-like grid scenarios: a king can move one square in any direction, including diagonally, so the number of moves between two squares equals their Chebyshev distance.
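Chebyshev distance can be written as

$$d(\mathbf{x}, \mathbf{y}) = \max_{i} \lvert x_i - y_i \rvert$$

and is a one-liner in Python. The function name below is our own choice for illustration.

```python
from typing import Sequence


def chebyshev_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """L-infinity distance: the largest absolute difference over all coordinates."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return max(abs(x - y) for x, y in zip(a, b))


# A king needs 5 moves to go from square (0, 0) to (3, 5):
# 3 diagonal steps cover both axes at once, then 2 more straight steps.
print(chebyshev_distance((0, 0), (3, 5)))  # 5
```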

Choosing the Right Metric

Choosing the right distance metric depends on the specific characteristics and requirements of the application. Here are some guidelines for selecting the appropriate metric:

Euclidean Distance

- Use Case: Best for dense, continuous data where the concept of geometric distance is relevant.

- Advantages: Simple to calculate and interpret; works well in low-dimensional spaces.

- Disadvantages: Can be less effective in high-dimensional spaces due to the curse of dimensionality.

- Examples: Image similarity, physical distance calculations.
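The curse-of-dimensionality point can be illustrated with a small simulation. The sketch assumes uniformly random points in the unit hypercube, which real embeddings are not, so treat it as an illustration of the trend rather than a benchmark. `distance_contrast` is a hypothetical helper that measures the relative contrast (farthest minus nearest distance, divided by the nearest distance) from one random query to 200 random points.

```python
import math
import random


def distance_contrast(dim: int, n_points: int = 200, seed: int = 0) -> float:
    """(max - min) / min Euclidean distance from a random query to random points in [0, 1]^dim."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = [
        math.dist(query, [rng.random() for _ in range(dim)])
        for _ in range(n_points)
    ]
    return (max(dists) - min(dists)) / min(dists)


for dim in (2, 10, 100, 1000):
    print(dim, round(distance_contrast(dim), 3))
```

As the dimension grows, the contrast shrinks: the nearest and farthest points end up at similar distances from the query, which makes raw Euclidean distance less discriminative.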

Manhattan Distance

- Use Case: Suitable for grid-like data structures and scenarios where movement is restricted to orthogonal directions.

- Advantages: More robust to outliers than Euclidean distance in some cases.

- Disadvantages: Less intuitive for non-grid data; can be sensitive to feature scaling.

- Examples: Pathfinding algorithms (like A* in grids), urban planning.

Cosine Similarity

- Use Case: Ideal for text data and high-dimensional sparse data where direction is more important than magnitude.

- Advantages: Effective in capturing the orientation of vectors; not affected by the magnitude of the vectors.

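- Disadvantages: Ignores vector magnitude, which discards useful information when magnitude is meaningful; undefined for zero vectors. (For L2-normalized vectors it reduces to a plain dot product, which is why many search libraries expect normalized inputs.)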

- Examples: Document similarity, recommendation systems for text-based data.
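The difference between direction and magnitude shows up clearly with term-count vectors. In this sketch (hypothetical counts over a three-word vocabulary), `long_doc` uses words in the same proportions as `short_doc` but is ten times longer. Euclidean distance treats it as far away, while cosine similarity treats it as a near-perfect match. The last lines check a standard identity: for L2-normalized vectors, squared Euclidean distance equals 2 - 2 * cosine similarity, so both metrics rank neighbors identically.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def l2_normalize(v: Sequence[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


# Hypothetical term counts over a 3-word vocabulary.
short_doc = [1, 2, 0]
long_doc = [10, 20, 0]  # same word proportions, ten times longer
other_doc = [2, 1, 0]   # similar length, different proportions

for name, doc in [("long_doc", long_doc), ("other_doc", other_doc)]:
    print(name,
          f"euclidean={math.dist(short_doc, doc):.3f}",
          f"cosine={cosine_similarity(short_doc, doc):.3f}")

# After normalization, squared Euclidean distance and 2 - 2 * cosine agree.
u, v = l2_normalize(short_doc), l2_normalize(other_doc)
print(math.dist(u, v) ** 2, 2 - 2 * cosine_similarity(short_doc, other_doc))
```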

Chebyshev Distance

- Use Case: Useful in scenarios where the maximum coordinate difference is critical, such as in certain board games.

- Advantages: Simple to compute; can be used in grid-based pathfinding where diagonal movement is allowed.

- Disadvantages: Less common in natural datasets; can be less intuitive for continuous data.

- Examples: Chess algorithms, robotics navigation in grid environments.

Performing Similarity Search

K-Nearest Neighbors (k-NN)

K-Nearest Neighbors (k-NN) is a popular algorithm used to find the closest vectors to a given query vector. Here’s how it works and its pros and cons:

- How it Works: The algorithm calculates the distance between the query vector and all vectors in the dataset. It then selects the ‘k’ nearest vectors (neighbors) based on the specified distance metric (Euclidean, Manhattan, etc.).

- Advantages: Simple to implement and understand; no need for a model training phase.

- Disadvantages: Computationally expensive for large datasets since it involves calculating the distance to every vector.

- Use Cases: Suitable for smaller datasets where exact nearest neighbors are needed, such as in recommendation systems for small user bases.
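The brute-force procedure described above fits in a few lines. This sketch assumes the dataset is a plain Python list of equal-length vectors; `knn_search` and its `metric` parameter are names chosen for illustration. `heapq.nsmallest` keeps only the best k candidates, but every vector is still scored, so each query costs O(N·d) for N vectors of dimension d.

```python
import heapq
import math
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]


def knn_search(
    query: Vector,
    dataset: Sequence[Vector],
    k: int,
    metric: Callable[[Vector, Vector], float] = math.dist,
) -> List[Tuple[float, int]]:
    """Exact k-NN: score every vector and return the k (distance, index) pairs with smallest distance."""
    scored = ((metric(query, vec), idx) for idx, vec in enumerate(dataset))
    return heapq.nsmallest(k, scored)


dataset = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [0.5, 0.2], [9.0, 9.0]]
print(knn_search([0.0, 0.0], dataset, k=2))  # nearest are indices 0 and 3
```

Passing a Manhattan or Chebyshev function as `metric` changes which neighbors count as nearest without changing the search logic.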

Approximate Nearest Neighbor (ANN)

To address the inefficiency of k-NN with large datasets, Approximate Nearest Neighbor (ANN) methods provide a faster, albeit less precise, alternative. ANN algorithms aim to find a “good guess” of the nearest neighbors, trading off some accuracy for speed.

- Indexing Techniques: ANN algorithms use indexing structures like KD-Trees, Ball Trees, and VP-Trees to partition the vector space and narrow down the search area.

- Hashing Methods: Algorithms like Locality-Sensitive Hashing (LSH) map similar vectors to the same buckets with high probability, so a query only needs to be compared against a small part of the dataset.

- Clustering: Methods like k-means clustering group vectors, allowing the search to be conducted within a cluster rather than the entire dataset.

- Advantages: Significantly faster than exact k-NN for large datasets; scalable to billions of vectors.

- Disadvantages: May not return the exact nearest neighbors; result quality depends on how the speed/accuracy trade-off is tuned.

- Use Cases: Web search engines, large-scale recommendation systems, real-time similarity search applications.
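To show how hashing narrows the search, here is a minimal sketch of random-hyperplane LSH, one LSH family suited to cosine similarity. The class name and its parameters (`n_planes`, `seed`) are illustrative assumptions, not any library's API. Each vector gets one bit per random hyperplane (which side of the plane it falls on), and only vectors whose bit patterns match the query's are scored exactly.

```python
import math
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


class RandomHyperplaneLSH:
    """Single-table random-hyperplane LSH (a sketch, not a production index)."""

    def __init__(self, dim: int, n_planes: int = 8, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Each hyperplane is defined by a random Gaussian normal vector.
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_planes)]
        self.buckets: Dict[Tuple[int, ...], List[Tuple[int, Sequence[float]]]] = defaultdict(list)

    def _hash(self, vec: Sequence[float]) -> Tuple[int, ...]:
        # One bit per hyperplane: 1 if the vector lies on its positive side.
        return tuple(int(sum(p * x for p, x in zip(plane, vec)) >= 0.0) for plane in self.planes)

    def add(self, idx: int, vec: Sequence[float]) -> None:
        self.buckets[self._hash(vec)].append((idx, vec))

    def candidates(self, q: Sequence[float]) -> List[Tuple[int, Sequence[float]]]:
        # Only the query's bucket is searched, not the whole dataset.
        return self.buckets.get(self._hash(q), [])

    def query(self, q: Sequence[float], k: int) -> List[Tuple[float, int]]:
        # Re-rank the candidates exactly (here with Euclidean distance).
        return sorted((math.dist(q, vec), idx) for idx, vec in self.candidates(q))[:k]


rng = random.Random(42)
data = [[rng.gauss(0.0, 1.0) for _ in range(16)] for _ in range(1000)]

index = RandomHyperplaneLSH(dim=16, n_planes=8)
for i, vec in enumerate(data):
    index.add(i, vec)

print(f"scored {len(index.candidates(data[7]))} of {len(data)} vectors")
print(index.query(data[7], k=3))  # data[7] itself comes first, at distance 0.0
```

Production LSH implementations typically use several independent hash tables and merge their candidates, because a single table can miss true neighbors that land in a neighboring bucket.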

Practical Implementation

When implementing similarity search in practice, several libraries and frameworks can help:

- FAISS (Facebook AI Similarity Search): A library optimized for fast and efficient similarity search on large datasets.

- Annoy (Approximate Nearest Neighbors Oh Yeah): A C++ library with Python bindings, designed for memory-efficient and fast search.

- HNSW (Hierarchical Navigable Small World): A graph-based ANN algorithm that builds a multi-layer proximity graph to navigate the vector space efficiently; implementations are available in libraries such as hnswlib and FAISS.

Applications of Similarity Search

Similarity search has a wide range of applications across various fields, leveraging the ability to find and compare similar items quickly and accurately. Here are some key applications:

1. Recommendation Systems

Recommendation systems use similarity search to suggest products, content, or services based on user preferences and behavior.

- E-commerce: Recommending products similar to those a user has viewed or purchased.

- Streaming Services: Suggesting movies, TV shows, or music tracks based on viewing or listening history.

- Online Advertising: Displaying ads relevant to a user’s interests based on their browsing activity.

2. Image and Video Retrieval

Similarity search is crucial for retrieving visually similar images or videos from large databases.

- Content-Based Image Retrieval (CBIR): Finding images that match a query image based on visual similarity.

- Video Recommendation: Suggesting videos similar to the ones a user has watched based on visual content analysis.

3. Natural Language Processing (NLP)

In NLP, similarity search helps in various text-based applications by finding semantically similar documents or phrases.

- Document Clustering: Grouping similar documents for topic modeling or categorization.

- Semantic Search: Improving search engine results by understanding the context and meaning of queries.

- Plagiarism Detection: Identifying duplicate or highly similar text across documents.

4. Fraud Detection

Similarity search helps detect fraudulent activity by comparing new behavior against known fraud patterns and flagging anomalies that deviate from normal behavior.

- Financial Transactions: Identifying unusual transactions that are similar to known fraudulent patterns.

- Identity Theft: Detecting login attempts or account activities that match patterns of previous fraud.

5. Healthcare and Genomics

Similarity search aids in medical diagnosis and genetic research by comparing patient data and genetic sequences.

- Medical Imaging: Comparing patient scans to identify similar cases and assist in diagnosis.

- Genomic Research: Finding similar genetic sequences to study genetic variations and their implications.

Challenges in Similarity Search

Handling Vague and Varied Queries

One of the primary challenges in similarity search is the nature of user queries. Queries can range from very generic terms like “shoes” to very specific items such as “Nike AF-1 LV8”. The system must be able to discern these nuances and understand how different items relate to one another. This requires a deep understanding of the semantic meaning behind the queries, which goes beyond simple keyword matching.

Scalability Issues

Another significant challenge is scalability. In real-world applications, we often deal with massive datasets that can include billions of items. Searching through such large volumes of data efficiently requires advanced techniques and powerful computational resources. Traditional database systems, which are designed for exact matches and symbolic representations, struggle to perform well in these scenarios.

Conclusion

Similarity search, also known as vector search, plays a pivotal role in various modern applications. By leveraging vector embeddings and sophisticated distance metrics, similarity search allows us to find and compare items based on their semantic meaning. Here are the key takeaways:

- Understanding Vector Representations: Transforming real-world objects into vector embeddings captures their deeper meanings, enabling effective similarity comparisons.

- Choosing the Right Metric: Selecting the appropriate distance metric (Euclidean, Manhattan, Cosine, Chebyshev) depends on the specific use case and data characteristics.

- Performing Similarity Search: Exact k-Nearest Neighbors (k-NN) works well for smaller datasets, while Approximate Nearest Neighbor (ANN) methods trade some accuracy for the speed needed to search large datasets.

- Diverse Applications: Similarity search is integral to recommendation systems, image and video retrieval, NLP, fraud detection, and healthcare, among other fields.

To truly harness the power of similarity search, it’s essential to understand the underlying principles and choose the right tools and techniques for your specific needs. Whether you are building a recommendation engine, a content-based retrieval system, or a fraud detection mechanism, similarity search can significantly enhance the accuracy and efficiency of your solutions.
