FinOps for AI: Optimizing AI Infrastructure Costs

Introduction

Financial Operations (FinOps) has become an essential discipline in the cloud era, bringing together engineering, finance, and business teams to maximize the value of technology spend. As enterprises adopt AI and large language models (LLMs) at scale, FinOps principles are now crucial for AI workloads as well.

Why? Because AI introduces new cost challenges that traditional cloud cost management wasn’t designed to handle. In the AI-driven world, controlling spend is as critical as model accuracy or uptime. Here are some unique cost challenges introduced by modern AI initiatives:

- Unpredictable Token Usage: LLMs use token-based pricing, and prompt complexity or user activity can cause usage (and costs) to spike. Without visibility, budgets are easily blown.

- Multi-Cloud GPU Sprawl: AI workloads span on-prem and multiple clouds, each with different GPU pricing. Tracking idle or underutilized GPUs is difficult without centralized oversight, leading to wasted spend.

- Prompt Pipeline Complexity: AI workflows often involve multiple chained model/tool calls (e.g. RAG, agents), multiplying cost per query. Because each step adds its own tokens, a small change in behavior can multiply the cost of every query.

- Tool Fragmentation & Visibility Gaps: Different teams use different tools (OpenAI, fine-tuned models, cloud GPUs) without unified cost tracking. It’s hard to answer: “What’s our per-inference cost?” or “Which team is spending the most?”
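To make the pipeline-complexity point concrete, here is a minimal arithmetic sketch comparing a single model call with a three-step agent-style chain. The token counts and per-1,000-token prices are hypothetical placeholders, not any provider's actual rates.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    input_tokens: int
    output_tokens: int
    input_price_per_1k: float   # hypothetical USD per 1,000 input tokens
    output_price_per_1k: float  # hypothetical USD per 1,000 output tokens

    def cost(self) -> float:
        # Token-based pricing: input and output tokens are billed separately.
        return (self.input_tokens / 1000) * self.input_price_per_1k + (
            self.output_tokens / 1000
        ) * self.output_price_per_1k


def query_cost(steps) -> float:
    """Total cost of one user query that triggers every step in the chain."""
    return sum(step.cost() for step in steps)


# One direct call versus a chained plan -> retrieve -> answer pipeline.
single_call = [Step("answer", 500, 200, 0.01, 0.03)]
agent_chain = [
    Step("plan", 500, 100, 0.01, 0.03),
    Step("retrieve-and-read", 3000, 100, 0.01, 0.03),
    Step("answer", 3500, 300, 0.01, 0.03),
]

print(f"single call: ${query_cost(single_call):.3f} per query")
print(f"agent chain: ${query_cost(agent_chain):.3f} per query")
```

Under these assumed numbers the chained pipeline costs several times more per query than the single call, which is why per-step visibility matters.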

FinOps is the discipline of cloud financial management, and its core principles are visibility, accountability, and optimization. FinOps for AI means applying those same principles to these AI-specific challenges. In the sections below, we’ll break down how each FinOps principle applies to AI and, crucially, how TrueFoundry’s platform helps implement them in a practical, engineering-friendly way.

Visibility: Centralized Observability for AI Usage and Costs

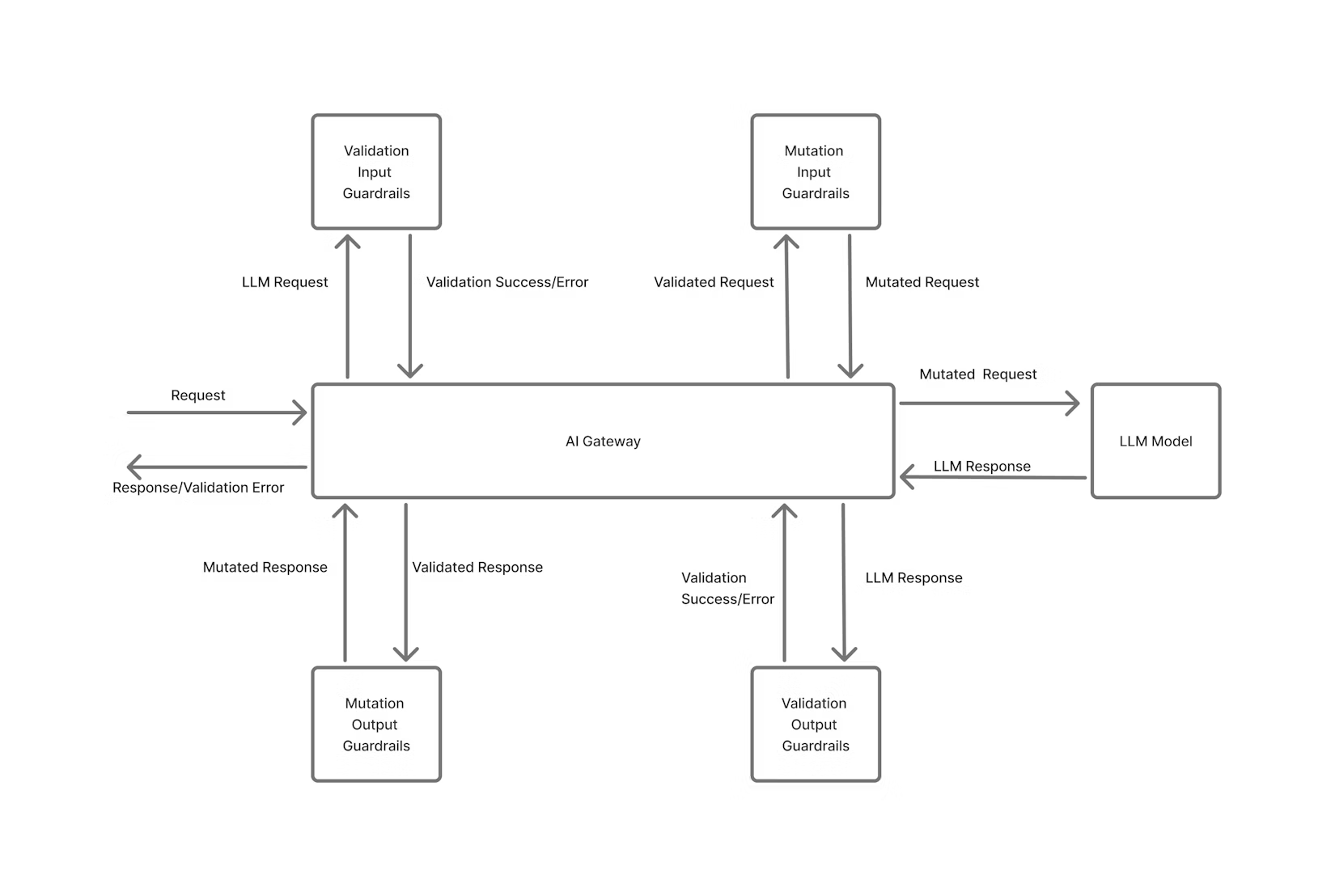

The first pillar of FinOps is visibility – “You can’t improve what you don’t measure.” In the AI context, visibility means capturing comprehensive data about every model invocation, so you know exactly where your token and GPU budgets are going. This is easier said than done when usage is spread across multiple providers and infrastructure. TrueFoundry addresses this through a centralized AI Gateway that all AI requests funnel through.

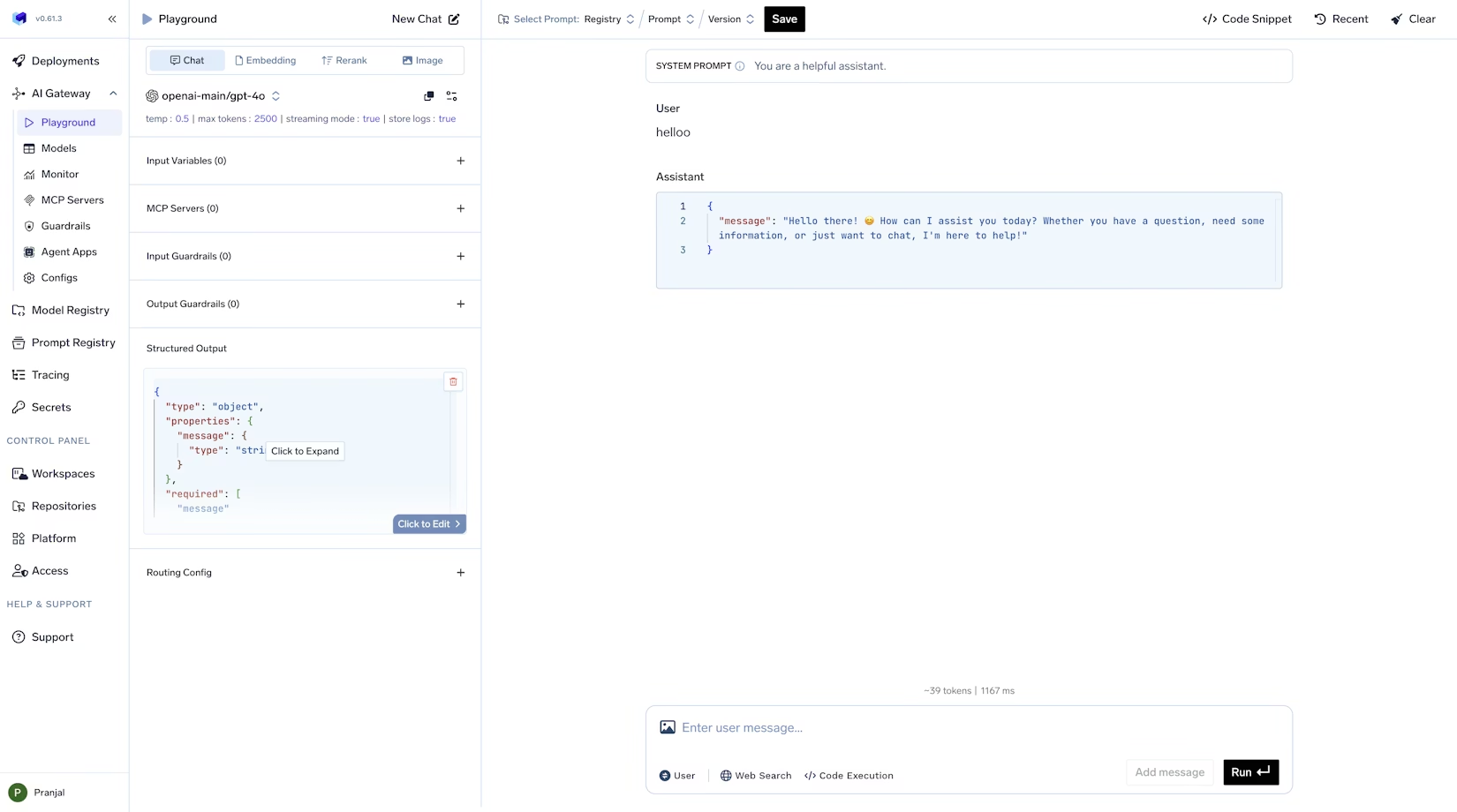

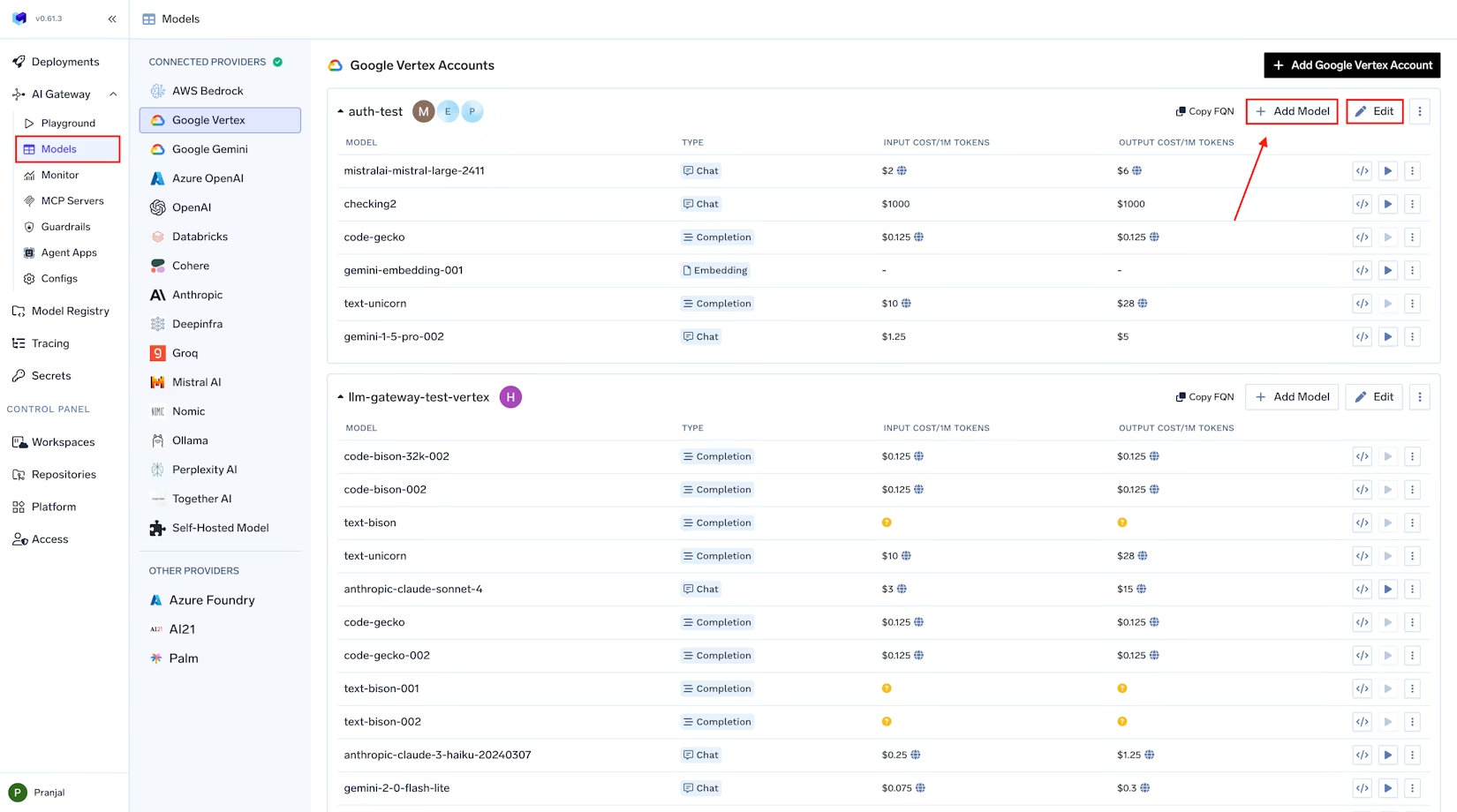

Unified AI Gateway: TrueFoundry’s AI Gateway acts as a single entry point (proxy) for all AI model calls – whether you’re hitting an external API like OpenAI or Anthropic, or a self-hosted model running on your infrastructure. By routing all inference requests through one gateway, you establish a “single pane of glass” for observability and cost tracking. The gateway is aware of model-specific nuances like token counting and latency, and it logs every request in a structured way. This eliminates blind spots from tool fragmentation: no matter which model or provider is used, the usage is tracked centrally.

Granular Logging and Metadata: Every request passing through TrueFoundry’s AI Gateway is automatically logged with rich metadata for attribution. This includes the model name, timestamp, input/output token counts, latency, the user or API key making the request, and more. Teams can also attach custom tags/metadata to each request, such as customer_id, application, environment, or feature_name.

For example, you might tag requests with the product feature or internal team responsible. TrueFoundry makes this easy by allowing developers to include an X-TFY-METADATA header in API calls. For instance, using the OpenAI Python SDK pointed at the gateway:

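    from openai import OpenAI

    # Point the OpenAI client at the TrueFoundry gateway instead of the provider directly
    client = OpenAI(api_key="...", base_url="https://llm-gateway.truefoundry.com/api/inference/openai")

    response = client.chat.completions.create(
        model="openai-main/gpt-4",
        messages=[{"role": "user", "content": "Hello"}],
        extra_headers={
            # Custom tags recorded with this request in logs and metrics
            "X-TFY-METADATA": '{"application":"booking-bot","environment":"staging","customer_id":"123456"}',
            # Enable request logging for this call
            "X-TFY-LOGGING-CONFIG": '{"enabled": true}',
        },
    )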

In this snippet, the request is tagged with an application name, environment, and customer ID as metadata. All values are strings (max 128 chars), and you can include as many fields as needed. These tags travel with the request through the gateway and get recorded in logs and metrics.
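Hand-writing the JSON string for the header is error-prone, so a small helper can build and validate it. The sketch below only enforces the two constraints stated above (string values, at most 128 characters); the function name `build_metadata_header` is hypothetical and not part of any TrueFoundry SDK.

```python
import json

MAX_VALUE_LEN = 128  # from the text: values are strings of at most 128 characters


def build_metadata_header(tags: dict) -> str:
    """Serialize metadata tags into a JSON string for the X-TFY-METADATA header."""
    for key, value in tags.items():
        if not isinstance(value, str):
            raise TypeError(f"metadata value for {key!r} must be a string")
        if len(value) > MAX_VALUE_LEN:
            raise ValueError(f"metadata value for {key!r} exceeds {MAX_VALUE_LEN} characters")
    # Compact separators keep the header value short.
    return json.dumps(tags, separators=(",", ":"))


header_value = build_metadata_header(
    {"application": "booking-bot", "environment": "staging", "customer_id": "123456"}
)
```

The resulting `header_value` can be passed as the `"X-TFY-METADATA"` entry of `extra_headers` in the call shown earlier.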

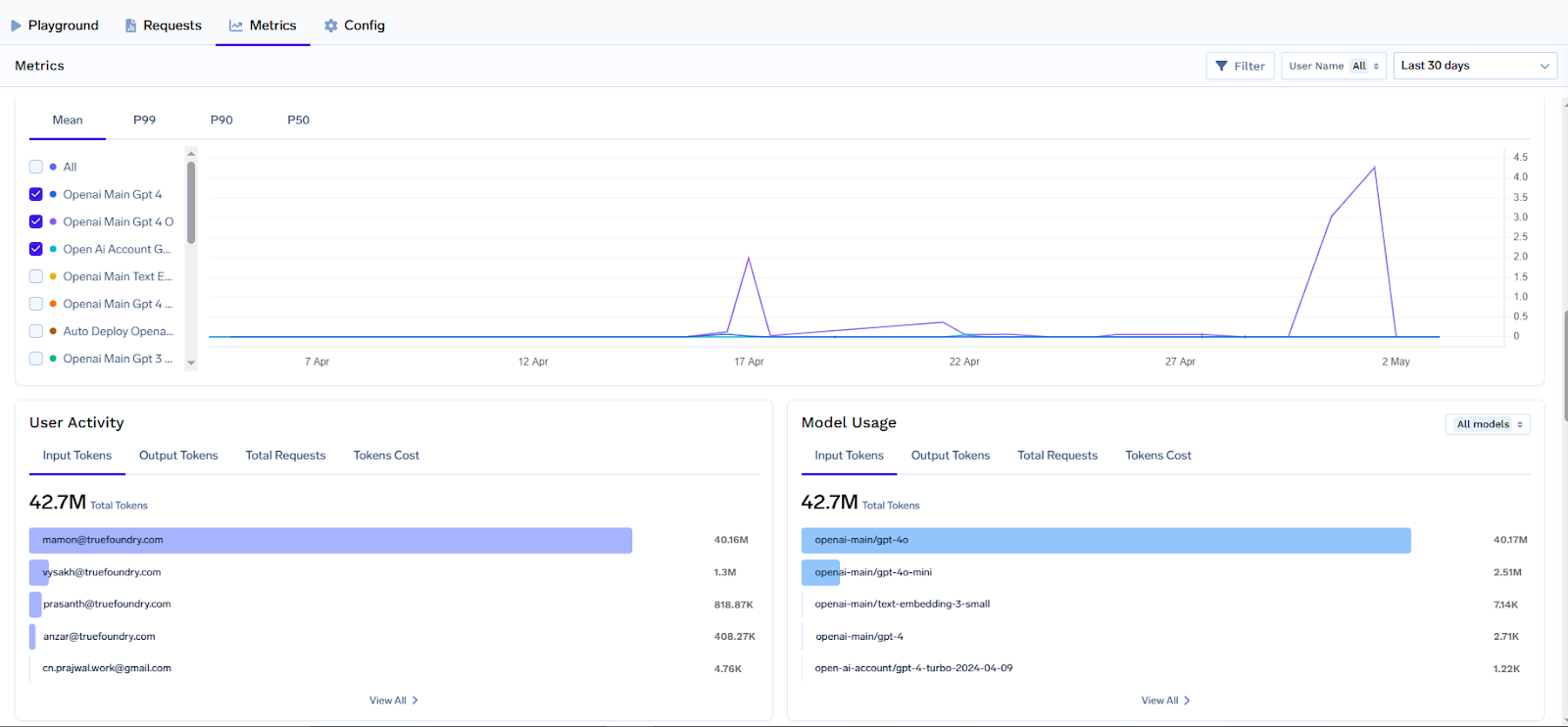

Real-Time Metrics Collection: The AI Gateway doesn’t just log raw data – it also emits structured metrics for monitoring. For every request, TrueFoundry tracks metrics like number of input tokens, output tokens, and the estimated cost of that request. These metrics are labeled with dimensions such as model name, username (or service), and any custom metadata tags you’ve enabled as labels.

For example, llm_gateway_request_total_cost is a counter metric that accumulates the cost of tokens used, labeled by model, user, and custom metadata like customer_id. This means you can instantly break down cost by whatever categories matter to your business (team, customer, feature, etc.) in your monitoring tools.

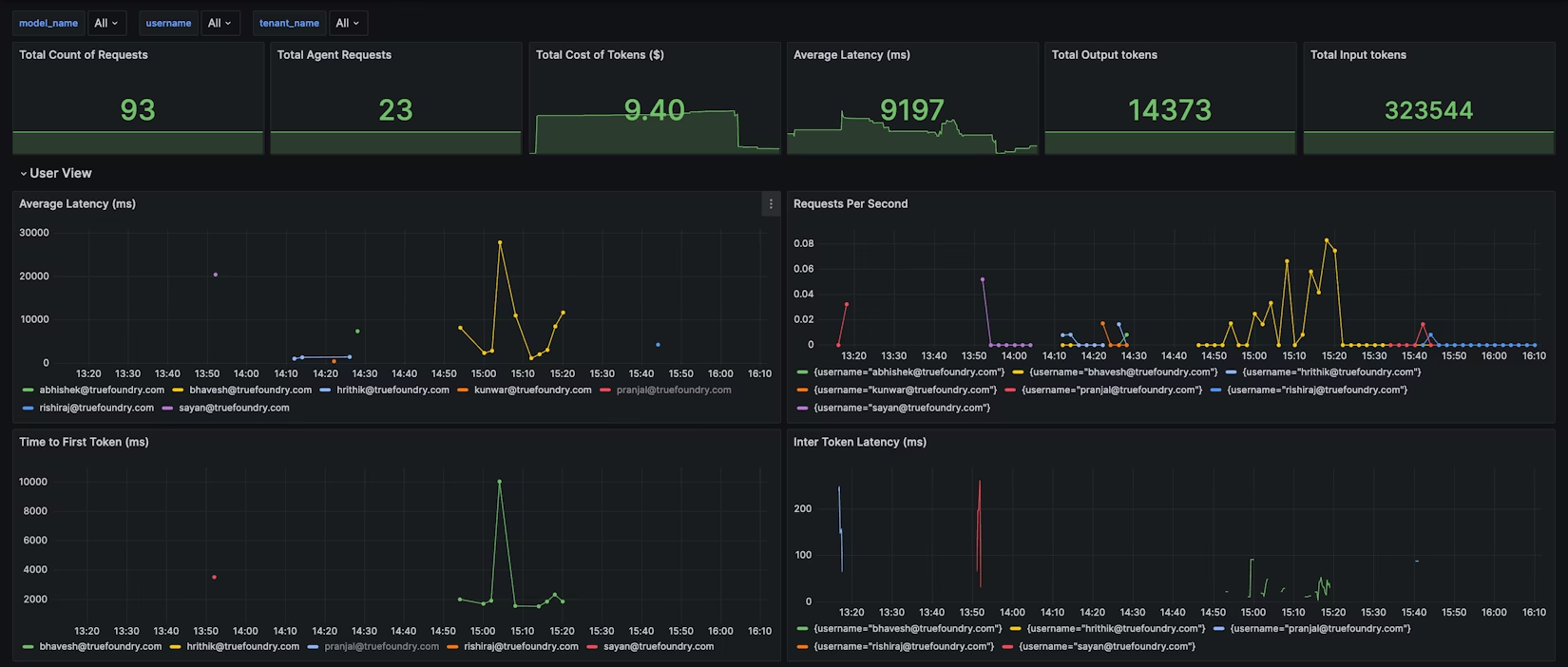

Integration with Monitoring Dashboards: TrueFoundry’s observability is designed to plug into your existing monitoring stack. The gateway exposes a /metrics endpoint with Prometheus-compatible metrics, and can also push metrics via OpenTelemetry. With a few configuration settings, you can have the gateway publish metrics to your Prometheus or Datadog backend in real time. Once ingested, these metrics can be visualized on Grafana dashboards or any analytics platform your organization uses.
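As a rough illustration of what can be done with a Prometheus-compatible /metrics endpoint, the sketch below parses a sample of the text exposition format and sums cost per label value. The sample lines and the label names `model_name` and `customer_id` are assumptions made for illustration; the parser is deliberately simplified (no escaped quotes, no timestamps), and in practice Prometheus itself would scrape and aggregate these metrics.

```python
import re
from collections import defaultdict

# Hypothetical sample of what a scrape of /metrics might contain.
SAMPLE_METRICS = """\
# TYPE llm_gateway_request_total_cost counter
llm_gateway_request_total_cost{model_name="gpt-4",customer_id="123456"} 12.5
llm_gateway_request_total_cost{model_name="gpt-4",customer_id="789"} 3.0
llm_gateway_request_total_cost{model_name="small-model",customer_id="123456"} 0.5
"""

SAMPLE_LINE = re.compile(r"^(\w+)\{(.*)\}\s+([0-9.eE+-]+)$")
LABEL_PAIR = re.compile(r'(\w+)="([^"]*)"')


def cost_by_label(text: str, metric: str, label: str) -> dict:
    """Sum a metric's sample values, grouped by the value of one label."""
    totals = defaultdict(float)
    for line in text.splitlines():
        match = SAMPLE_LINE.match(line.strip())
        if not match or match.group(1) != metric:
            continue  # skips comments, blank lines, and other metrics
        labels = dict(LABEL_PAIR.findall(match.group(2)))
        totals[labels.get(label, "unknown")] += float(match.group(3))
    return dict(totals)


per_customer = cost_by_label(SAMPLE_METRICS, "llm_gateway_request_total_cost", "customer_id")
per_model = cost_by_label(SAMPLE_METRICS, "llm_gateway_request_total_cost", "model_name")
```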

In fact, TrueFoundry provides pre-built Grafana dashboard JSON for AI Gateway metrics, covering views per model, per user, and per configuration rule.

For example, the Model View might show token usage and latency per model, while the User View breaks down usage by username to identify heavy users. You can even add custom dashboard filters for your metadata tags (e.g. filter all charts by customer_id or project) to get on-demand cost reports for a given client or project.

By centralizing all this data, you achieve complete visibility into AI consumption. It becomes trivial to answer questions like: Which team generated the most GPT-4 tokens this week? How much did our new chatbot feature cost in API calls? Which customers or users are driving the highest usage? With TrueFoundry, you can simply toggle a filter or run a query to get these answers. This level of transparency is the foundation for FinOps in AI – it shines a light on every token and GPU-hour, turning uncertainty into actionable insight.

Accountability and Governance: Controlling AI Spend Proactively

Visibility sets the stage, but FinOps also requires accountability and proactive governance of spending. In cloud FinOps, teams are encouraged to own their usage and adhere to budgets. In AI FinOps, given the unpredictability of usage, it's essential to enforce some guardrails so that costs don’t run away due to a bug or an experiment gone wild. TrueFoundry’s platform builds cost governance directly into the AI infrastructure layer, so you can control usage in real-time rather than just report on it after the fact.

Per-Request Attribution and Chargebacks: Because TrueFoundry tags and tracks every request with team and project metadata, you can attribute costs at a fine-grained level in real time. This enables internal chargeback or showback models – e.g. show each product team how much their features are spending on AI, or charge an external customer for their specific usage.

TrueFoundry’s cost metrics can be filtered by these attributes to produce instant breakdowns (cost by user, by feature, by customer, etc.). Sharing these reports creates accountability: teams can see the impact of their code and prompts on the bill, and finance can ensure the expenses are mapped to business units or clients.
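A showback report of this kind boils down to grouping per-request costs by an attribution tag and computing each group's share. The following sketch assumes request records have already been exported as dictionaries; the field names `team` and `cost_usd` and the sample values are hypothetical.

```python
from collections import defaultdict


def showback(records, tag="team"):
    """Return (tag value, total cost, percent of total) rows, largest spender first."""
    totals = defaultdict(float)
    for record in records:
        totals[record.get(tag, "untagged")] += record["cost_usd"]
    grand_total = sum(totals.values())
    rows = [
        (name, cost, 100 * cost / grand_total if grand_total else 0.0)
        for name, cost in totals.items()
    ]
    return sorted(rows, key=lambda row: row[1], reverse=True)


# Hypothetical exported request records.
requests_log = [
    {"team": "search", "cost_usd": 30.0},
    {"team": "support-bot", "cost_usd": 60.0},
    {"team": "search", "cost_usd": 10.0},
]

for name, cost, share in showback(requests_log):
    print(f"{name:12s} ${cost:8.2f} {share:5.1f}%")
```

Requests missing the tag are grouped under "untagged", which doubles as a quick check on tagging coverage.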

Role-Based Access Control and Permissions: Part of governance is ensuring only authorized usage of expensive resources. TrueFoundry’s enterprise-grade gateway supports role-based access control (RBAC) and API key management. This means you can restrict who is allowed to call certain high-cost models or limit access for experimental features.

For example, you might allow a QA or staging environment to use a smaller model, but only production can call the expensive GPT-4 API. Or you could limit a junior developer’s API key to a sandbox model. By gating access, you prevent accidental usage of costly models by the wrong people. Combined with detailed audit logs and metadata, this also creates an audit trail for compliance (i.e. you know exactly which user or service made each request).
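Conceptually, gating access by role is an allow-list lookup performed before a request is forwarded. The sketch below illustrates that idea only; the role names, the model names other than `openai-main/gpt-4`, and the `is_allowed` function are hypothetical and do not reflect how TrueFoundry's RBAC is actually configured.

```python
# Hypothetical mapping of roles to the models they may call.
ROLE_ALLOWED_MODELS = {
    "production-service": {"openai-main/gpt-4", "small-model"},
    "staging-service": {"small-model"},
    "junior-developer": {"sandbox-model"},
}


def is_allowed(role: str, model: str) -> bool:
    """Deny by default: unknown roles and unlisted models are rejected."""
    return model in ROLE_ALLOWED_MODELS.get(role, set())


print(is_allowed("production-service", "openai-main/gpt-4"))
print(is_allowed("staging-service", "openai-main/gpt-4"))
```

Denying by default means a newly added expensive model is unreachable until someone explicitly grants access to it.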

Rate Limiting Policies: One of the most powerful FinOps guardrails is rate limiting. TrueFoundry’s AI Gateway allows you to configure flexible rate limit rules to cap usage along various dimensions – by user, team, model, or even custom tags.

For instance, you can say “User X can only make 1,000 GPT-4 requests per day” or “All requests from project ABC are limited to 50,000 tokens per hour.” The configuration is defined in a simple YAML format. Here’s an example snippet illustrating a few rules:

    name: ratelimiting-config
    type: gateway-rate-limiting-config
    rules:
      # 1. Limit a specific user to 1000 requests/day on the GPT-4 model
      - id: "limit-gpt4-user1-daily"
        when:
          subjects: ["user:[email protected]"]
          models: ["openai-main/gpt4"]
        limit_to: 1000
        unit: requests_per_day
      # 2. Limit each project (by metadata tag) to 50k tokens per hour
      - id: "project-{metadata.project_id}-hourly"
        when: {}
        limit_to: 50000
        unit: tokens_per_hour

In rule #1 above, a specific user (identified by their API key or username) is capped at 1000 GPT-4 requests per day. In rule #2, we impose a limit of 50k tokens/hour per project, assuming each request includes a project_id in its metadata. The {metadata.project_id} syntax means the gateway will enforce a separate bucket for each unique project ID encountered. In practice, that rule prevents any single project from accidentally consuming more than 50k tokens in an hour (for example, if an integration goes rogue or a client has an unexpected spike). The gateway evaluates incoming requests against these rules in order, and if a request exceeds a limit, it is throttled or rejected on the spot.
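To illustrate the "separate bucket per project" behavior, here is a minimal fixed-window limiter keyed by an arbitrary bucket name. This is a sketch of the general technique, not the gateway's implementation, whose algorithm the text does not specify; the class and parameter names are hypothetical.

```python
from collections import defaultdict


class FixedWindowLimiter:
    """Caps the units (requests or tokens) each bucket may use per time window."""

    def __init__(self, limit: int, window_seconds: int):
        self.limit = limit
        self.window_seconds = window_seconds
        self._usage = defaultdict(int)  # (bucket_key, window_index) -> units used

    def allow(self, bucket_key: str, units: int, now: float) -> bool:
        window_index = int(now // self.window_seconds)
        key = (bucket_key, window_index)
        if self._usage[key] + units > self.limit:
            return False  # over the limit: reject without counting the request
        self._usage[key] += units
        return True


# Mirrors rule #2: 50,000 tokens per hour, one bucket per project ID.
limiter = FixedWindowLimiter(limit=50_000, window_seconds=3600)
first = limiter.allow("project-A", 30_000, now=0)
second = limiter.allow("project-A", 30_000, now=10)          # same hour, would exceed 50k
other_project = limiter.allow("project-B", 30_000, now=10)   # separate bucket
next_hour = limiter.allow("project-A", 30_000, now=3600)     # new window
```

Passing `now` explicitly keeps the sketch deterministic; a real limiter would read a clock and would also evict counters from expired windows.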

Budget Alerts and Quotas: In addition to raw rate limits, TrueFoundry allows setting budget thresholds. You can define monthly or daily spending caps for a team or application. For example, you might budget $1,000/month for a dev team’s experimentation with LLMs. The gateway tracks the cumulative cost of requests, and once a threshold is crossed it can either send alerts or disable further usage until an admin intervenes. This is essentially automated budget enforcement. Instead of finding out at the end of the month that Team A overspent, you catch it at (say) 80% of the budget and can take action.

TrueFoundry’s gateway can even auto-throttle or pause requests when a budget is exhausted, preventing overspend while notifying stakeholders. Finance teams appreciate this kind of safety net, as it turns cost governance into an active, continuous process rather than an after-the-fact analysis.
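The alert-then-block behavior described above can be sketched as a small state machine over cumulative spend. The class below is an illustration under assumed semantics (one alert at 80%, hard stop at 100%); the name `BudgetTracker` and its return values are hypothetical.

```python
class BudgetTracker:
    """Tracks cumulative spend against a budget with an early-warning threshold."""

    def __init__(self, budget_usd: float, alert_fraction: float = 0.8):
        self.budget_usd = budget_usd
        self.alert_at = budget_usd * alert_fraction
        self.spent = 0.0
        self.alerted = False

    def record(self, cost_usd: float) -> str:
        """Return "blocked", "alert" (once, on crossing the threshold), or "ok"."""
        if self.spent + cost_usd > self.budget_usd:
            return "blocked"  # request is refused and not counted
        self.spent += cost_usd
        if self.spent >= self.alert_at and not self.alerted:
            self.alerted = True
            return "alert"
        return "ok"


tracker = BudgetTracker(budget_usd=1000.0)
statuses = [tracker.record(cost) for cost in (700.0, 100.0, 100.0, 200.0)]
print(statuses, tracker.spent)
```

With the sample costs, the second request crosses the 80% mark and the fourth would exceed the budget, so it is refused.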

Prompt Guardrails and Validation: Another aspect of governance is enforcing best practices on prompts and usage patterns to avoid cost explosions. TrueFoundry’s platform includes guardrail features to, for example, block certain unsafe or inefficient prompts. You can set up rules to reject prompts that exceed a maximum token length or contain disallowed content.

Similarly, TrueFoundry supports structured output schemas and prompt templates which help keep responses concise and predictable, indirectly controlling token usage.
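A prompt guardrail of this kind is essentially a validation step that runs before the model is called. The sketch below is a simplified illustration: it estimates tokens by counting whitespace-separated words, which is only a crude stand-in for a real tokenizer, and the function name, default limit, and blocked-term list are hypothetical.

```python
def validate_prompt(prompt: str, max_tokens: int = 2000, blocked_terms=()):
    """Return (accepted, reason). Token count is a rough word-based estimate."""
    estimated_tokens = len(prompt.split())
    if estimated_tokens > max_tokens:
        return False, f"prompt too long: ~{estimated_tokens} tokens > {max_tokens}"
    lowered = prompt.lower()
    for term in blocked_terms:
        if term.lower() in lowered:
            return False, f"prompt contains disallowed term: {term}"
    return True, "ok"


ok_result = validate_prompt("Summarize this booking confirmation.")
too_long = validate_prompt("word " * 50, max_tokens=10)
disallowed = validate_prompt("Please print the INTERNAL-SECRET value", blocked_terms=["internal-secret"])
```

Rejecting an oversized prompt before it reaches the provider means it incurs no token charges at all.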

Optimization: Efficient and Intelligent Use of AI Resources

The final FinOps principle is optimization – continuously improving cost efficiency without sacrificing performance or results. After achieving visibility and setting up governance, organizations can focus on getting more value from each dollar spent on AI. TrueFoundry provides multiple avenues to optimize AI workloads, from intelligent request routing at the model level to efficient utilization of GPU infrastructure.

Smart Model Selection (Right-sizing Models): Not every task needs the most expensive model. A hallmark of AI cost optimization is using the cheapest sufficient model for each job. TrueFoundry’s AI Gateway supports hybrid model routing strategies so you can automatically direct requests to different models based on cost or complexity policies.

For example, you might route simple queries or low-priority requests to a smaller, cheaper model (like an open-source 7B model or an OpenAI GPT-3.5-class model), and only send complex or high-stakes queries to a premium model like GPT-4. Many teams find that a large percentage of their traffic can be handled by cheaper models, reserving the costly model for the few cases where its superior capability is truly needed. TrueFoundry makes this feasible by allowing rule-based routing (or even ML-based dynamic routing) in the gateway configuration. As a result, you avoid paying for “overkill” capability, because the gateway can downshift to a cheaper model automatically in the right scenarios.
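A rule-based routing policy can be as simple as a function from request attributes to a model name. The sketch below shows the idea in application code rather than in the gateway's own configuration format, which is not reproduced here; the cheap model name, the priority values, and the word-count threshold are all hypothetical.

```python
CHEAP_MODEL = "self-hosted/small-7b"   # hypothetical name for a smaller model
PREMIUM_MODEL = "openai-main/gpt-4"    # premium model name used earlier in this article


def choose_model(prompt: str, priority: str = "normal", word_threshold: int = 200) -> str:
    """Send high-priority or long prompts to the premium model, the rest to the cheap one."""
    is_complex = len(prompt.split()) > word_threshold
    if priority == "high" or is_complex:
        return PREMIUM_MODEL
    return CHEAP_MODEL


print(choose_model("What are your opening hours?"))
print(choose_model("Review this contract clause.", priority="high"))
```

Prompt length is only a crude proxy for complexity; teams typically refine such rules using the quality and cost data collected through observability.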

Batching and Caching: When you pay per request or per token, it pays to eliminate redundant work. TrueFoundry’s platform provides features for batch processing and response caching to improve efficiency. With the Batch Inference API, you can combine multiple prompts or inputs into one request to amortize overheads. Response caching complements this by serving repeated identical requests from a stored result instead of invoking the model again.
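The response caching mentioned above can be illustrated with a minimal exact-match cache keyed on the model and messages. This is a sketch of the general technique rather than TrueFoundry's caching feature; `ResponseCache` and the `call_model` callback are hypothetical names, and the cache has no expiry or size limit.

```python
import hashlib
import json


class ResponseCache:
    """Exact-match cache: identical (model, messages) pairs reuse the stored response."""

    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, messages: list) -> str:
        payload = json.dumps({"model": model, "messages": messages}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_call(self, model: str, messages: list, call_model):
        key = self._key(model, messages)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = call_model(model, messages)  # only paid for on a cache miss
        self._store[key] = response
        return response


def fake_model(model, messages):
    # Stand-in for a real, billable model call.
    return f"echo: {messages[-1]['content']}"


cache = ResponseCache()
question = [{"role": "user", "content": "What is your refund policy?"}]
first_answer = cache.get_or_call("openai-main/gpt-4", question, fake_model)
second_answer = cache.get_or_call("openai-main/gpt-4", question, fake_model)
```

Exact-match caching only helps when the same prompt recurs verbatim, for example FAQ-style questions; it is not appropriate where responses must vary between calls.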

Prompt Optimization and Truncation: Another optimization avenue is reducing prompt size. Through observability, you might discover certain prompts are unnecessarily long (e.g. including irrelevant context). Techniques like prompt compression, using shorter history, or employing Retrieval-Augmented Generation (where only the relevant external knowledge is retrieved instead of dumping a whole document into the prompt) can reduce token counts.

TrueFoundry supports RAG workflows and even prompt management tools (like prompt versioning and testing) to help teams iterate towards more efficient prompts. For instance, instead of a user conversation sending the entire chat history every turn, you might summarize or drop older context once it’s irrelevant – trading a tiny bit of accuracy for a large cost saving. TrueFoundry’s Prompt Playground and analytics can assist in analyzing how prompt length correlates with cost, highlighting where you might trim fat.
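Dropping older context can be sketched as keeping the system message plus as many of the most recent turns as fit in a token budget. The function below is an illustration under simplifying assumptions: tokens are approximated by word count unless a real counter is supplied, and system messages are assumed to belong at the start of the conversation. The name `trim_history` is hypothetical.

```python
def trim_history(messages, max_tokens, count_tokens=lambda text: len(text.split())):
    """Keep system messages and the newest turns that fit within max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(count_tokens(m["content"]) for m in system)

    kept = []
    for message in reversed(turns):  # walk from newest to oldest
        cost = count_tokens(message["content"])
        if cost > budget:
            break  # everything older than this point is dropped
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "be brief"},
    {"role": "user", "content": "one two three"},
    {"role": "assistant", "content": "four five"},
    {"role": "user", "content": "six seven eight nine"},
]
trimmed = trim_history(history, max_tokens=8)
```

Summarizing the dropped turns into a single short message is a common refinement when older context still matters.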

Optimizing GPU Usage: For teams running self-hosted models or doing training jobs, optimizing GPU infrastructure is crucial to FinOps. TrueFoundry’s ML platform is designed to maximize GPU utilization and eliminate waste. Key capabilities include:

- Auto-Scaling GPU Workloads: Configure models to scale GPU instances up/down based on load. For example, scale from 1 to 4 GPUs during traffic spikes, then revert, avoiding the cost of idle capacity.

- GPU Time-Slicing and MIG: TrueFoundry supports NVIDIA MIG (Multi-Instance GPU) and time-slicing to let multiple jobs securely share a single GPU. Ideal for small, infrequent workloads, this improves GPU utilization without spawning multiple instances.

- Smart Idle Shutdown: Automatically shuts down idle GPU nodes after a configurable timeout (e.g. 30 minutes). Prevents wasted spend from forgotten or inactive GPU endpoints - no manual cleanup needed.

- Spot Instance & Multi-Cloud Flexibility: Use AWS spot instances for cost savings or reserved capacity for steady loads. TrueFoundry supports multi-cloud and on-prem setups, enabling seamless cloud bursting when local GPUs are maxed out.
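Two of the capabilities listed above, auto-scaling and idle shutdown, reduce to simple decision rules. The sketch below shows one plausible form of each; the per-GPU throughput figure and the function names are hypothetical, while the 1-to-4 replica range and the 30-minute timeout come from the examples in the list.

```python
import math


def desired_replicas(current_rps: float, rps_per_gpu: float,
                     min_replicas: int = 1, max_replicas: int = 4) -> int:
    """Number of GPU replicas needed for the current load, clamped to the allowed range."""
    needed = math.ceil(current_rps / rps_per_gpu) if current_rps > 0 else 0
    return max(min_replicas, min(max_replicas, needed))


def should_shut_down(idle_seconds: float, timeout_seconds: float = 30 * 60) -> bool:
    """True once a GPU endpoint has been idle for at least the configured timeout."""
    return idle_seconds >= timeout_seconds


# Assuming, hypothetically, that one GPU replica sustains 10 requests per second.
print(desired_replicas(current_rps=25, rps_per_gpu=10))
print(should_shut_down(idle_seconds=45 * 60))
```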

Building FinOps Dashboards and Insights

A practical FinOps initiative benefits from clear reporting and dashboards that bring together the metrics and business insights. TrueFoundry simplifies the creation of FinOps dashboards for AI by providing all the necessary data out-of-the-box and integration points for popular tools.

Using the metrics exported by the AI Gateway, teams can build dashboards in Grafana, Datadog, or any BI tool to visualize AI usage and spending trends. For example, because each request is labeled with team and model, you could create a Grafana panel showing “Cost by Team (Last 7 Days)” by querying the llm_gateway_request_total_cost metric grouped by tenant_name (team). Another panel might show “Tokens per Request by Model” to identify which models are token-hungry and might need optimization. TrueFoundry’s pre-built Grafana dashboard already includes views to analyze usage by model, by user, and by configuration rules (e.g., to see if any rate limits are being hit frequently). You can extend these with custom metadata; for instance, add a filter for customer_id as a variable, so stakeholders can select a specific customer and see their token usage and cost over time.
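For a Grafana panel backed by Prometheus, the "Cost by Team (Last 7 Days)" view could be expressed as a PromQL query over the cost counter. The helper below just builds that query string; it assumes the metric and `tenant_name` label are exposed as named above, and the function name is hypothetical.

```python
def cost_by_label_query(label: str, window: str = "7d",
                        metric: str = "llm_gateway_request_total_cost") -> str:
    """Build a PromQL query: growth of the cost counter over the window, summed per label."""
    return f"sum by ({label}) (increase({metric}[{window}]))"


cost_by_team = cost_by_label_query("tenant_name")
cost_by_customer_today = cost_by_label_query("customer_id", window="1d")
print(cost_by_team)
```

Because the metric is a counter, `increase(...)` over the window gives the spend during that period rather than the all-time total.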

Integration with other monitoring and APM tools is possible via OpenTelemetry. If your organization uses Datadog, you can forward the gateway’s metrics to Datadog’s metrics intake (since Datadog can ingest OpenTelemetry or Prometheus metrics). This means your AI cost metrics can live alongside your infrastructure cost metrics. It becomes easy to correlate, say, a surge in token usage with a particular deployment or feature launch, because all the data is accessible in one place.

TrueFoundry also provides an Analytics API for usage data, so if you prefer to pull the data into a custom finance dashboard or a spreadsheet, you can do so. Many companies export raw data (TrueFoundry even allows CSV download of cost data) to combine with billing records, achieving a complete picture of cost per project when you add in overhead like storage or networking.

The key is that with the groundwork TrueFoundry lays (metadata tagging, real-time metrics), creating these FinOps insights doesn’t require building a data pipeline from scratch. You get accurate, attribution-rich data in real time, which is a huge step up from waiting for the end-of-month cloud bill. Engineering, ML, and finance leaders can together review dashboards that answer both technical and business questions about AI usage. This cross-functional visibility promotes a culture of cost-awareness.

Conclusion

Implementing FinOps for AI is an ongoing journey. It starts with awareness and grows into a discipline embedded in the AI development lifecycle. By establishing visibility, accountability, and optimization practices, organizations progress in FinOps maturity – from reactive cost reports to real-time cost control to eventually predictive optimization. Most importantly, building a FinOps culture around AI ensures sustainability. AI adoption will stall if costs grow unchecked or unpredictably. By viewing AI through a FinOps lens, organizations treat model access and GPU time as valuable resources to be managed, not limitless magic. This cultural shift is enabled by tooling: when teams have self-service access to metrics and cost reports, they can take ownership. TrueFoundry’s solution accelerates this cultural adoption by making AI usage transparent and governed by design – cost visibility and controls come baked into the platform, not as an afterthought.

