A Detailed LiteLLM Review: Features, Pricing, Pros and Cons [2026]

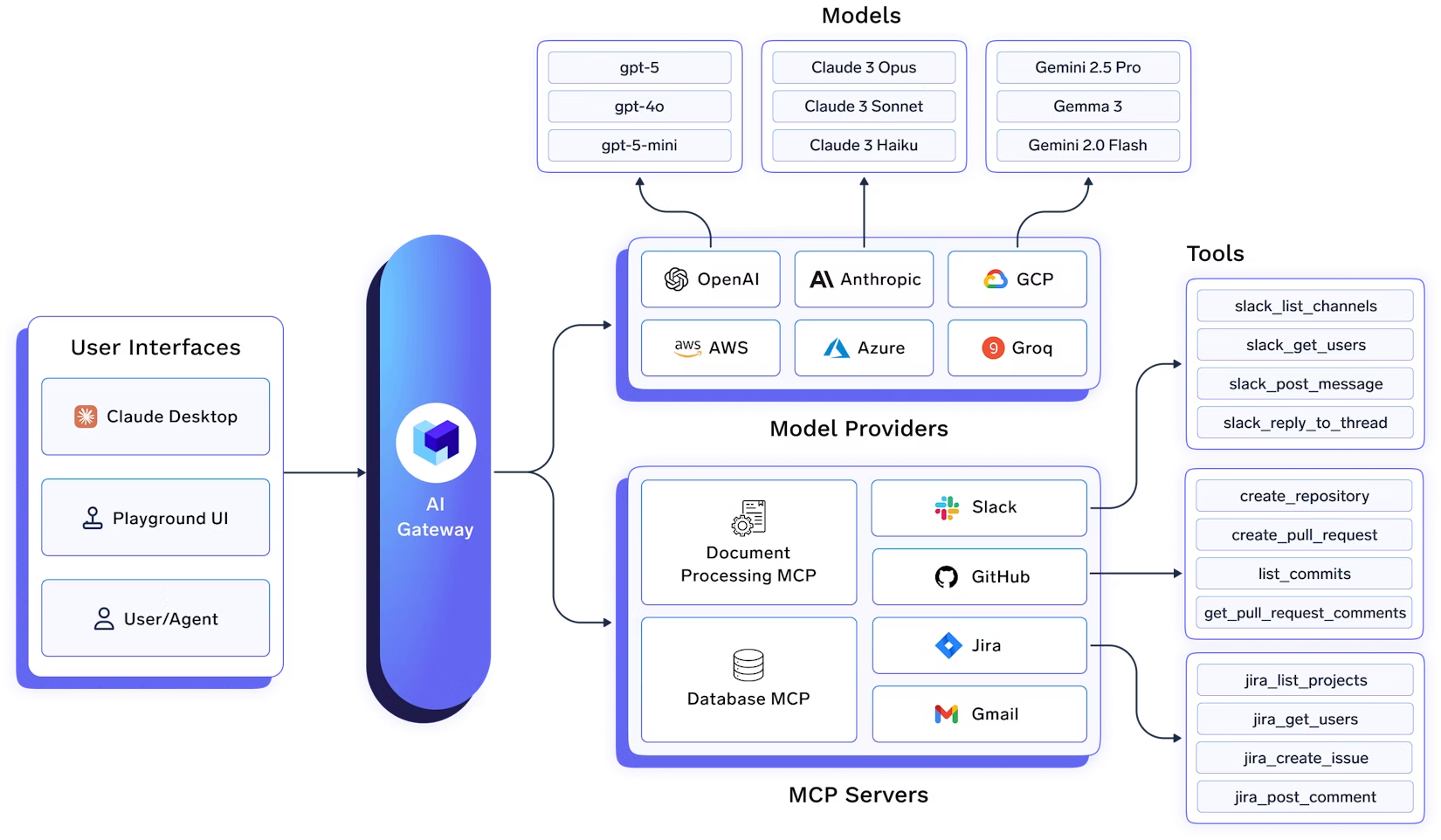

In the messy landscape of LLM APIs, LiteLLM has positioned itself as the "Universal Translator." The pitch is simple: write code once using the OpenAI format, and route it to 100+ LLMs across providers like Bedrock, Azure, or Vertex AI without rewriting your integration logic.

For a developer working on a weekend project, it’s a lifesaver. But does this open-source library actually hold up as a corporate gateway handling thousands of requests per minute? We spun up the proxy, looked at the code, and checked the enterprise pricing tiers to see what it really takes to run this in production.

Here is the honest review: the good, the bad, and the operational headaches of self-hosting.

What Is LiteLLM?

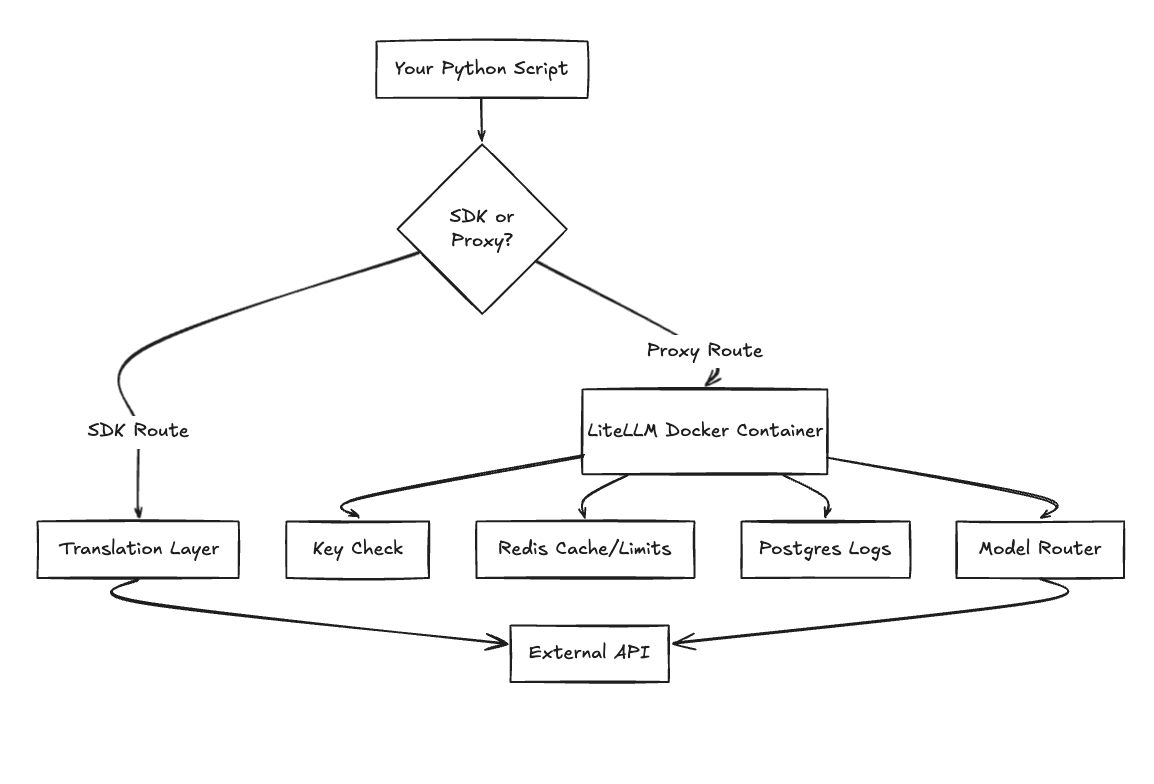

First, let’s clear up the confusion. LiteLLM isn’t just one thing; it’s two distinct tools that share a name. You need to know which one you are actually signing up for.

The Python SDK

This is just a Python package (pip install litellm). It’s a translation layer that runs inside your application code. You pass it a standard OpenAI-style list of messages (each with a role and content), and it maps those fields to whatever format Anthropic, Cohere, or Google Gemini expects. It’s stateless, free (MIT license), and runs wherever your Python code runs. It’s basically a very complex set of if/else statements that saves you from reading five different API documentation pages.
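To make the "translation layer" idea concrete, here is a minimal sketch of the kind of mapping the SDK does for you. It is not LiteLLM's code: it hand-converts an OpenAI-style message list into the request shape of Anthropic's Messages API, where the system prompt is a top-level field and max_tokens is required. The function name, the default of 1024 tokens, and the model string are illustrative assumptions, and real translation also has to cover tools, images, and streaming.

```python
def openai_to_anthropic(model: str, messages: list, max_tokens: int = 1024) -> dict:
    """Convert an OpenAI-style chat request into an Anthropic-style payload (sketch)."""
    # Anthropic expects the system prompt as a top-level field, not a message.
    system_parts = [m["content"] for m in messages if m["role"] == "system"]
    # Only user/assistant turns go into the messages array.
    chat = [
        {"role": m["role"], "content": m["content"]}
        for m in messages
        if m["role"] in ("user", "assistant")
    ]
    payload = {"model": model, "max_tokens": max_tokens, "messages": chat}
    if system_parts:
        payload["system"] = "\n".join(system_parts)
    return payload


openai_messages = [
    {"role": "system", "content": "You are terse."},
    {"role": "user", "content": "Summarize LiteLLM in one line."},
]
payload = openai_to_anthropic("claude-3-5-sonnet-latest", openai_messages)
print(payload["system"])         # You are terse.
print(len(payload["messages"]))  # 1
```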

The Proxy Server

This is the "Gateway" version. It’s a standalone FastAPI server that you deploy via Docker. It sits between your apps and the model providers. Unlike the SDK, this thing has state. It handles API keys, logs requests to a database, and manages rate limits via Redis. This is what you use if you have multiple teams and want a centralized control plane.
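What "this thing has state" means in practice can be sketched in a few lines. The example below is a hypothetical, simplified model of a gateway's key check and spend logging, using Python's built-in sqlite3 in memory as a stand-in for PostgreSQL. The table names, columns, and key format are assumptions for illustration, not LiteLLM's actual schema.

```python
import sqlite3

# In-memory sqlite3 stands in for the PostgreSQL database a proxy would use.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE api_keys (api_key TEXT PRIMARY KEY, team TEXT NOT NULL)")
db.execute("CREATE TABLE spend_log (api_key TEXT NOT NULL, model TEXT, cost_usd REAL)")
db.execute("INSERT INTO api_keys VALUES (?, ?)", ("sk-team-a-123", "team-a"))
db.commit()


def handle_request(api_key: str, model: str, cost_usd: float) -> str:
    """Reject unknown keys, then record the call's spend. Returns the team name."""
    row = db.execute("SELECT team FROM api_keys WHERE api_key = ?", (api_key,)).fetchone()
    if row is None:
        raise PermissionError("unknown API key")
    # A real gateway would forward the request to the model provider here.
    db.execute("INSERT INTO spend_log VALUES (?, ?, ?)", (api_key, model, cost_usd))
    db.commit()
    return row[0]


def team_spend(team: str) -> float:
    """Total logged spend across every key that belongs to a team."""
    (total,) = db.execute(
        "SELECT COALESCE(SUM(s.cost_usd), 0) FROM spend_log AS s "
        "JOIN api_keys AS k ON s.api_key = k.api_key WHERE k.team = ?",
        (team,),
    ).fetchone()
    return total


handle_request("sk-team-a-123", "gpt-4o", 0.25)
handle_request("sk-team-a-123", "claude-3-5-sonnet", 0.50)
print(team_spend("team-a"))  # 0.75
```

Even this toy version needs a schema, writes on every request, and a query path for reporting, which is exactly the state the SDK never has to carry.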

Fig 1: The Stack Overview

Where LiteLLM Excels for Fast-Moving Teams

There is a reason LiteLLM has 10k+ stars on GitHub. It solves the most annoying part of AI engineering: API fragmentation.

1. Universal API Standard

The biggest win here is the standardization. If you have ever tried to switch a prompt from GPT-4 to Claude 3.5 manually, you know the pain of reformatting message arrays. LiteLLM handles that parameter mapping and message formatting logic for you. You point your base URL to LiteLLM, and suddenly Azure, Bedrock, and Ollama all look like OpenAI. It removes the "vendor lock-in" friction at the code level.
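Because the proxy speaks the OpenAI wire format, moving an app onto it is mostly a matter of changing the base URL and key. The sketch below builds (without sending) two OpenAI-style chat completion requests using only Python's standard library: one aimed at OpenAI directly and one at a proxy. The proxy address http://localhost:4000, the placeholder keys, and the model alias bedrock-claude (which would have to be defined in your proxy config) are assumptions.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build, but do not send, an OpenAI-format chat completions request."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


# Direct to OpenAI versus through a (hypothetical) local proxy.
direct = build_chat_request("https://api.openai.com/v1", "sk-openai-placeholder", "gpt-4o", "Hi")
via_proxy = build_chat_request("http://localhost:4000", "sk-proxy-placeholder", "bedrock-claude", "Hi")
print(direct.full_url)     # https://api.openai.com/v1/chat/completions
print(via_proxy.full_url)  # http://localhost:4000/chat/completions
```

Only the URL, key, and model name differ; the request body has the same shape either way, which is what lets the proxy sit in front of non-OpenAI providers.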

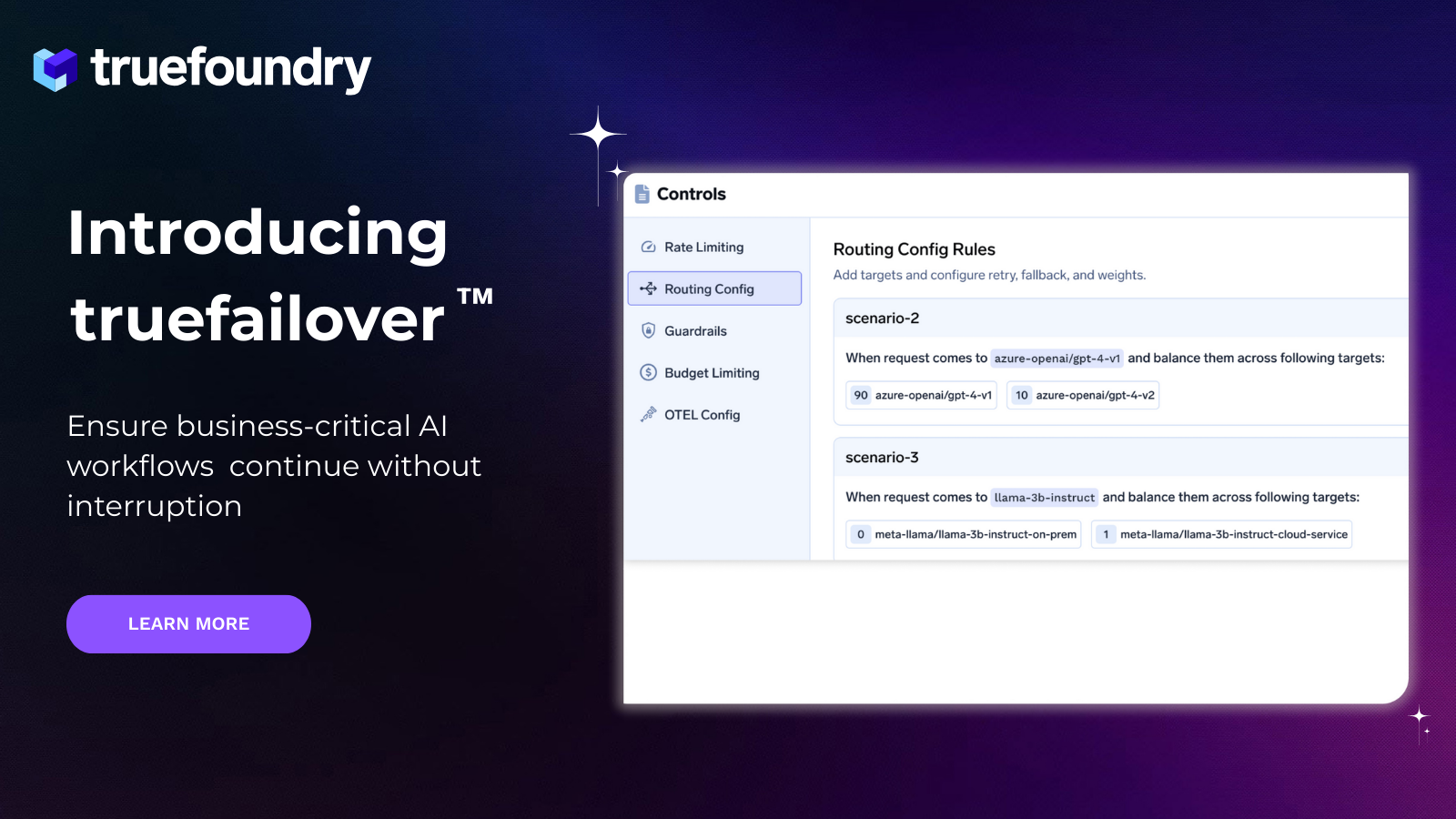

2. Load Balancing and Fallbacks

Writing retry logic is boring and error-prone. LiteLLM handles this at the config level. You can define a list of models, and if your primary Azure deployment throws a 429 (Rate Limit) error, LiteLLM automatically reroutes the request to a backup provider or a different region. It keeps your app up without you needing to write custom exception handlers for every possible failure mode.
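LiteLLM lets you declare fallbacks in config, so you normally don't write this loop yourself. As a rough, hypothetical sketch of the behavior being configured, the code below tries deployments in order and moves on when one signals a 429. The deployment names and the RateLimitError class are local stand-ins, not LiteLLM identifiers.

```python
class RateLimitError(Exception):
    """Local stand-in for a provider returning HTTP 429 (not litellm's own type)."""


def call_with_fallbacks(deployments, request):
    """Try each (name, callable) pair in order; on a 429, move on to the next one."""
    errors = []
    for name, call in deployments:
        try:
            return name, call(request)
        except RateLimitError as exc:
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all deployments rate-limited: " + "; ".join(errors))


# Hypothetical deployments: the primary is throttled, the backup answers.
def azure_eastus(request):
    raise RateLimitError("429 Too Many Requests")


def bedrock_us_west(request):
    return f"answer to {request!r}"


used, answer = call_with_fallbacks(
    [("azure-eastus", azure_eastus), ("bedrock-us-west", bedrock_us_west)], "ping"
)
print(used, answer)  # bedrock-us-west answer to 'ping'
```

This sketch only reacts to 429s; a production router would usually also treat timeouts and 5xx errors as reasons to fall back, and put a failing deployment on a short cooldown.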

3. Open-Source Control

If you are working in a paranoid environment (Defense, Health, Finance), you can't use a SaaS gateway. You need to inspect the code. LiteLLM is open source, which means you can audit exactly how it handles your keys and data. There is no telemetry sending your prompts to a third-party server unless you configure it that way. For air-gapped setups, this is often the only viable option.

The Operational Burden of Running LiteLLM Yourself

Here is the part the README glosses over. Running a pip install is easy. Running a high-availability proxy server in production is a job.

1. The Redis and Postgres Requirement

You can't just deploy the LiteLLM container and walk away. To make it actually useful (caching, rate limiting, logging), you need infrastructure. You need a Redis instance for the cache and the rate limit counters. You need a PostgreSQL database to store the spend logs and API keys. Now you aren't just an AI engineer; you're managing database migrations, backups, and connection pooling. If Redis dies, your latency spikes or your rate limits fail.
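The "if Redis dies" problem comes down to a policy choice you have to make explicitly. Below is a minimal fixed-window rate limiter sketch in which an in-memory class stands in for Redis (a real deployment would typically use Redis INCR plus an EXPIRE on each window key). When the counter store is unreachable, the limiter either fails open (traffic flows, limits stop being enforced) or fails closed (requests are rejected). All names here are hypothetical, and this is not how LiteLLM implements its limits.

```python
import time


class CounterStore:
    """In-memory stand-in for Redis; set `available = False` to simulate an outage."""

    def __init__(self):
        self.counts = {}  # note: unlike Redis with EXPIRE, old windows never expire here
        self.available = True

    def incr(self, key: str) -> int:
        if not self.available:
            raise ConnectionError("counter store unreachable")
        self.counts[key] = self.counts.get(key, 0) + 1
        return self.counts[key]


def allow_request(store, api_key, limit_per_minute, fail_open=True, now=None):
    """Fixed-window limit: at most `limit_per_minute` requests per key per minute."""
    now = time.time() if now is None else now
    window = int(now // 60)  # all requests in the same minute share one counter
    try:
        return store.incr(f"{api_key}:{window}") <= limit_per_minute
    except ConnectionError:
        # Fail open: keep serving, but limits are no longer enforced.
        # Fail closed: reject traffic until the store comes back.
        return fail_open


store = CounterStore()
print([allow_request(store, "sk-team-a", 2, now=0) for _ in range(3)])  # [True, True, False]
store.available = False
print(allow_request(store, "sk-team-a", 2, now=0, fail_open=True))   # True
print(allow_request(store, "sk-team-a", 2, now=0, fail_open=False))  # False
```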

2. The Enterprise Feature Wall

LiteLLM follows the "Open Core" model. The free version gives you the proxy. But if you want the features your CISO asks for, such as Single Sign-On (SSO), Role-Based Access Control (RBAC), and team-level budget enforcement, you hit a paywall. You can't just plug your corporate Okta setup into the open-source version. Scaling this to 500 engineers without these governance features turns into a nightmare of master keys shared in Slack.

How Much Does LiteLLM Cost?

LiteLLM pricing is straightforward: free for hackers, custom for companies.

Community Edition (Free)

This costs $0. You grab the Docker image, and you run it. You pay for your own AWS/GCP infrastructure to host it. You get the routing, the load balancing, and basic logging. You do not get the admin UI for managing teams, SSO, or the advanced data retention policies.

Enterprise Edition (Paid)

This is "Contact Sales" territory. You are paying for the "LiteLLM Enterprise" license. This unlocks the governance features: Okta/Google SSO, granular RBAC (who can use which model), and enterprise support. It basically turns the open-source tool into a corporate-compliant platform.
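"Who can use which model" boils down to an allow-list check on every request. Here is a hypothetical sketch of that check; the Team type, team names, and model names are illustrative only and do not reflect LiteLLM Enterprise's data model.

```python
from dataclasses import dataclass, field


@dataclass
class Team:
    """A team and the models its members are allowed to call."""
    name: str
    allowed_models: set = field(default_factory=set)


def authorize(team: Team, model: str) -> None:
    """Raise if the team is not permitted to call this model."""
    if model not in team.allowed_models:
        raise PermissionError(f"team {team.name!r} may not call {model!r}")


interns = Team("interns", {"gpt-4o-mini"})
prod_app = Team("prod-app", {"gpt-4o", "gpt-4o-mini", "claude-3-5-sonnet"})

authorize(interns, "gpt-4o-mini")  # allowed, returns None
try:
    authorize(interns, "gpt-4o")
except PermissionError as exc:
    print(exc)  # team 'interns' may not call 'gpt-4o'
```

The check itself is trivial; the enterprise value is in wiring it to your identity provider so teams and roles come from SSO groups rather than hand-edited config.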

Is LiteLLM Production Ready? (The Verdict)

The code works. The routing logic is solid. But "Production Ready" is about your team, not just the software.

If you self-host this, you own the uptime. You are the one getting paged when the Postgres disk fills up with logs. You are the one patching the Docker container. There is no SLA on the community edition. If you have a solid DevOps team who loves managing stateful workloads on Kubernetes, go for it. If you just want to ship AI apps, the maintenance burden is higher than it looks.
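The "disk fills up with logs" failure is usually handled with a scheduled retention job. The sketch below assumes a hypothetical request_log table with a Unix-timestamp column, uses in-memory sqlite3 in place of Postgres, and deletes rows older than a cutoff. On a real Postgres instance, deleted rows only free disk space after vacuuming, and the timestamp column needs an index; this sketch ignores both.

```python
import sqlite3
import time

DAY = 24 * 60 * 60  # seconds

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE request_log (id INTEGER PRIMARY KEY, created_at REAL, payload TEXT)")


def purge_old_logs(conn, retention_days, now=None):
    """Delete log rows older than the retention window; return how many were removed."""
    now = time.time() if now is None else now
    cutoff = now - retention_days * DAY
    cur = conn.execute("DELETE FROM request_log WHERE created_at < ?", (cutoff,))
    conn.commit()
    return cur.rowcount


now = 1_700_000_000
db.executemany(
    "INSERT INTO request_log (created_at, payload) VALUES (?, ?)",
    [(now - 45 * DAY, "old"), (now - 10 * DAY, "recent"), (now, "new")],
)
print(purge_old_logs(db, retention_days=30, now=now))  # 1
```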

TrueFoundry: A Better LiteLLM Alternative

If you want the benefits of LiteLLM (the routing, the flexibility) but you don't want to carry a pager for a Redis cluster, TrueFoundry is the managed alternative. We effectively wrap the functionality of an AI gateway into a managed control plane.

Batteries Included (No DB Management)

We run the control plane. You don't need to provision Redis or Postgres. You don't need to worry about database scaling or log rotation. We handle the stateful parts of the gateway, while the data plane runs in your cloud. You get the interface and the routing without the operational heavy lifting.

Enterprise Features Included

We don't gate security behind a "Talk to Sales" wall for every little feature. SSO, RBAC, and team-level budgets come standard for enterprise users. You can set a budget of $50 for the intern team and $5,000 for the production app, and the gateway enforces it automatically. It’s built for multi-tenant organizations from day one.
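Budget enforcement of this kind is conceptually simple. The following is a generic, hypothetical sketch (not TrueFoundry's implementation) of a ledger that rejects any charge that would push a team past its limit, using the $50 and $5,000 figures above. A real gateway also has to price a call before it runs, or reconcile afterwards, which this sketch glosses over.

```python
class BudgetExceeded(Exception):
    """Raised when a charge would push a team past its budget."""


class BudgetLedger:
    """Tracks spend per team against a fixed USD budget."""

    def __init__(self, budgets_usd: dict):
        self.budgets = dict(budgets_usd)
        self.spent = {team: 0.0 for team in self.budgets}

    def charge(self, team: str, cost_usd: float) -> None:
        # Check before recording, so a rejected call never counts as spend.
        if self.spent[team] + cost_usd > self.budgets[team]:
            raise BudgetExceeded(
                f"{team}: ${self.spent[team] + cost_usd:,.2f} would exceed "
                f"${self.budgets[team]:,.2f}"
            )
        self.spent[team] += cost_usd


ledger = BudgetLedger({"intern-team": 50, "production-app": 5_000})
ledger.charge("intern-team", 30)
try:
    ledger.charge("intern-team", 25)
except BudgetExceeded as exc:
    print(exc)  # intern-team: $55.00 would exceed $50.00
ledger.charge("production-app", 1_200)
```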

Beyond the Proxy (Model Hosting)

LiteLLM is just a proxy; it doesn't run models. TrueFoundry does both. We can route to OpenAI, but we can also spin up a Llama 3 endpoint on a Spot Instance in your AWS account. This gives you a single platform for both API consumption and self-hosted inference, allowing you to optimize costs by moving workloads off public APIs entirely when needed.

Comparing LiteLLM Self-Hosted vs TrueFoundry

Table 1: Operational Comparison

When Is LiteLLM the Right Choice?

LiteLLM is the right tool if you are a small team or a solo dev. If you are building an internal hackathon project, just use the SDK. If you are a startup with strong DevOps chops and you want to avoid SaaS fees at all costs, self-hosting the proxy is a viable path. It gives you raw control, provided you are willing to do the maintenance work.

When Teams Outgrow LiteLLM

You typically outgrow the self-hosted setup when governance requirements kick in: when you need to track spend across 20 different cost centers, integrate with Active Directory, or get 99.99% uptime guarantees without managing the HA setup yourself. That’s when teams switch.
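It helps to translate an uptime percentage into an actual downtime budget. The arithmetic is just (1 - availability) × period, shown here for three and four nines over a 365-day year and a 30-day month:

```python
def downtime_budget_minutes(availability: float, period_minutes: float) -> float:
    """Allowed downtime, in minutes, for an availability target over a period."""
    return (1 - availability) * period_minutes


MINUTES_PER_YEAR = 365 * 24 * 60   # 525,600
MINUTES_PER_MONTH = 30 * 24 * 60   # 43,200 (30-day month)

for target in (0.999, 0.9999):
    yearly = downtime_budget_minutes(target, MINUTES_PER_YEAR)
    monthly = downtime_budget_minutes(target, MINUTES_PER_MONTH)
    print(f"{target:.2%}: {yearly:.2f} min/year, {monthly:.2f} min/month")
# 99.90%: 525.60 min/year, 43.20 min/month
# 99.99%: 52.56 min/year, 4.32 min/month
```

At four nines, a single slow manual Postgres or Redis failover can consume the whole month's budget.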

Final Verdict: Build or Buy?

LiteLLM is a great piece of engineering. It solves the API fragmentation problem elegantly. But don't underestimate the difference between a Python library and a production gateway.

If you want to tinker, pip install litellm.

If you want a production gateway that handles the ops, security, and model hosting for you, look at a managed platform.

FAQs

Is LiteLLM completely free to use?

The code is open source (MIT), and using it is free. Running it isn’t: you pay for the cloud compute, the database storage, and the engineering hours to maintain it.

Do I need an Enterprise license for LiteLLM?

Only if you need the corporate stuff: SSO, RBAC, and official support. If you are just routing traffic for a single app, the free version is fine.

How difficult is it to self-host LiteLLM?

It's easy to start, hard to keep running. Spinning up Docker is trivial. Managing a production-grade Postgres and Redis cluster to ensure your API gateway never goes down is a proper engineering task.

What is the best alternative to LiteLLM?

TrueFoundry gives you the same routing capabilities but handles the infrastructure and security management for you, plus it adds the ability to host your own models.

Can I use LiteLLM for caching API responses?

Yes, but you have to bring your own Redis. The proxy has the logic, but you have to provide the storage.
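The "logic" half is mostly about deriving a stable cache key from each request. Here is a minimal sketch, assuming exact-match caching: the request is serialized canonically and hashed with SHA-256, and a plain dict stands in for Redis. The names are hypothetical. A shared store like Redis matters because every proxy replica needs to see the same entries, and a real cache would also set a TTL on each entry.

```python
import hashlib
import json


def cache_key(model: str, messages: list, **params) -> str:
    """Hash a canonical JSON form of the request so identical requests share a key."""
    canonical = json.dumps(
        {"model": model, "messages": messages, "params": params},
        sort_keys=True,
        separators=(",", ":"),
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ResponseCache:
    """Exact-match response cache; the dict stands in for Redis."""

    def __init__(self):
        self._store = {}
        self.hits = 0

    def get_or_call(self, call, model, messages, **params):
        key = cache_key(model, messages, **params)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        result = call(model, messages, **params)
        self._store[key] = result
        return result


upstream_calls = []


def fake_llm(model, messages, **params):
    """Hypothetical upstream call that records each time it is hit."""
    upstream_calls.append(model)
    return f"reply from {model}"


cache = ResponseCache()
msgs = [{"role": "user", "content": "What is LiteLLM?"}]
cache.get_or_call(fake_llm, "gpt-4o", msgs, temperature=0)
cache.get_or_call(fake_llm, "gpt-4o", msgs, temperature=0)    # served from cache
cache.get_or_call(fake_llm, "gpt-4o", msgs, temperature=0.7)  # different params, new call
print(len(upstream_calls), cache.hits)  # 2 1
```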

Built for Speed: ~10ms Latency, Even Under Load


- Handles 350+ RPS on just 1 vCPU — no tuning needed

- Production-ready with full enterprise support

TrueFoundry AI Gateway delivers ~3–4 ms latency, handles 350+ RPS on 1 vCPU, scales horizontally with ease, and is production-ready. LiteLLM, by contrast, suffers from high latency, struggles beyond moderate RPS, lacks built-in scaling, and is best suited to light or prototype workloads.