Data Residency Comparison for AI Gateways

Introduction

As AI adoption accelerates across enterprises, data residency has emerged as a critical requirement, driven by regulations like GDPR, data sovereignty laws, and sector-specific compliance mandates. Organizations operating across regions must ensure that sensitive data is processed, stored, and handled within approved geographic boundaries, not just for compliance but also to maintain customer trust and reduce regulatory risk.

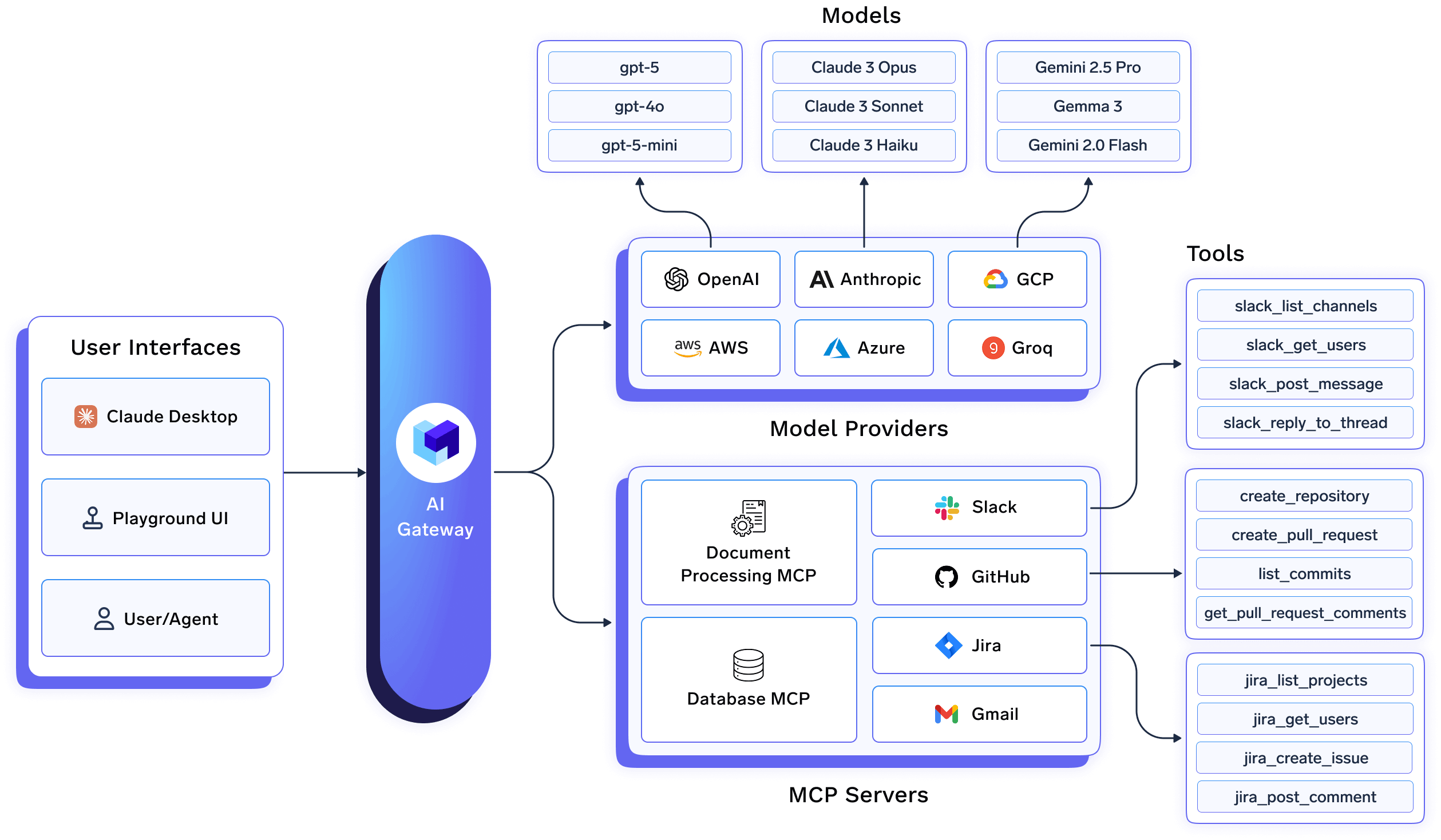

In modern AI systems, however, data residency is no longer determined solely by where models are hosted or which cloud provider is used. Instead, it is enforced at the AI Gateway layer - the control plane that sits between applications and model providers, handling request routing, inference execution, logging, and policy enforcement. Even when underlying models are compliant, data can still cross regions if the gateway layer is not explicitly designed to enforce residency constraints.

This makes AI Gateways a decisive component in meeting data residency requirements. Understanding how different AI Gateways handle regional deployment, request routing, and data flow is essential for enterprises evaluating AI infrastructure in regulated environments. This comparison examines how data residency is supported across AI Gateways, highlighting where guarantees are enforced and where they break down.

What Is Data Residency?

Data residency refers to the requirement that data must be processed, stored, and handled within a specific geographic region or jurisdiction. These requirements are typically dictated by national data protection laws, industry regulations, or contractual obligations with customers and partners.

In AI systems, data residency applies not just to where data is stored at rest, but also to where inference happens, how requests are routed, and where metadata such as logs or prompts are generated and retained. This distinction is critical: even if an organization uses regionally hosted models, data residency can still be violated if requests are routed through infrastructure outside the approved region.

This is where AI Gateways play a central role. An AI Gateway sits between applications and model providers, acting as the control plane for all AI traffic. It determines:

- Which model endpoint a request is sent to

- In which region inference is executed

- Whether prompts, responses, or logs are persisted

- How policies like regional restrictions are enforced

As a result, data residency in AI systems is effectively a gateway-level concern. Without explicit controls at the AI Gateway layer, organizations cannot reliably guarantee that sensitive data remains within required geographic boundaries, regardless of the compliance claims made by downstream model providers.
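
The four decisions listed above can be captured as a single policy object that the gateway evaluates on every request. The following is a minimal sketch under stated assumptions: `ResidencyPolicy`, `Endpoint`, and the region names are hypothetical and do not correspond to any particular gateway's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    """A model endpoint and the region where it runs inference (hypothetical)."""
    name: str
    region: str


@dataclass(frozen=True)
class ResidencyPolicy:
    """Hypothetical per-tenant residency policy evaluated by the gateway."""
    allowed_regions: frozenset
    persist_prompts: bool = False  # whether prompts and responses may be stored
    log_region: str = ""           # region where logs must be written

    def permits(self, endpoint: Endpoint) -> bool:
        """Return True only if inference would run in an approved region."""
        return endpoint.region in self.allowed_regions


eu_policy = ResidencyPolicy(
    allowed_regions=frozenset({"eu-west-1", "eu-central-1"}),
    persist_prompts=False,
    log_region="eu-west-1",
)

print(eu_policy.permits(Endpoint("model-a", "eu-central-1")))  # True
print(eu_policy.permits(Endpoint("model-a", "us-east-1")))     # False
```

Keeping routing, persistence, and logging rules in one object makes it harder for one of them to drift out of sync with the others.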

Why Data Residency Matters for AI Gateways

Data residency is not just a legal checkbox; it directly affects risk exposure, operational viability, and trust in enterprise AI deployments. For organizations operating in regulated industries or across multiple jurisdictions, failing to enforce residency requirements can lead to regulatory penalties, data access restrictions, or forced shutdowns of AI workloads.

From an AI Gateway perspective, data residency matters for several reasons:

1. Regulatory Compliance

Laws such as GDPR, HIPAA, and regional data sovereignty regulations require strict control over where personal or sensitive data is processed. Since AI Gateways route and broker inference requests, they are the primary mechanism through which these geographic constraints must be enforced.

2. Controlled Data Flow

AI workloads often involve dynamic routing across multiple model providers and regions. Without residency-aware routing at the gateway level, data can unintentionally cross borders during inference, retries, or failovers, even when storage remains local.

3. Auditability and Accountability

Enterprises must be able to demonstrate where data was processed and how it flowed through the system. AI Gateways that provide region-specific logging, traceability, and policy enforcement make it significantly easier to meet audit and reporting requirements.

4. Enterprise Trust and Risk Reduction

For customers in finance, healthcare, government, or defense, assurances around data residency are non-negotiable. AI Gateways that enforce residency by design reduce reliance on vendor assurances and minimize the risk of accidental policy violations.

In practice, data residency guarantees are only as strong as the AI Gateway enforcing them. This makes residency support a key evaluation criterion when comparing AI Gateways for enterprise and regulated deployments.

AI Gateway Deployment Models and Their Impact on Data Residency

How an AI Gateway is deployed plays a decisive role in whether data residency guarantees can be reliably enforced. Different deployment models offer varying levels of control, isolation, and operational complexity.

1. Fully Managed (SaaS) AI Gateways

In this model, the AI Gateway is operated as a managed service by the vendor, typically with a centralized control plane.

Pros

- Low operational overhead

- Fast to adopt

Residency considerations

- Limited control over where control-plane data is processed

- Regional guarantees depend heavily on vendor architecture

- Often suitable only for low-to-medium sensitivity workloads

2. Regionally Deployed AI Gateways (VPC-based)

Here, the AI Gateway is deployed within a customer-controlled VPC in a specific region, while still integrating with managed services.

Pros

- Stronger residency guarantees

- Control over inference routing and logs

- Easier compliance with regional regulations

Residency considerations

- Requires more operational setup

- Residency depends on correct gateway configuration and enforcement

3. On-Premise or Air-Gapped AI Gateways

For highly regulated environments, AI Gateways may be deployed fully on-premise or in air-gapped environments.

Pros

- Maximum control over data flow

- Strongest residency and sovereignty guarantees

Residency considerations

- Highest operational complexity

- Limited access to certain managed model providers

AI Gateway Data Residency Comparison

To understand how data residency is enforced in practice, it’s important to compare AI Gateways as architectural control planes, not just as feature checklists. Below is a comparison of five commonly used AI Gateways, assessed on how they handle regional deployment, request routing, and data flow.

- TrueFoundry AI Gateway

- Kong AI Gateway

- Portkey

- Vercel AI Gateway

- OpenRouter

1. TrueFoundry AI Gateway

TrueFoundry’s AI Gateway is purpose-built for enterprises operating under strict data residency and regulatory constraints, where guarantees must be enforced by architecture rather than policy statements. Unlike SaaS-first gateways, TrueFoundry allows the gateway itself to be deployed regionally (VPC or on-prem), ensuring that inference routing, policy enforcement, and audit logging all remain within approved geographic boundaries.

Data residency is enforced end-to-end at the gateway layer - including region-locked inference routing, strict prevention of cross-region fallbacks, fine-grained control over prompt and response persistence, and region-local audit logs. This makes residency guarantees verifiable and auditable, not assumed. As a result, TrueFoundry is well-suited for regulated industries such as financial services, healthcare, and government, where compliance requirements extend beyond storage to real-time data processing and inference.

Strength: Architecturally enforced data residency with regional isolation, auditability, and enterprise-grade controls.

2. Kong AI Gateway

Kong extends its API gateway model to AI workloads, offering flexibility in deployment. While it can support regional setups, data residency enforcement depends heavily on how policies and routing are configured. The risk of misconfiguration is higher than with opinionated, residency-first gateways.

Strength: Flexible, infrastructure-agnostic

Trade-off: Residency guarantees are not enforced by default

3. Portkey

Portkey operates as a managed AI gateway focused on observability and routing convenience. While it offers useful abstractions, control-plane operations remain global, which limits its suitability for strict residency requirements.

Strength: Easy adoption, multi-provider routing

Trade-off: Limited residency enforcement and regional isolation

4. Vercel AI Gateway

Vercel’s AI Gateway is optimized for developer experience and frontend-centric AI use cases. Residency controls are largely abstracted away, making it difficult to enforce or audit regional guarantees.

Strength: Seamless developer integration

Trade-off: Not designed for regulated or residency-sensitive workloads

5. OpenRouter

OpenRouter functions primarily as a routing layer across multiple model providers. It does not provide explicit residency enforcement, regional deployment control, or audit guarantees, making it unsuitable for enterprise compliance scenarios.

Strength: Broad model access

Trade-off: No data residency guarantees

How to Choose an AI Gateway for Data Residency

For enterprises operating in regulated or multi-region environments, choosing an AI Gateway is as much a compliance decision as it is a technical one. Below is a practical checklist buyers can use to evaluate whether an AI Gateway can truly meet data residency requirements.

1. Can the Gateway Be Deployed Regionally?

Start with deployment. Ask whether the AI Gateway itself can be:

- Deployed per region (VPC, private cloud, or on-prem)

- Isolated by geography rather than managed globally

- Operated without a centralized control plane outside the approved region

If the gateway cannot be regionally deployed, residency guarantees are inherently limited.

2. Is Inference Routing Explicitly Region-Locked?

Many gateways support multi-region routing, but fewer enforce hard regional boundaries.

Key questions to ask:

- Can inference be strictly pinned to approved regions?

- Are cross-region fallbacks disabled by default?

- What happens during retries, failures, or traffic spikes?

Residency-safe gateways enforce locality even under failure conditions.
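
To make "locality even under failure conditions" concrete, here is a minimal sketch of a router that fails closed. The `route` function, the `ResidencyViolation` exception, and the endpoint and region names are assumptions made for illustration, not the behavior of any specific gateway.

```python
class ResidencyViolation(Exception):
    """Raised when no in-region endpoint can serve a request."""


def route(endpoints, allowed_regions, is_healthy):
    """Pick the first healthy endpoint inside the approved regions.

    endpoints: list of (name, region) tuples in priority order.
    is_healthy: callable taking an endpoint name and returning a bool.
    Fails closed: never falls back to an out-of-region endpoint.
    """
    in_region = [(n, r) for n, r in endpoints if r in allowed_regions]
    for name, region in in_region:
        if is_healthy(name):
            return name, region
    raise ResidencyViolation(
        f"no healthy endpoint in approved regions {sorted(allowed_regions)}"
    )


endpoints = [
    ("eu-primary", "eu-west-1"),
    ("us-backup", "us-east-1"),
    ("eu-secondary", "eu-central-1"),
]
allowed = {"eu-west-1", "eu-central-1"}

# Primary is down: the request goes to the other EU endpoint, not the US one.
print(route(endpoints, allowed, lambda name: name != "eu-primary"))
# ('eu-secondary', 'eu-central-1')

# Every EU endpoint is down: the request is rejected instead of leaving the EU.
try:
    route(endpoints, allowed, lambda name: name == "us-backup")
except ResidencyViolation as exc:
    print("rejected:", exc)
```

The design choice is to filter candidates by region before checking health, so an out-of-region endpoint is never even considered during retries or failover.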

3. Where Are Logs, Prompts, and Metadata Stored?

Residency violations often occur through logs and metadata, not model outputs.

Buyers should verify:

- Whether prompts and responses are stored at all

- Where logs and traces are written

- Whether retention policies are configurable per region

Fine-grained control here is essential for compliance audits.
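
The three checks above map onto per-region logging settings. The sketch below assumes a hypothetical `LOG_SETTINGS` table and `build_log_record` helper; the sink names and retention values are placeholders, not recommendations.

```python
# Hypothetical per-region logging settings: sink location, retention,
# and whether prompt content may be stored at all.
LOG_SETTINGS = {
    "eu-west-1": {"sink": "logs-eu-west-1", "retention_days": 30, "store_prompts": False},
    "us-east-1": {"sink": "logs-us-east-1", "retention_days": 90, "store_prompts": True},
}


def build_log_record(region, request_id, prompt):
    """Build a log record destined for the sink in the request's own region."""
    settings = LOG_SETTINGS[region]  # raises KeyError for an unconfigured region
    record = {
        "request_id": request_id,
        "region": region,
        "sink": settings["sink"],
        "retention_days": settings["retention_days"],
    }
    # Prompt content is included only where the region's settings allow it.
    if settings["store_prompts"]:
        record["prompt"] = prompt
    return record


record = build_log_record("eu-west-1", "req-1", "example prompt text")
print(record["sink"], "prompt" in record)  # logs-eu-west-1 False
```

Looking up settings by the request's region, and failing on an unknown region, avoids silently writing logs to a default sink elsewhere.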

4. Are Residency Policies Enforced or Merely Configured?

There is a difference between configurable settings and enforced guarantees.

Look for:

- Hard policy enforcement at the gateway layer

- Guardrails that prevent misconfiguration

- Clear failure modes when policies would be violated

If residency depends on “best effort” configuration, risk remains.
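
One way to picture the difference between "configured" and "enforced" is a gateway that validates its configuration and refuses to start when a rule could be violated. This is a sketch only; the config keys and the `validate_config` and `load_config` functions are hypothetical.

```python
def validate_config(config):
    """Return a list of residency problems; an empty list means none were found.

    config is a hypothetical dict with keys:
      allowed_regions: set of region names
      routes: list of dicts with "endpoint" and "region"
      log_region: region where logs are written
    """
    allowed = config["allowed_regions"]
    problems = []
    for route in config["routes"]:
        if route["region"] not in allowed:
            problems.append(
                f"route {route['endpoint']} targets {route['region']}, "
                "outside allowed regions"
            )
    if config["log_region"] not in allowed:
        problems.append(
            f"logs are written to {config['log_region']}, outside allowed regions"
        )
    return problems


def load_config(config):
    """Refuse to start with a config that could violate residency."""
    problems = validate_config(config)
    if problems:
        raise ValueError("; ".join(problems))
    return config


bad_config = {
    "allowed_regions": {"eu-west-1"},
    "routes": [
        {"endpoint": "eu-primary", "region": "eu-west-1"},
        {"endpoint": "us-fallback", "region": "us-east-1"},
    ],
    "log_region": "us-east-1",
}

try:
    load_config(bad_config)
except ValueError as exc:
    print("startup refused:", exc)
```

Rejecting the configuration up front gives the clear failure mode described above: the problem surfaces at deploy time rather than as a silent cross-region request.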

5. Can Residency Be Audited and Proven?

Finally, ask how residency claims can be validated.

Strong AI Gateways provide:

- Region-specific audit logs

- Clear visibility into where inference occurred

- Evidence suitable for compliance reviews and regulators

If residency cannot be demonstrated, it will be difficult to defend during audits.
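
As an illustration of what auditable evidence could look like, the sketch below summarizes a request-level audit log by inference region and flags anything outside the approved set. The record fields (`request_id`, `inference_region`) and the `residency_report` function are assumptions, not a standard log schema.

```python
from collections import Counter


def residency_report(audit_log, allowed_regions):
    """Summarize where inference ran and list requests outside approved regions."""
    per_region = Counter(entry["inference_region"] for entry in audit_log)
    violations = [
        entry["request_id"]
        for entry in audit_log
        if entry["inference_region"] not in allowed_regions
    ]
    return {"per_region": dict(per_region), "violations": violations}


audit_log = [
    {"request_id": "req-1", "inference_region": "eu-west-1"},
    {"request_id": "req-2", "inference_region": "eu-central-1"},
    {"request_id": "req-3", "inference_region": "us-east-1"},
]

report = residency_report(audit_log, {"eu-west-1", "eu-central-1"})
print(report["violations"])  # ['req-3']
```

A report like this is only as trustworthy as the log behind it, so the audit log itself should be written and retained inside the approved region.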

Conclusion

Data residency in AI systems is no longer determined solely by where models are hosted or which cloud provider is used. In practice, it is the AI Gateway - the layer that routes requests, executes inference, and generates logs - that defines where data actually flows.

As this comparison shows, AI Gateways vary significantly in how they approach residency. Many optimize for developer convenience and abstraction, offering limited visibility or control over geographic enforcement. Others are designed with residency-first architecture, enabling enterprises to enforce, audit, and prove compliance across regions.

For organizations operating in regulated industries or handling sensitive data, data residency should be treated as a first-class evaluation criterion when selecting an AI Gateway. The right choice can reduce compliance risk, simplify audits, and provide long-term confidence as AI usage scales across geographies.

Ultimately, data residency guarantees are only as strong as the AI Gateway enforcing them.
