LLM Access Control: Securing Models and AI Workloads in Production

Introduction

As organizations adopt LLMs across teams and applications, access to models quickly becomes a security and governance concern. What begins as a single API key shared across services often turns into dozens of applications, agents, and workflows all invoking models with little visibility or control.

This creates real risk. Without proper access control, teams cannot easily restrict who can use specific models, prevent misuse by agents, or audit how AI systems are being accessed in production. Provider-level API keys and SDK permissions are not designed to handle these requirements at scale.

LLM access control addresses this gap by enforcing who can access which models, prompts, agents, and tools at runtime. Instead of relying on static credentials embedded in code, access decisions are evaluated centrally as requests are executed.

In this blog, we’ll explain what LLM access control means in practice, why it is difficult to implement in production systems, and how gateway-based architectures enable secure and auditable AI workloads.

What does LLM access control mean?

LLM Access Control is the framework that determines who or what is allowed to interact with your AI assets and under what specific conditions. In a traditional IT environment, we are used to controlling access to files or servers. In the AI era, the "asset" is more dynamic. It is a combination of raw intelligence (the model), autonomous capability (the agent), and external action (the tools).

To build a secure perimeter, access control must be enforced across these three critical dimensions.

Who Can Access Which Models?

Not every user in an organization needs access to every model. For instance, a developer testing a new feature might only need access to an open-source model like Llama 3, while a high-level data scientist might require the reasoning power of GPT-4o or Claude 3.5 Sonnet.

Access control at the model level allows you to gate-keep based on cost, sensitivity, and necessity. It prevents "model sprawl," where employees might experiment with unvetted third-party providers, and ensures that your most expensive tokens are reserved for the users who actually need them.

Who Can Deploy Agents?

Deploying an LLM-based agent is fundamentally different from using a simple chatbot. An agent is a persistent entity that can "think" through steps and execute workflows over time.

If access control is weak, any user could technically deploy an autonomous agent that runs in the background, potentially entering recursive loops or making thousands of unauthorized API calls.

Governance here means defining which teams have the "deployment" permission, ensuring that every agent has a clear owner and a strictly defined lifespan.

Who Can Invoke Tools?

This is the most critical layer of the three. When you give an LLM access to "tools" such as your CRM, your internal documentation, or your email server, you are effectively giving it a set of hands.

Granular access control means defining precisely which tools an agent can call. A customer support bot might have permission to read a knowledge base, but should be strictly blocked from writing to a production database.

Without tool-level permissions, a simple prompt injection attack could trick an agent into using its high-level privileges to exfiltrate data or delete critical records. True access control ensures that even if an LLM is "compromised" by a malicious prompt, its ability to cause damage is physically limited by its scoped permissions.

Common Access Control Challenges

As teams move LLM workloads into production, access control issues often emerge not from malicious intent, but from shortcuts taken during early experimentation. These gaps become serious liabilities as usage scales across teams, agents, and environments.

Shared API Keys

Many teams start with a single shared API key for a model provider across multiple services or developers. While convenient, this approach removes any notion of identity or accountability.

Shared keys make it impossible to distinguish between users, applications, or agents. If a key is leaked or misused, the entire system is exposed. Revoking access for one user typically means breaking access for everyone, which is operationally risky in production environments.

Missing Audit Trails

Enterprise security and compliance depend on the ability to answer a simple question: who accessed what, and when?

Without a centralized access control layer, LLM usage is spread across local environments, notebooks, CI pipelines, and third-party dashboards. This fragmentation makes it difficult to reconstruct events after an incident. If sensitive data is exposed or a model behaves unexpectedly, teams often lack the audit trail needed to trace the root cause.

For regulated industries, missing auditability is treated as a failure of the security posture, regardless of intent.

Over-Permissioned Agents

Agents often require elevated privileges to perform useful work, but they are frequently granted broader access than necessary. It is common to see agents deployed with unrestricted access to tools, data stores, or APIs simply to avoid configuration overhead.

This creates a high-risk scenario where powerful models, over-privileged tools, and prompt injection vulnerabilities combine to amplify impact. If an agent is manipulated through a malicious prompt, its excessive permissions allow it to cause real damage, such as data exfiltration or destructive actions. Limiting agent permissions is therefore critical to reducing blast radius.

Key Access Control Capabilities

Effective LLM access control requires multiple layers of enforcement that operate consistently across users, applications, and agents. These capabilities should be applied at runtime and integrated into existing enterprise identity and security systems.

Role-Based Access Control (RBAC)

RBAC ensures that permissions are tied to roles rather than individual users. In an AI context, this allows organizations to define clear boundaries between administrators, developers, and end users.

For example, developers may be allowed to experiment with models and prompts in non-production environments, while end users can only interact with approved agents. Integrating RBAC with existing identity providers enables automatic onboarding and revocation of access as team membership changes.

Environment Isolation

Separating development, staging, and production environments is essential for controlling risk. Access control policies should ensure that high-privilege models, tools, and credentials are only accessible from production environments with additional safeguards.

This prevents experimental workloads from accidentally interacting with sensitive production data and reduces the risk of unintended changes reaching end users.

Model-Level Permissions

Different models have different cost, capability, and data exposure profiles. Model-level permissions allow teams to restrict access based on these factors.

Expensive or sensitive models can be limited to specific teams or projects, while broader access can be granted to lower-cost or self-hosted models. This helps control spend and reduces exposure to external providers when not required.

Tool-Level Permissions

Tool-level access control defines what actions an agent can perform once it is invoked. Rather than granting broad API access, permissions should be scoped to specific functions or operations.

For example, an agent may be allowed to read from a document repository but blocked from modifying or deleting records. Enforcing permissions at this level limits the impact of incorrect reasoning or prompt manipulation and protects core systems even when agents behave unexpectedly.

LLM Access control via Gateways

Managing access control at the application level does not scale in production AI systems. When multiple teams, agents, and services directly integrate with different model providers, access policies become fragmented and difficult to enforce consistently.

An AI Gateway addresses this by acting as a centralized enforcement layer between applications and model providers. Instead of embedding credentials and permissions across services, access control is evaluated at runtime, before a request ever reaches a model.

Centralized Enforcement Point

The gateway serves as a single point of authentication and authorization for all LLM traffic. Provider credentials are stored securely within the gateway rather than distributed across application code.

Applications and agents authenticate with the gateway using managed identities. This allows security teams to revoke access, rotate provider keys, or update policies centrally without redeploying applications. If a service or agent is compromised, its access can be disabled immediately at the gateway layer.

Policy-Based Access Decisions

Beyond simple authentication, a gateway enables policy-driven access control. Each request can be evaluated against contextual attributes such as:

- User or service identity

- Team or project association

- Target model or provider

- Execution environment

Based on these attributes, the gateway can allow, deny, or reroute requests according to defined policies. This enables fine-grained control, such as restricting high-cost models to specific teams or preventing certain agents from accessing sensitive tools.

Runtime Auditing and Traceability

Because all requests pass through the gateway, it becomes the authoritative source of audit data. Every model invocation can be logged with full context, including who initiated the request, which model was accessed, and how the request was handled.

This centralized audit trail is critical for compliance and forensic analysis. It allows organizations to reconstruct events accurately and demonstrate controlled access to AI systems during security reviews or audits.

By shifting access control into the gateway, teams move from scattered, implicit permissions to explicit, enforceable policies that scale with system complexity and organizational growth.

How TrueFoundry Implements LLM Access Control

TrueFoundry takes the theoretical requirements of access control and turns them into a production-ready reality. Operating as a unified control plane, it allows platform teams to manage thousands of models and users from a single interface without introducing bottlenecking latency.

Gateway-Level Controls for Governance

TrueFoundry's AI Gateway provides several granular controls configured at the provider account level via the UI. These features ensure that governance is baked into the infrastructure rather than being an afterthought.

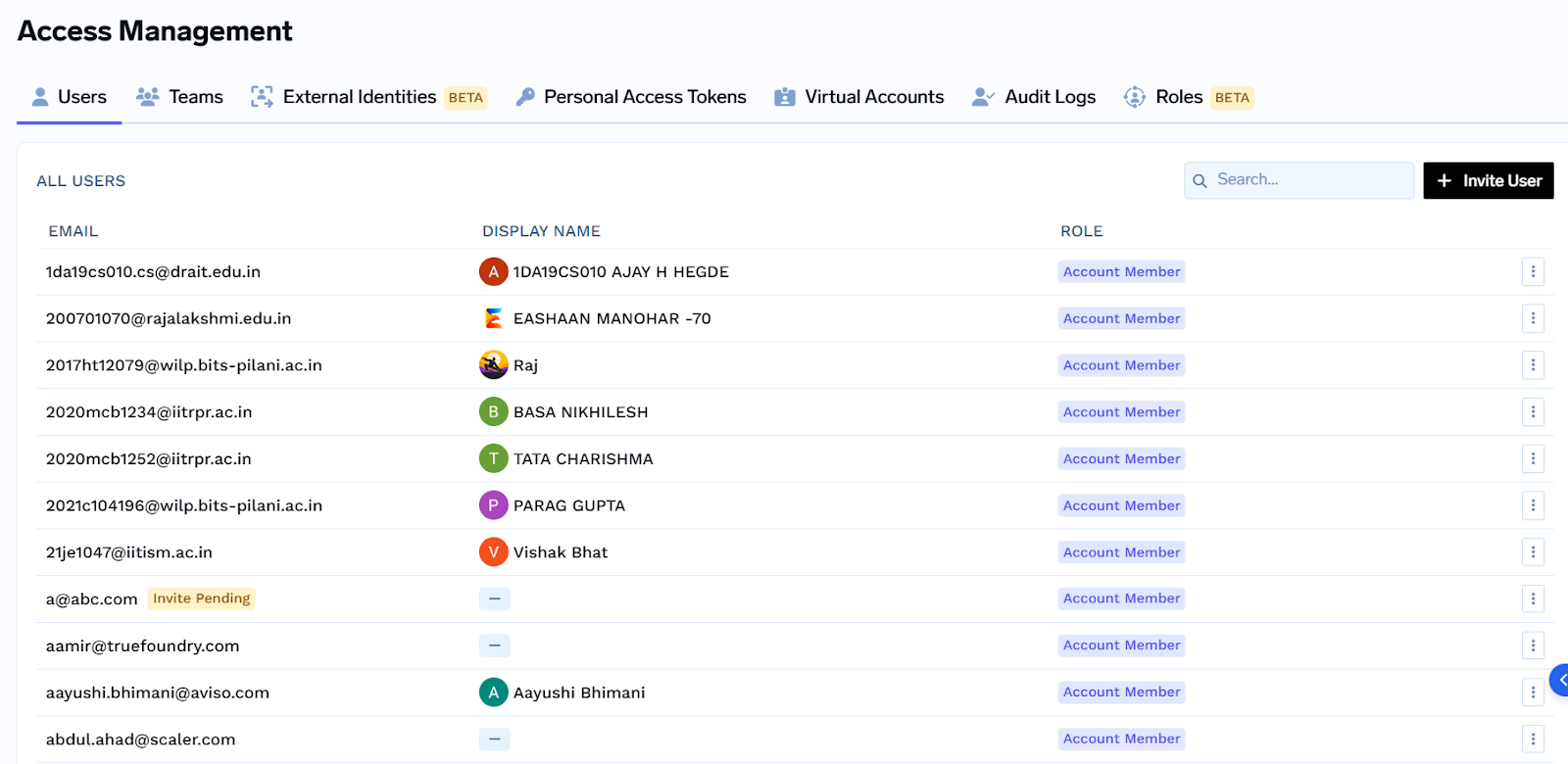

Access Control and Permissions The platform utilizes two distinct permission levels for provider accounts to separate administrative duties from everyday usage:

- Provider Account Manager: These users hold the keys to the kingdom. They can modify account settings, add or remove models, and manage access permissions for other team members.

- Provider Account User: These users can interact with models for inference but are strictly blocked from changing any underlying settings or security configurations.

Managed Access via Tokens To handle the different needs of developers and production systems, TrueFoundry offers two types of keys:

- Personal Access Tokens (PATs): These are tied to individual users. They are ideal for local testing and experimentation because they allow the organization to track usage per developer.

- Virtual Access Tokens (VATs): These are not tied to a specific person. They are the recommended choice for production applications. Since they are independent of individual employee accounts, your services won't break if a specific developer leaves the company.

Security and Compliance Readiness

Security in TrueFoundry is a multi-layered defense. It begins with enterprise-grade Authentication using OIDC, JWT, and managed API keys, ensuring that every request has a verified identity behind it. This is followed by Authorization through Role-Based Access Control (RBAC), which ensures that users only see the models and tools they are authorized to use.

To protect against emerging AI threats, the gateway integrates Guardrails for content safety. These include real-time PII detection to prevent sensitive data leaks, moderation to block toxic content, and specific filters to stop prompt injection attacks. Every interaction is recorded through Request and Response Logging, creating an immutable audit trail that is essential for compliance and forensic debugging.

Advanced Configuration Controls

Beyond simple access, the gateway provides technical controls to keep the system stable and cost-effective:

- Rate Limiting: Protect your infrastructure from abuse by setting limits on requests or tokens.

- Budget Controls: Define spend boundaries to prevent unexpected billing spikes.

- Load Balancing and Fallback: Automatically distribute traffic across healthy models and reroute requests if a specific provider fails.

Conclusion

Securing the enterprise AI frontier is not about slowing down innovation. It is about building the guardrails that make experimentation safe. By moving away from shared keys and towards a centralized, gateway-driven model, organizations can finally treat LLMs as first-class citizens in their security stack. With granular permissions and robust audit trails, the transition from prototype to production becomes a strategic advantage. True governance does not just protect your data; it empowers your teams to build with confidence.

Built for Speed: ~10ms Latency, Even Under Load

Blazingly fast way to build, track and deploy your models!

- Handles 350+ RPS on just 1 vCPU — no tuning needed

- Production-ready with full enterprise support

TrueFoundry AI Gateway delivers ~3–4 ms latency, handles 350+ RPS on 1 vCPU, scales horizontally with ease, and is production-ready, while LiteLLM suffers from high latency, struggles beyond moderate RPS, lacks built-in scaling, and is best for light or prototype workloads.