TrueFoundry AI Gateway integration with Last9

As generative AI moves into critical user journeys such as search, support, decision support, and automation, the tolerance for “best-effort” reliability disappears. Platform and SRE teams now need the same level of observability for LLM traffic that they already expect from core microservices:

- What is the end-to-end latency for each request path?

- Which models, tenants, or regions are driving error budgets?

- How do we correlate LLM behavior with the rest of the stack?

The integration between TrueFoundry AI Gateway and Last9 addresses exactly this problem. By exporting OpenTelemetry (OTEL) traces from the Gateway into Last9, teams gain deep, cost-efficient observability into all LLM traffic, without rewriting applications or scattering SDKs across services.

This article explains:

- What Last9 and TrueFoundry AI Gateway provide

- How the integration works at an architectural level

- A practical, step-by-step view of the setup

- The concrete benefits for SRE, platform, and AI teams

Last9: Observability Designed for High-Cardinality Systems

Last9 is a modern observability platform focused on high-performance telemetry management across logs, metrics, and traces. It is designed specifically for environments where cardinality and scale are non-negotiable.

Key capabilities relevant to LLM workloads include:

- High-cardinality handling: Last9 can ingest and query telemetry tagged with rich dimensions such as user, tenant, route, provider, model, and prompt version, without prohibitive performance or cost penalties.

- Unified telemetry: Logs, metrics, and traces live in a single platform, enabling teams to move seamlessly from an SLO breach or latency spike to the exact trace and span that caused it.

- OpenTelemetry-native design: Last9 is built around OTEL, making it straightforward to integrate any OTEL-speaking component.

This makes Last9 a natural fit for enterprises that are standardizing on OTEL across their infrastructure and want LLM observability to plug into that same strategy.

TrueFoundry AI Gateway: Unified Control Plane for LLM Traffic

TrueFoundry AI Gateway acts as a proxy layer between applications and LLM providers or MCP servers. It provides a unified, OpenAI-compatible interface to hundreds of models while centralizing governance, security, routing, and observability.

Core capabilities include:

- Unified API access across 250+ models and providers

- Low-latency routing and sophisticated load balancing

- Enterprise security: RBAC, audit logging, quota and cost controls

- Native observability with request/response logging, metrics, and traces

Crucially, AI Gateway can export OTEL traces to external systems, so your LLM telemetry becomes part of the same observability fabric as the rest of your infrastructure.

Integration Overview: How TrueFoundry and Last9 Work Together

At a high level, the integration is straightforward:

- Applications send all LLM traffic to TrueFoundry AI Gateway instead of directly to model providers.

- AI Gateway routes the request to the configured model (OpenAI, Claude, Gemini, self-hosted, etc.), applying routing, rate limits, and guardrails as needed.

- For each request, AI Gateway emits OpenTelemetry traces that capture spans for gateway handling, outbound model calls, MCP operations, and more.

- These OTEL traces are exported over HTTP to Last9’s OTLP endpoint.

- Inside Last9, traces are visualized in the Traces UI, with duration heatmaps, detailed trace lists, and span-level data for the tfy-llm-gateway service.

There are no code changes to application logic. Once the Gateway’s OTEL exporter is configured, every LLM request automatically becomes observable in Last9.
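To make the application side concrete, here is a minimal Python sketch of a request aimed at the Gateway rather than at a provider. It assumes the Gateway serves the OpenAI-style `/chat/completions` path under some base URL; the base URL, API key, and model name are hypothetical placeholders, not values from TrueFoundry's documentation. The request body is the same one an application would send to an OpenAI-compatible provider, so only the destination changes.

```python
import json
import urllib.request

# Hypothetical placeholders: substitute the values from your own Gateway setup.
GATEWAY_BASE_URL = "https://gateway.example.com/api/llm"
GATEWAY_API_KEY = "tfy-example-key"


def build_chat_request(prompt: str, model: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request aimed at the Gateway."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{GATEWAY_BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {GATEWAY_API_KEY}",
        },
        method="POST",
    )


request = build_chat_request("Summarize this ticket.", model="example-provider/example-model")
# Sending is one more step once real values are in place:
# with urllib.request.urlopen(request) as response:
#     print(response.read())
```

Because tracing happens at the Gateway, nothing in this snippet refers to OpenTelemetry or Last9.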

Prerequisites

To enable the integration, you’ll need:

- A TrueFoundry account with AI Gateway configured and at least one model provider set up. You can follow the Gateway Quick Start Guide in the TrueFoundry docs.

- A Last9 account with access to the Last9 dashboard.

With these in place, the rest of the configuration happens entirely through the respective UIs.

Step-by-Step Integration Guide

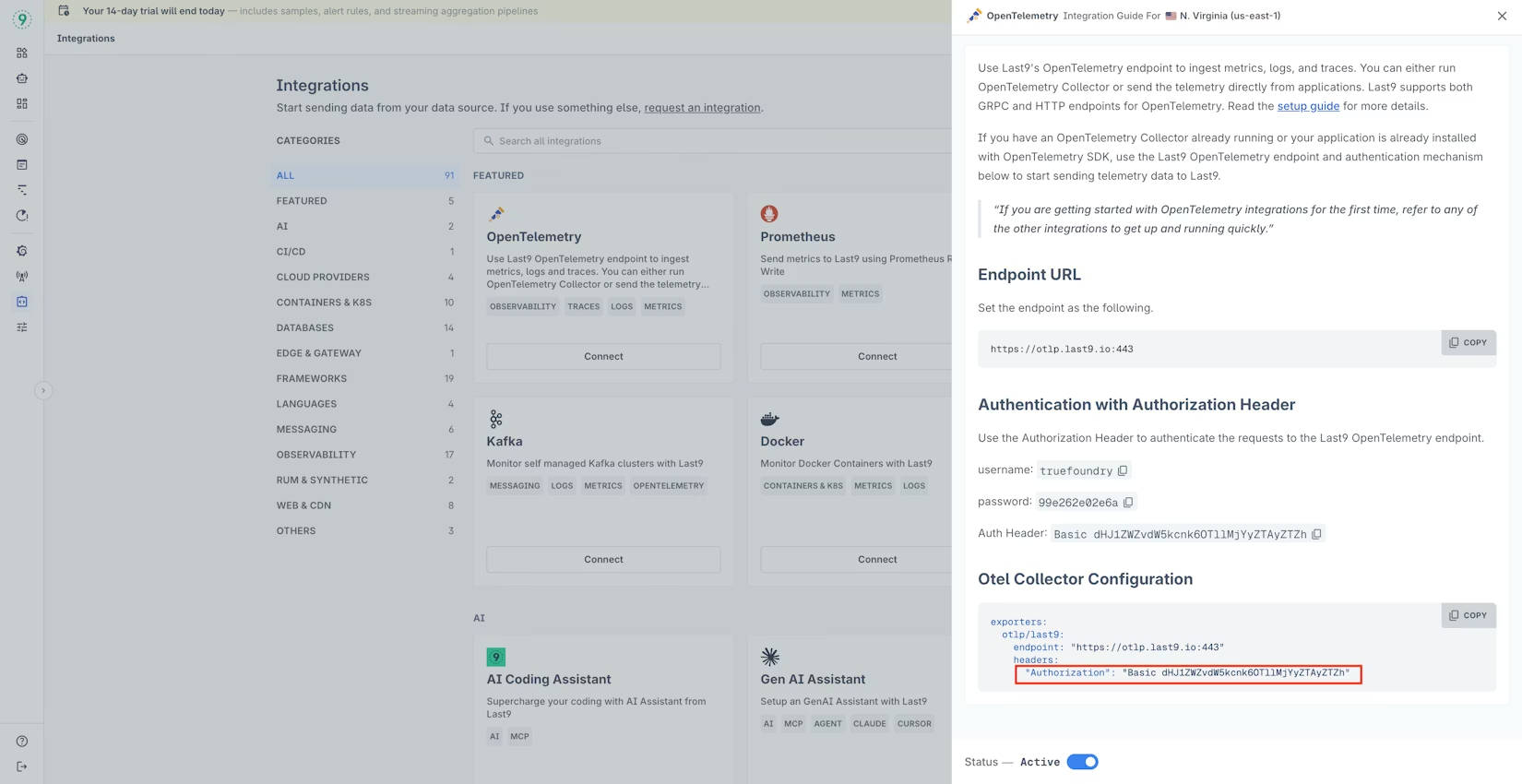

1. Retrieve the Last9 Authorization Header

From the Last9 dashboard:

- Log in to Last9.

- Navigate to Integrations in the left sidebar.

- Click Connect on the OpenTelemetry integration card.

- In the integration guide, locate “Authentication with Authorization Header.”

- Copy the provided Auth Header value, which is already formatted, for example:

Basic dHJ1ZWZvdW5kcnk6...

This header will be passed directly from TrueFoundry to Last9 for OTEL authentication.
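A copied header can pick up stray whitespace or lose its `Basic ` prefix on the way to another UI. The Python sketch below is one way to sanity-check the value before pasting it. It relies only on the standard HTTP Basic scheme, where the token is the base64 encoding of `user:password`; the credentials in the example are hypothetical and the helper name is illustrative.

```python
import base64
import binascii


def check_basic_auth_header(header_value: str) -> bool:
    """Return True if the value looks like 'Basic <base64 of user:password>'."""
    scheme, _, token = header_value.partition(" ")
    if scheme != "Basic" or not token:
        return False
    try:
        # validate=True rejects stray characters such as spaces or newlines.
        decoded = base64.b64decode(token, validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return False
    return ":" in decoded


# Hypothetical credentials, used only to produce a well-formed example value.
example = "Basic " + base64.b64encode(b"example-user:example-secret").decode("ascii")

print(check_basic_auth_header(example))           # True
print(check_basic_auth_header("example-secret"))  # False: scheme prefix missing
```

Run the check locally and avoid printing the decoded credentials, since the header is effectively a password.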

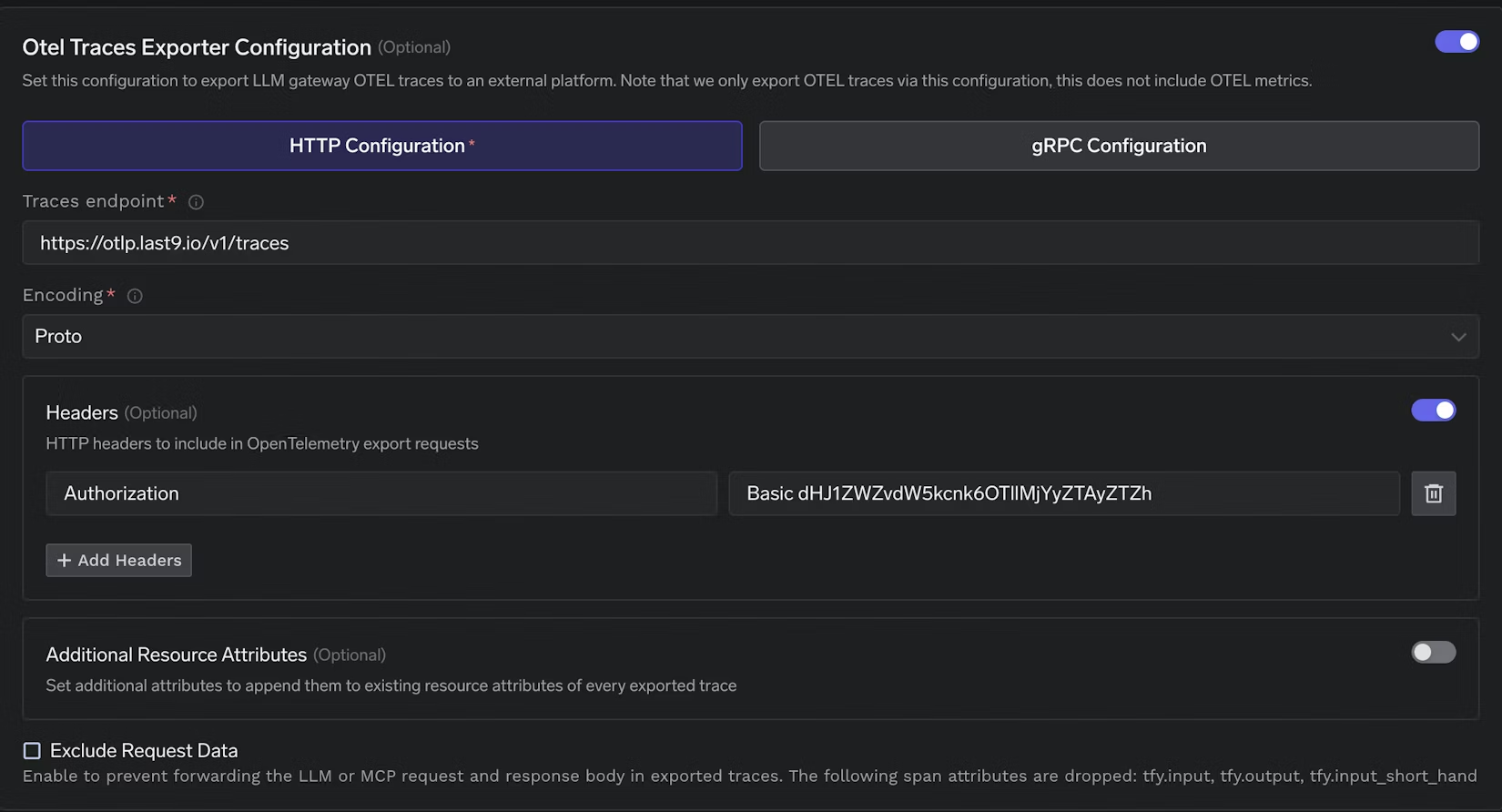

2. Configure OTEL Export in TrueFoundry AI Gateway

In the TrueFoundry console:

- Go to AI Gateway → Controls → OTEL Config.

- Enable the Otel Traces Exporter Configuration toggle.

- Select the HTTP Configuration tab.

3. Set the Last9 OTLP Endpoint

Under HTTP configuration, provide the following values:

- Traces endpoint: https://otlp.last9.io/v1/traces

- Encoding: Proto

This is Last9’s OTLP ingestion endpoint for traces.

4. Add the Required Authorization Header

In the same configuration screen, click “+ Add Headers” and add an Authorization header. For its value, paste the Auth Header exactly as copied from the Last9 UI (for example, Basic dHJ1ZWZvdW5kcnk6...). No additional formatting is required.

5. Save the Configuration

Click Save to apply the OTEL export settings. From this point onward, all LLM traces from the TrueFoundry AI Gateway will be exported to Last9.
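If you want to confirm the endpoint and header independently of the Gateway, one option is a small connectivity probe. The Python sketch below relies on a property of protobuf encoding: a message with no fields set serializes to zero bytes, so an empty POST body is a valid, empty OTLP trace export request. How Last9 responds to an empty export is an assumption here. The OTLP/HTTP specification calls for HTTP 200 on success, and an authentication failure usually surfaces as 401 or 403. The header value is a placeholder, and the function names are illustrative.

```python
import urllib.error
import urllib.request

LAST9_TRACES_ENDPOINT = "https://otlp.last9.io/v1/traces"  # from step 3
AUTH_HEADER = "Basic <value copied from Last9>"  # placeholder, from step 1


def build_probe(endpoint: str, auth_header: str) -> urllib.request.Request:
    """Build an empty OTLP/HTTP protobuf export request (zero-length body)."""
    return urllib.request.Request(
        url=endpoint,
        data=b"",  # an empty protobuf message encodes to zero bytes
        headers={
            "Content-Type": "application/x-protobuf",
            "Authorization": auth_header,
        },
        method="POST",
    )


def send_probe(request: urllib.request.Request) -> int:
    """Send the probe and return the HTTP status code."""
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code


probe = build_probe(LAST9_TRACES_ENDPOINT, AUTH_HEADER)
# print(send_probe(probe))  # uncomment after replacing AUTH_HEADER with a real value
```

The probe exports no spans, so nothing should appear in the Traces UI as a result of running it.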

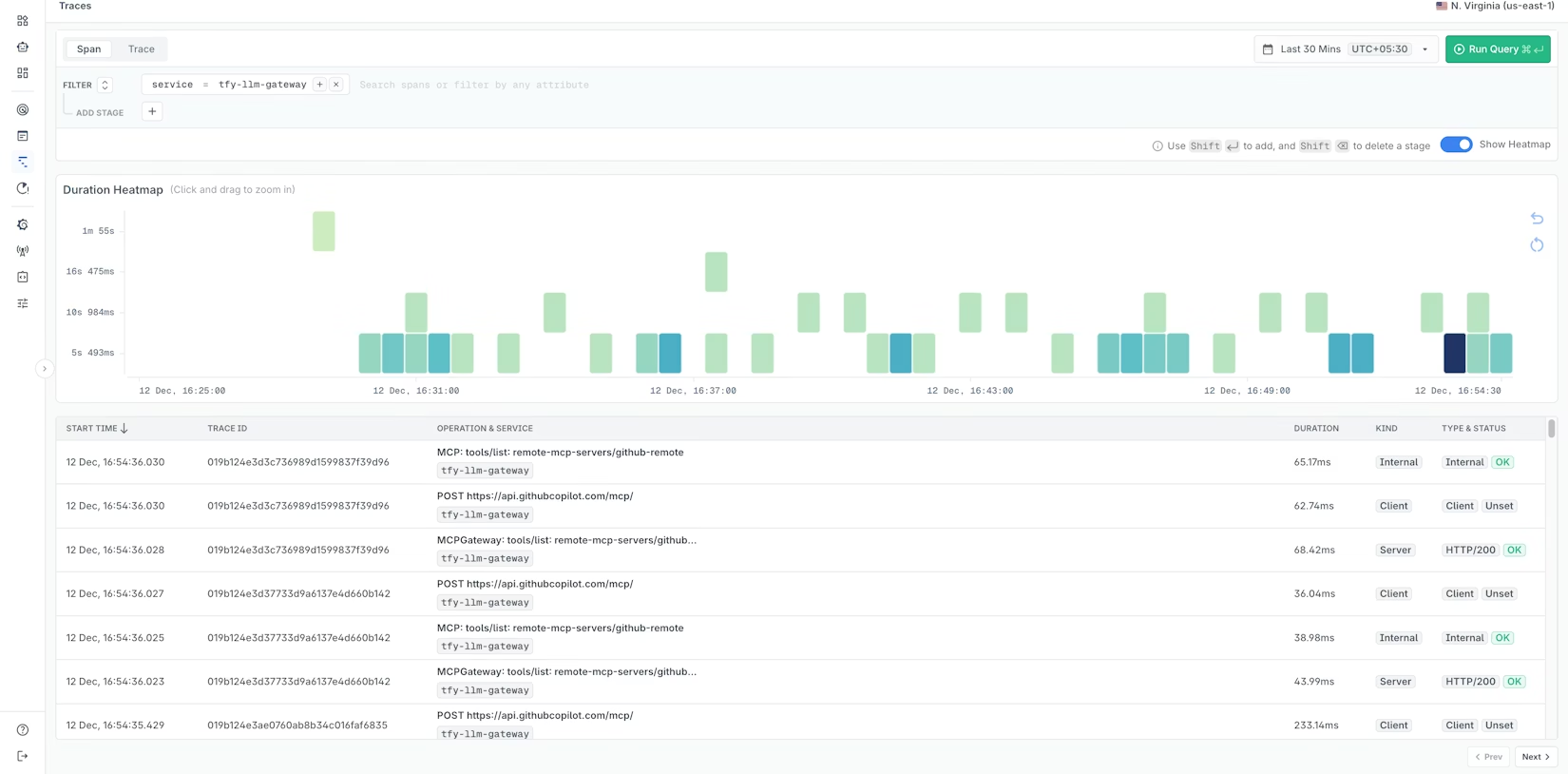

6. View LLM Traces in Last9

Once LLM traffic flows through the Gateway, open the Last9 dashboard:

- Navigate to the Traces section.

- Filter by service name: tfy-llm-gateway

- Explore:

  - Duration heatmap: visualize latency trends and outliers over time.

  - Trace details: see individual traces with operation names, durations, and status codes.

  - Span information: inspect spans for HTTP calls, MCP operations, and underlying LLM requests.

This gives you an end-to-end view of how the Gateway and downstream providers behave under real production conditions.

Advanced Configuration: Enriching Traces with Resource Attributes

TrueFoundry’s OTEL configuration supports Additional Resource Attributes, enabling you to attach custom metadata to every exported trace. This is particularly powerful when combined with Last9’s high-cardinality capabilities.

Typical attributes you may want to add include:

- env=prod, env=staging

- region=us-east-1, region=eu-west-1

- team=platform, team=search

- tenant_id=enterprise-customer-a

In Last9, these attributes can be used to:

- Compare latency or error rates across regions and environments

- Isolate incidents impacting a specific tenant or product surface

- Build dashboards per team or business unit without duplicating telemetry

By planning your attribute strategy upfront, you enable richer queries and faster root-cause analysis later.
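One way to plan attributes is to write them down per deployment and check them before entering them in the UI. The Python sketch below uses the attribute names mentioned above with hypothetical values, and renders them in the comma-separated `key=value` form that OpenTelemetry uses for its `OTEL_RESOURCE_ATTRIBUTES` environment variable. Whether TrueFoundry's Additional Resource Attributes field accepts that exact form is an assumption; the UI may take keys and values separately.

```python
def format_resource_attributes(attributes: dict[str, str]) -> str:
    """Render attributes as 'k1=v1,k2=v2', rejecting values that would break parsing."""
    parts = []
    for key, value in sorted(attributes.items()):
        if not key or not value:
            raise ValueError(f"empty key or value: {key!r}={value!r}")
        if any(ch in key + value for ch in ",= "):
            raise ValueError(f"unescaped ',', '=' or space in {key!r}={value!r}")
        parts.append(f"{key}={value}")
    return ",".join(parts)


# Hypothetical attribute set for one production Gateway deployment.
prod_us = {
    "env": "prod",
    "region": "us-east-1",
    "team": "platform",
    "tenant_id": "enterprise-customer-a",
}

print(format_resource_attributes(prod_us))
# env=prod,region=us-east-1,team=platform,tenant_id=enterprise-customer-a
```

Keeping one such dictionary per deployment makes it easier to spot inconsistent spellings (for example `prod` versus `production`) before they fragment your queries.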

What This Integration Delivers for Your Teams

For SRE and Platform Engineering

- Production-grade visibility into LLM traffic: Identify latency spikes, error hotspots, and saturation in real time, with full trace context behind each event.

- Faster incident response: Move from a failing SLO to the precise trace and span causing it—whether that’s an upstream service, a specific model provider, or a misconfigured route.

- Consistent tooling: Keep LLM observability within the same OTEL-based workflows and dashboards you use for the rest of your microservices.

For AI and Application Teams

- Safe experimentation with models and prompts: Roll out new model versions, routing rules, or prompt strategies via TrueFoundry, and observe the impact directly in Last9’s traces and heatmaps.

- Performance and cost awareness: Correlate slow or failing interactions with specific routes, tenants, or models, and feed those insights back into routing and caching policies in the Gateway.

- Cleaner separation of concerns: Developers focus on application logic and agent behavior; the Gateway and Last9 jointly handle routing, governance, and observability.
